Obstacles in camera blind spot are cleared from local costmap

I'm trying to implement obstacle avoidance using move_base package on ROS 1 Melodic. Current issue is that when the robot approaches a short obstacle, the obstacle is first correctly drawn to local costmap, but closer the robot gets to it, it starts to clear it from the costmap. I believe this happens because of the fact that the camera has a blind spot (~30cm) in front of the robot. When the camera doesn't see the obstacle anymore, move_base obstacle layer does raytracing over it to the next nearest obstacle and clears the closest one from the costmap. Desired behavior would be that the robot doesn't remove the obstacle from local costmap even when it is in the blind spot.

So the question is, is there a way to avoid this from happening? I believe one solution would be to have minimum distance for the raytracing (== 30cm, length of the blind spot), under which raytracing is not done, but move_base currently doesn't seem to have this sort of parameter.

I'm using Intel D435i depth camera which publishes a pointcloud from short distance and a laser scan from longer distance. This is how the obstacles layer looks like:

obstacles_laser:

observation_sources: camera_1_obstacles camera_1_laser

camera_1_laser: {data_type: LaserScan, clearing: true, marking: true, topic: /camera_1/laser_scan, obstacle_range: 5.5, raytrace_range: 6.0 }

camera_1_obstacles: {data_type: PointCloud2, clearing: true, marking: true, topic: /camera_1/obstacles_cloud, obstacle_range: 5.5, raytrace_range: 6.0}

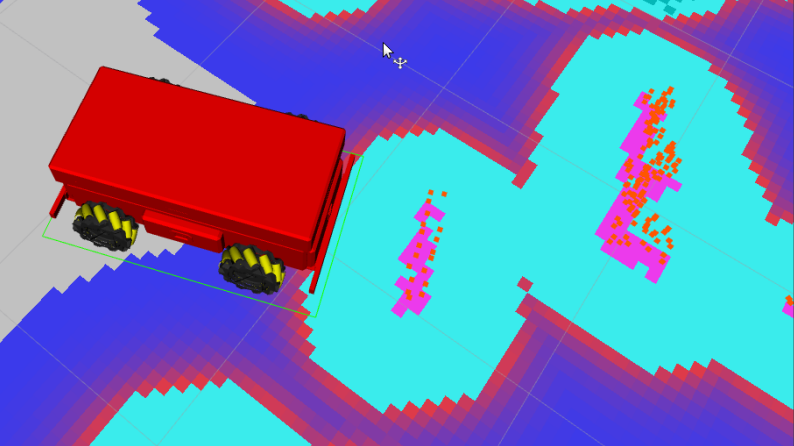

Robot approaching a short obstacle:

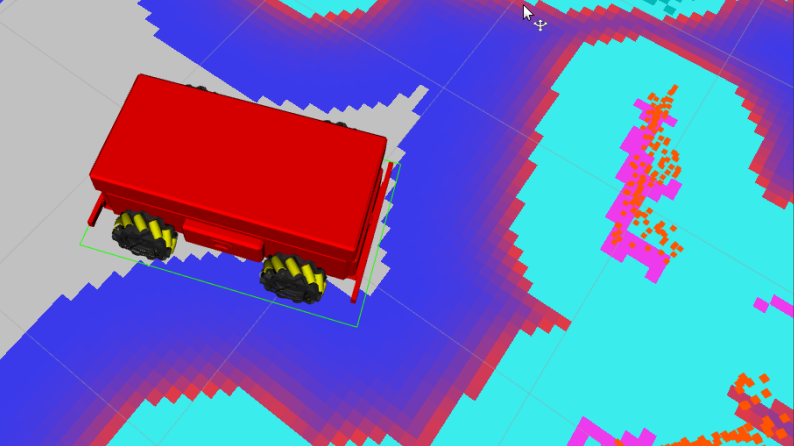

Robot has moved close enough for the obstacle to disappear from the local costmap

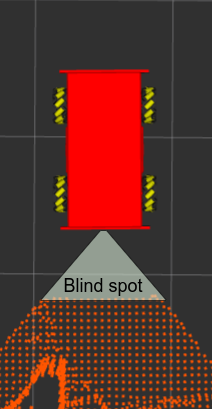

Visualization of the blind spot of the Intel camera. Visible area marked with orange points.