Bad MapCloud with RtabMap

Hi all,

I'm trying to use RtabMap for 3D map reconstruction in ROS Kinetic. My robot is equipped with:

- odometry (MD25 + EMG)

- lidar (RPLidar)

- depth camera (RealSense R200)

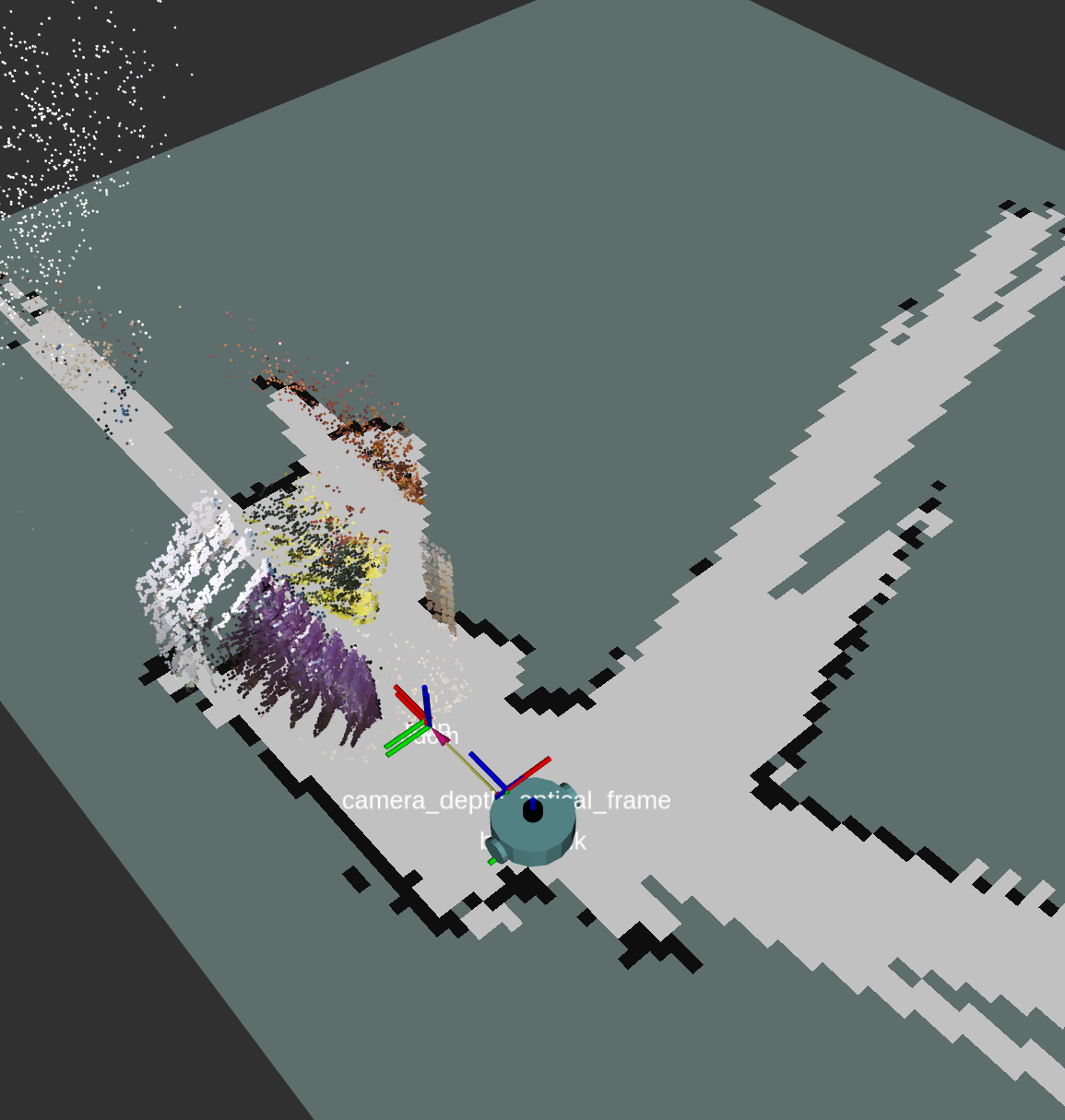

I followed the tutorial from ROS wiki but the resulting map is really strange:

It looks like the map follow the rebot during movement: i checked the TF tree and messages frames and all looks good (by the fact if I show depth cloud topic from camera it looks to have right frame). Furthermore the map generated by laser scan seems to not be affected by same problem.

Here the TF tree:

and RtabMap parameters:

* /rtabmap/rtabmap/Grid/FromDepth: false

* /rtabmap/rtabmap/Mem/RehearsalSimilarity: 0.45

* /rtabmap/rtabmap/RGBD/AngularUpdate: 0.01

* /rtabmap/rtabmap/RGBD/LinearUpdate: 0.01

* /rtabmap/rtabmap/RGBD/NeighborLinkRefining: true

* /rtabmap/rtabmap/RGBD/OptimizeFromGraphEnd: false

* /rtabmap/rtabmap/RGBD/ProximityBySpace: true

* /rtabmap/rtabmap/Reg/Force3DoF: true

* /rtabmap/rtabmap/Reg/Strategy: 1

* /rtabmap/rtabmap/Rtabmap/TimeThr: 700

* /rtabmap/rtabmap/Vis/InlierDistance: 0.1

* /rtabmap/rtabmap/Vis/MinInliers: 5

* /rtabmap/rtabmap/frame_id: base_link

* /rtabmap/rtabmap/map_frame_id: map

* /rtabmap/rtabmap/queue_size: 100

* /rtabmap/rtabmap/subscribe_depth: True

* /rtabmap/rtabmap/subscribe_scan: True

EDIT

Here the transformation from base_link to camera_rgb_optical_frame:

$ rosrun tf tf_echo base_link camera_rgb_optical_frame

At time 0.000

- Translation: [0.125, -0.045, 0.130]

- Rotation: in Quaternion [-0.500, 0.500, -0.500, 0.500]

in RPY (radian) [-1.571, -0.000, -1.571]

in RPY (degree) [-90.000, -0.000, -90.000]

At time 0.000

- Translation: [0.125, -0.045, 0.130]

- Rotation: in Quaternion [-0.500, 0.500, -0.500, 0.500]

in RPY (radian) [-1.571, -0.000, -1.571]

in RPY (degree) [-90.000, -0.000, -90.000]

[...]

The transformation never change accordling to the fact that camera is fixed to the robot base.

EDIT 2

The RtabMap DB can be found here.

You can open it with rtabmap-databaseViewer and see that while the 2D map by scan is quite good the 3D map is really bad!

EDIT 3

Here the topic published frequencies:

subscribed to [/scan]

average rate: 7.733

min: 0.060s max: 0.214s std dev: 0.05153s window: 7

subscribed to [/odom]

average rate: 30.019

min: 0.029s max: 0.039s std dev: 0.00152s window: 30

subscribed to [/camera/depth_registered/image_rectified]

average rate: 29.917

min: 0.019s max: 0.054s std dev: 0.00792s window: 29

subscribed to [/camera/rgb/image_rectified]

average rate: 30.136

min: 0.016s max: 0.055s std dev: 0.00766s window: 28

subscribed to [/camera/rgb/camera_info]

average rate: 29.909

min: 0.022s max: 0.046s std dev: 0.00628s window: 28

Laser scan is published at about 8 Hz where odometry and depth are published at 30 Hz. Since odometry and depth came from different sensors the publish rate is the same but they are not synchronized.

Any help will be appreciated!

Thanks Ale

Make sure to set global fixed frame in rviz to map.

Setting map as global fixed frame does not solve. Anyway I cannot understand how this can solve the problem... can you explain me your idea?

Having for example global frame in rviz set to base_link, MapCloud would not correctly show the 3d clouds of the map in right poses (which are in map frame). What is tf transform between base_link and the frame of the rgb image? (

rosrun tf tf_echo base_link camera_rgb_optical_frame)Edited to add requested info. Thanks!

Can you share the resulting database? (~/.ros/rtabmap.db)

Added RtabMap DB. It does not refer to the RViz picture added before but you can appreciate the quality difference between 2D and 3D map! Thanks again for your help!

Looking at the database, it seems that there is a poor synchronization between lidar, realsense and odom, because only when the robot is not moving, the 3D cloud matches the 2D lidar. What is the framerate of these topics: scan, rgb image, depth image, camera info, odom? (

$ rostopic hz)Added published frequencies too. You are rigth: my laser scan is published at 8 Hz, lower than odom and depth that are published at 30 Hz. Odometry and depth are also not synchronized since came from different sensors. Do you think this is the problem? How to solve in that case? Thanks for support.