Advice for SLAM with 3D lidar

Hello. The project is running Ubuntu 16.04, with Kinetic on an Intel PC.

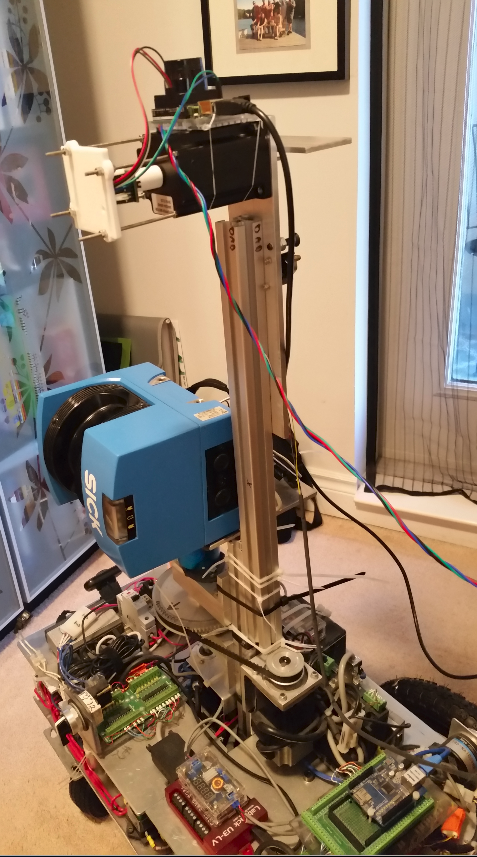

Some background: I designed and built a robot, and I am now at the SLAM phase. The turtlebot tutorials (https://learn.turtlebot.com/) are a great guide to SLAM for a person like me. Then I experienced a real kick in the pants: it turns out that the current offering of SLAM packages is geared towards horizontal (planar) lidar, and not vertical lidar like the one I built (see: https://answers.ros.org/question/3466...). Well, life is a learning experience, so I built a new horizontal 3D lidar system:

At present the new horizontal/planar lidar system is hanging onto the robot with zip ties, and needs to be mounted onto the robot:

Before I rip out the vertical 3D lidar system and replace it with the horizontal system, I need to decide the height at which to place the new horizontal lidar. I have a group of related questions that I am hoping will guide the placement:

- It is my understanding that gmapping is the recommended mapping engine for the Navigation stack (https://wiki.ros.org/navigation/MapBu...). Is gmapping still the best tool for creating a 2D map (given the 3D lidar)?

- I want to create a 2D map, but avoid obstacles using the full 3D lidar data (exactly like the video on the navigation stack home page: https://wiki.ros.org/navigation). Using the navigation stack with gmapping and amcl, will I be able to reach this objective?

- Can you please recommend package combinations that will allow the robot to build a 2D map, localize in the map, and navigate to points on the map while avoiding obstacles using the full horizontal 3D lidar data?

I am pre-emptively asking these questions because I don't want to rebuild my robot only to learn later that I positioned the lidar in a way that does not work optimally with the current SLAM offerings.

Thanks a ton for your time!

Mike

Edit #1 - I appreciate all suggestions for packages that prevent bumping into the top of the table as I navigate around the table legs; I also don't want to run over things lying on the floor. On closer analysis, it looks like the PR2 has a pitching lidar AND a fixed lidar, AND rgbd cameras. I am really hoping to get some guidance on package selection for building a map, localizing, and navigating to points on the map (a map of my apartment, so a small area). I would prefer to do it all via lidar, but if more "cheap" hardware will really help, I am very open to those suggestions. Any full working solutions are very, very appreciated. Thanks again.

I work with rgbd cameras, but some things might work in your case as well. There is http://wiki.ros.org/pointcloud_to_las... ; off the top of my head I cannot say whether it is this package or an additional one, but it is possible to recognize obstacles depending on their size. You then don't get a single slice of the pointcloud, but more of a projection that takes obstacle height into consideration, so you don't just "look under the desk". There are older packages that tried 3D/2D navigation, http://wiki.ros.org/humanoid_navigation and http://wiki.ros.org/3d_navigation - but these seem to have gone closed source or were abandoned. Looking at your lidar, I wonder whether you could muster the resources to get an rgbd camera and try rtabmap-slam; it is appearance based, so it needs a camera. I have found it not to be ideal ...
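To illustrate the difference between a single slice and a height-aware projection, here is a minimal Python sketch. It is not taken from any of the packages mentioned; the function name `project_obstacles`, the height band, and the 0.25 m grid resolution are assumptions chosen only for the example. Every point whose height falls inside the band is projected straight down onto a 2D grid cell, so a table top marks its cell as occupied even though nothing is there at lidar-slice height.

```python
import math
from typing import Iterable, Set, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres, robot base frame (assumed)
Cell = Tuple[int, int]              # (column, row) index in a 2D grid


def project_obstacles(points: Iterable[Point],
                      min_height: float,
                      max_height: float,
                      resolution: float) -> Set[Cell]:
    """Project 3D points onto 2D grid cells, keeping only points whose
    height lies in [min_height, max_height]."""
    occupied: Set[Cell] = set()
    for x, y, z in points:
        if min_height <= z <= max_height:
            occupied.add((math.floor(x / resolution),
                          math.floor(y / resolution)))
    return occupied


# Hypothetical sample cloud (values invented for illustration).
cloud = [
    (1.00, 0.00, 0.02),  # floor return: below the band, ignored
    (1.00, 0.25, 0.10),  # small object lying on the floor: kept
    (2.00, 0.00, 0.70),  # table top: kept, although nothing is at slice height
    (3.00, 0.00, 1.90),  # lamp well above the robot: ignored
]

occupied = project_obstacles(cloud, min_height=0.05, max_height=0.80,
                             resolution=0.25)
print(sorted(occupied))
```

The lower bound filters out floor returns and the upper bound ignores anything the robot can safely pass under; both would need tuning to the actual robot height and sensor noise.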

@Dragonslayer Thanks for the info. I am not a programmer, and am unfortunately isolated from anyone who knows ROS or robotics.... (but I have the internet...whooo hooo), so I really appreciate guiding comments like yours. Thanks for mentioning something new that I had no idea about. I will look into it. Having said that, I still have to do the lidar thing as I am so heavily invested in it. Thanks again!

I see. The really nice thing about rtabmap is that it gives you lots of outputs: obstacle map, floor map (projection map), octomap, etc. Maybe you could just use it to "convert" your pointcloud, and then use those topics/data types with other SLAM packages. It's not an elegant solution, but it can get you testing quicker than dealing with specialized packages that process the pointcloud.

Regarding Edit #1: It seems to me you are actually already there, provided you have odometry. Use the planar lidar with gmapping for mapping, then amcl for localization, and navigate with the navigation stack (move_base with its planners and the global costmap). Use costmap_2d (from the navigation stack) as the local costmap, and create an obstacle layer in it from the 3D lidar pointcloud for obstacle avoidance. There is a parameter for obstacle height (it is actually in this package, not the one I suspected in the earlier comment). This will give you cmd_vel output, which you have to pass to your motor drivers/controllers via a hardware interface. That's it.
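As a rough illustration of the obstacle layer setup described above, the sketch below lays out the relevant costmap_2d obstacle layer parameters as a Python dictionary and prints them. In a real setup these would normally live in a YAML file loaded by move_base. The parameter names (`observation_sources`, `topic`, `data_type`, `sensor_frame`, `marking`, `clearing`, `min_obstacle_height`, `max_obstacle_height`) are the ones documented for costmap_2d; the source name `lidar_cloud`, the topic `/lidar_points`, the frame `lidar_link`, and the height values are hypothetical and need to be replaced with your own.

```python
import json

# Sketch of obstacle layer parameters for the local costmap.
# Names marked "hypothetical" are placeholders, not values from this thread.
obstacle_layer = {
    "observation_sources": "lidar_cloud",   # hypothetical source name
    "lidar_cloud": {
        "topic": "/lidar_points",           # hypothetical PointCloud2 topic
        "data_type": "PointCloud2",         # full 3D cloud, not a LaserScan
        "sensor_frame": "lidar_link",       # hypothetical TF frame
        "marking": True,                    # points add obstacles
        "clearing": True,                   # rays clear free space
        "min_obstacle_height": 0.05,        # metres; assumed, skips floor noise
        "max_obstacle_height": 0.80,        # metres; assumed robot height
    },
}

# Print in a readable form; the same structure maps one-to-one onto YAML.
print(json.dumps(obstacle_layer, indent=2))
```

Setting `max_obstacle_height` to roughly the robot's height is what keeps it from driving under a table top it would hit, while `min_obstacle_height` decides how small an object on the floor still counts as an obstacle.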