In the first experiment, the Pi and the TX2 were connected via Wi-Fi. `rostopic hz /camera1/depth/color/points` on the SLAVE gives me 2 Hz, while it is 15 Hz on the MASTER.

I would not use a wireless link for something like this. It doesn't have enough bandwidth.

In the second experiment, I connected the two with an Ethernet cable and assigned IP addresses using `sudo ip ad add 10.2.2.250/24 dev eth0`. `rostopic hz /camera1/depth/color/points` on the SLAVE gives me 6 to 12 Hz, while it is 15 Hz on the MASTER.

A gigabit link provides a throughput of at most 125 MB/s (1 Gbit/s divided by 8 bits per byte). That is the theoretical limit of the link; more often than not, you won't reach it.

I'm not sure what the data rate of the points topic of a RealSense is, but if you get 6 to 12 Hz over a link that tops out at 125 MB/s, each message is probably on the order of 10 to 15 MB, which seems like it could be correct.
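To put rough numbers on this, here is a back-of-the-envelope sketch. It assumes an organized cloud with one point per pixel at 640×480 and 32 bytes per point (the padded layout of PCL's PointXYZRGB). The actual realsense-ros resolution and point layout may differ, and the driver can drop invalid points, so treat the result as an upper-end estimate. ROS message headers and TCP/IP framing are ignored.

```python
# Back-of-the-envelope PointCloud2 size and the highest publish rate a
# link can sustain for it. All inputs below are assumptions.

WIDTH, HEIGHT = 640, 480   # assumed depth resolution (one point per pixel)
POINT_STEP = 32            # assumed bytes per point (PCL PointXYZRGB layout)
LINK_BITS_PER_S = 1e9      # nominal gigabit Ethernet


def message_bytes(width: int, height: int, point_step: int) -> int:
    """Payload size of one organized PointCloud2 message."""
    return width * height * point_step


def max_rate_hz(msg_bytes: int, link_bits_per_s: float) -> float:
    """Messages per second that fit into the link, ignoring all overhead."""
    link_bytes_per_s = link_bits_per_s / 8  # 1 Gbit/s -> 125 MB/s
    return link_bytes_per_s / msg_bytes


size = message_bytes(WIDTH, HEIGHT, POINT_STEP)
print(f"message size: {size / 1e6:.2f} MB")
print(f"needed at 15 Hz: {15 * size / 1e6:.1f} MB/s")
print(f"max rate on 1 GbE: {max_rate_hz(size, LINK_BITS_PER_S):.1f} Hz")
```

Under these assumptions a message is about 9.8 MB, 15 Hz would need roughly 147 MB/s, and a gigabit link caps out at about 12.7 Hz even before any real-world losses.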

Running 2 cameras without any compression will not make things any faster.

If by "network overhead" you mean the fact that you're transferring the data over a network at all, then it's indeed true that this will create a bottleneck in your system.

Traditionally, though, the term "overhead" is used to mean "additional traffic not directly used to transmit the payload itself" (so: headers, signalling, etc.). I'm not sure that would be the main cause of the reduced data rates here. My first thought would be that you're simply saturating the available bandwidth.
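To gauge what "overhead" in this traditional sense actually costs, here is a sketch of the per-frame header arithmetic for full-size TCP segments on gigabit Ethernet. It assumes IPv4, TCP without options (TCPROS runs over TCP), a 1500-byte MTU and no VLAN tag, and it ignores ACK traffic and the small per-message TCPROS header.

```python
# Fraction of a gigabit link left for payload once per-frame protocol
# headers are accounted for. Assumes IPv4 + TCP without options and a
# 1500-byte MTU.

MTU = 1500               # bytes of IP packet per Ethernet frame
IPV4_HEADER = 20         # bytes, no IP options
TCP_HEADER = 20          # bytes, no TCP options (timestamps would add 12)
# Preamble + SFD (8), MAC header (14), FCS (4), inter-frame gap (12).
ETH_PER_FRAME = 8 + 14 + 4 + 12


def payload_efficiency(mtu: int = MTU) -> float:
    """Share of wire time that carries application payload."""
    payload = mtu - IPV4_HEADER - TCP_HEADER  # 1460 bytes at MTU 1500
    on_wire = mtu + ETH_PER_FRAME             # 1538 bytes at MTU 1500
    return payload / on_wire


raw_mb_s = 1e9 / 8 / 1e6  # 125 MB/s theoretical
goodput = raw_mb_s * payload_efficiency()
print(f"efficiency: {payload_efficiency():.1%}, goodput: {goodput:.1f} MB/s")
```

Under these assumptions, headers cost only about 5% of the link (roughly 118.7 MB/s of payload remains), so overhead alone would not explain dropping from 15 Hz to 6 Hz.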

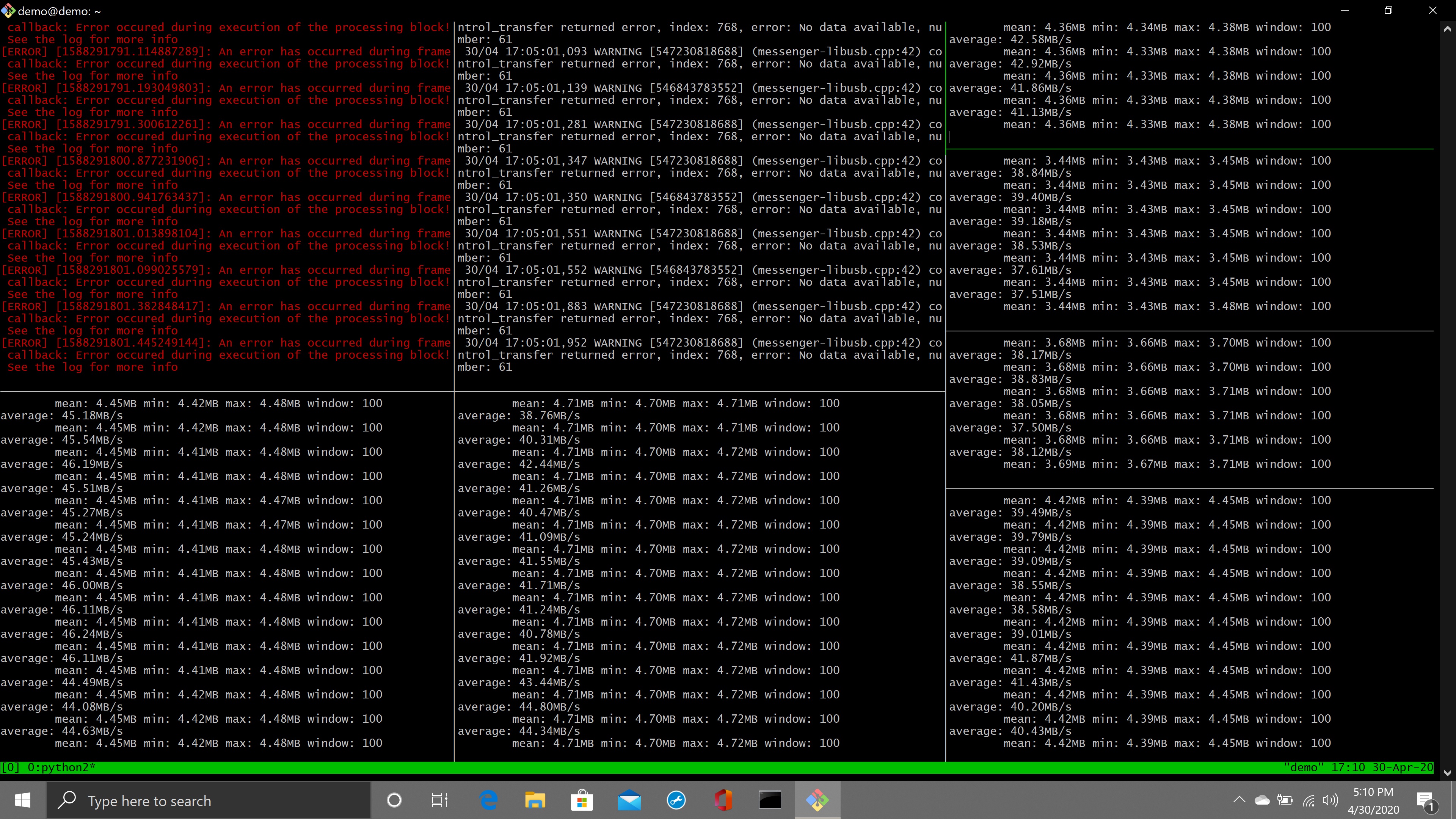

Perhaps try `rostopic bw /camera1/depth/color/points` on your master and post the results here.
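As far as I know, `rostopic bw` reports an average over a window of recent messages. The sketch below is a simplified model of that kind of measurement, not rostopic's actual implementation, to show how a bytes-per-second figure relates to message size and rate. The class name and the 9,830,400-byte message size (640×480 points at an assumed 32 bytes each) are illustrative assumptions.

```python
from collections import deque


class BandwidthMeter:
    """Hypothetical sliding-window bandwidth monitor (not rostopic's code)."""

    def __init__(self, window: int = 100):
        # Each entry is (arrival time in seconds, message size in bytes).
        self.samples = deque(maxlen=window)

    def add(self, arrival_s: float, size_bytes: int) -> None:
        self.samples.append((arrival_s, size_bytes))

    def bytes_per_s(self) -> float:
        if len(self.samples) < 2:
            return 0.0
        span = self.samples[-1][0] - self.samples[0][0]
        if span <= 0:
            return 0.0
        # The first message only marks the start of the interval, so its
        # bytes are not counted: N messages span N - 1 intervals.
        received = sum(size for _, size in list(self.samples)[1:])
        return received / span


# Usage: 31 messages of an assumed 9,830,400 bytes arriving at 15 Hz.
meter = BandwidthMeter()
for i in range(31):
    meter.add(i / 15, 9_830_400)
print(f"{meter.bytes_per_s() / 1e6:.1f} MB/s")
```

A reading well above 125 MB/s on the master would mean the topic cannot fit through a gigabit link at its full rate.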

What is the best setup for interfacing six RealSense cameras in ROS?

I'm not convinced your problem is necessarily with ROS: saturating a specific link with traffic will become a problem in many (all?) cases, no matter which communication infrastructure you use. How that saturation is handled would seem to be specific to whatever you're using.

Edit: something to look into may be compressed_depth_image_transport. It trades CPU time for network bandwidth. Instead of sending entire pointclouds, you would send depth images, compressing them before transmission and decompressing them on the receiving side.
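A rough sketch of that trade-off: the code below builds a made-up, smooth 16-bit depth image and compresses it with zlib's deflate. compressed_depth_image_transport (as far as I know) encodes 16-bit depth as PNG, which is also deflate-based, but this is not its code. Real depth images are noisier and will compress less well, and the 640×480 resolution is an assumption. Note that a raw depth image is only 2 bytes per pixel to begin with.

```python
import zlib
from array import array

WIDTH, HEIGHT = 640, 480  # assumed depth resolution


def synthetic_depth(width: int, height: int) -> bytes:
    """A smooth, made-up 16-bit depth image in millimetres, row-major."""
    values = [1000 + (x + y) // 4 for y in range(height) for x in range(width)]
    return array("H", values).tobytes()  # "H": unsigned 16-bit


raw = synthetic_depth(WIDTH, HEIGHT)
compressed = zlib.compress(raw, level=1)  # fast deflate: less CPU, less ratio
restored = zlib.decompress(compressed)    # done on the receiving side

print(f"raw: {len(raw)} B, compressed: {len(compressed)} B")
```

The compression step is lossless, so the receiver gets the exact depth values back; the price is CPU time on both ends, which matters on a Pi or TX2.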

For PointCloud2 there has been some discussion (such as Compressed PointCloud2? on ROS Discourse), and some people have implemented custom solutions (such as paplhjak/point_cloud_transport mentioned in ros-perception/perception_pcl#152).

You could see whether using those helps with the bandwidth problem.

It seems like you've already figured out what your problem is. If you believe it's due to network overhead, it would be good if you could explain how you arrived at that conclusion.

I have updated the question with more details on the experiment.

Streaming data from the RealSense over Wi-Fi is generally a bad idea. It is well known that the RealSense pumps out a huge amount of data over its USB 3 port (images, pointclouds and IMU data), and Wi-Fi is not well suited to that. As you showed, wired connections are more efficient. Make sure the cables are rated for 1 Gbps (Cat 5e or better). Even with one camera plugged in directly, it sometimes struggled to reach 15 fps, since the RealSense driver takes up one full core of my CPU.

Hi, do you mind explaining how the 150 MB/s bandwidth was derived? I can see various 40 MB/s topics in your terminal screenshot, but it is not clear which topics they refer to. I'm guessing it's mostly the PointCloud2? For your real use case, which topics would you need from each of the 6 cameras? The ROS network is set up such that data is not transferred over the network unless someone is subscribing to it; in this case, your `rostopic bw` command was subscribing. If you were to subscribe only to the image feed without the pointcloud, I'm guessing the network usage may not be so high. You can also configure the realsense-ros driver to not publish certain topics, which reduces CPU usage.

The 150 MB/s was obtained by adding the bandwidth of /depth_img + /infra1_img + /pointcloud.
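Scaling the per-camera figure reported here to the six-camera setup is simple arithmetic. The sketch assumes every camera publishes the same three topics at the same rate, and uses the 125 MB/s theoretical gigabit ceiling; the function names are illustrative.

```python
# Per-camera figure reported in this thread: /depth_img + /infra1_img + /pointcloud.
PER_CAMERA_MB_S = 150.0
# Theoretical ceiling of one gigabit Ethernet link (1e9 bits/s / 8).
GIGABIT_MB_S = 125.0


def aggregate_mb_s(cameras: int, per_camera_mb_s: float = PER_CAMERA_MB_S) -> float:
    """Total bandwidth, assuming every camera publishes the same topics."""
    return cameras * per_camera_mb_s


def link_load(total_mb_s: float, link_mb_s: float = GIGABIT_MB_S) -> float:
    """How many times over a single gigabit link the traffic would be."""
    return total_mb_s / link_mb_s


for n in (1, 6):
    total = aggregate_mb_s(n)
    print(f"{n} camera(s): {total:.0f} MB/s = {link_load(total):.1f}x one gigabit link")
```

Even a single camera at 150 MB/s already exceeds a gigabit link on its own; six cameras would need about 7.2 times its theoretical capacity, so dropping topics or compressing them matters more than the choice of middleware.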

Yes, I modified the driver to not publish the compressed, theora and compressedDepth image topics, or the infra2 image topic.

I am using two topics: the pointcloud and the infra1 image.