Rosbag API and TF transforming tools

I am currently writing some tools in Python to visually judge the quality of the odometry. For this I record a bag with odom topic as well as tf, lidar scans and wheel encoders.

Then I loop over the bag data, read all poses from odom and project the lidar scans to cartesian coordinates in the odom frame using the odom pose with the closest timestamp.

My odom is published at 100 Hz while the lidar scans are published at around 10 Hz so in worst case the time offset will be 5ms.

I then plot all lidar scans for all poses and see how well they overlap (Similar to setting decay_time of lidar scans to a very big value in Rviz)

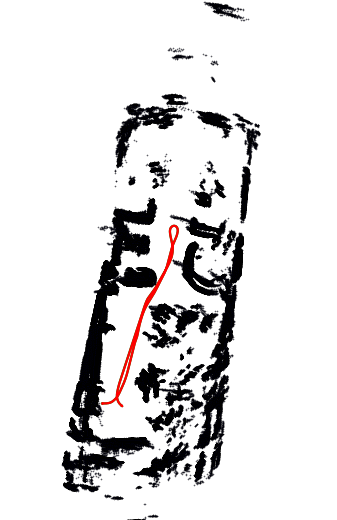

Here is the result:

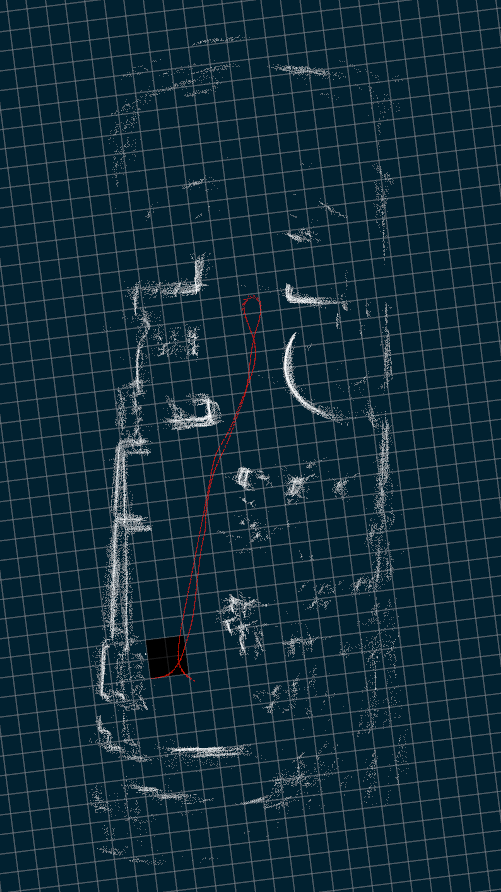

Compared to Rviz (with big decay time)

I noticed that my plot and the data displayed in Rviz (with a decay time covering all the length of the bag while doing rosbag play) looks slightly different.

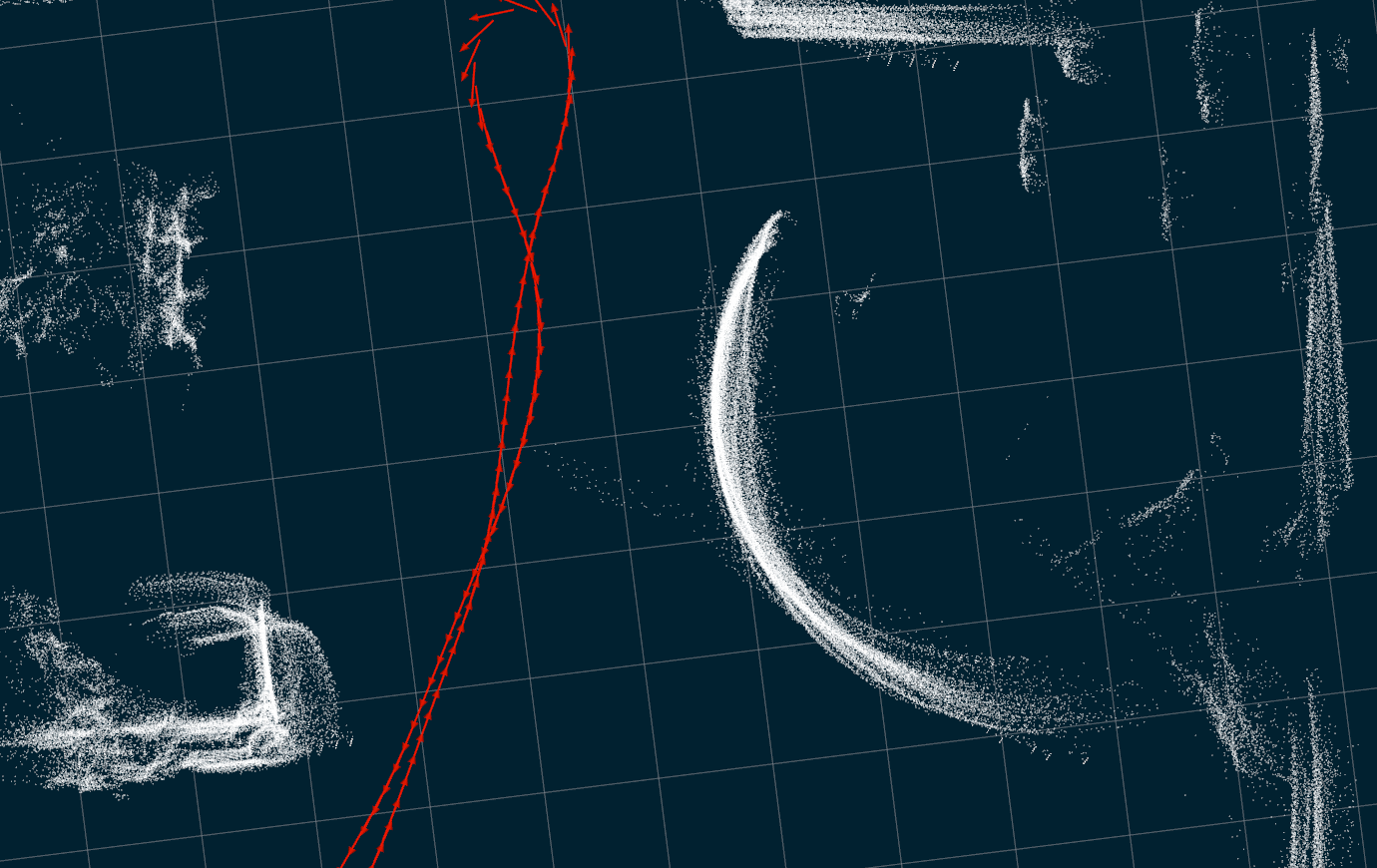

Especially when zooming on known features, for example the half circle:

My plot:

Rviz:

The difference becomes very clear.

When looking in the source code of Rviz I saw that it just transforms the lidar scan to a pointcloud and then uses TF to transform it to the correct frame. My question is how to do such a thing without having to play the bag, but only using the rosbag API, on Python? I suspect using the closest timestamp to be the source of error. Does TF interpolate to get the exact pose?