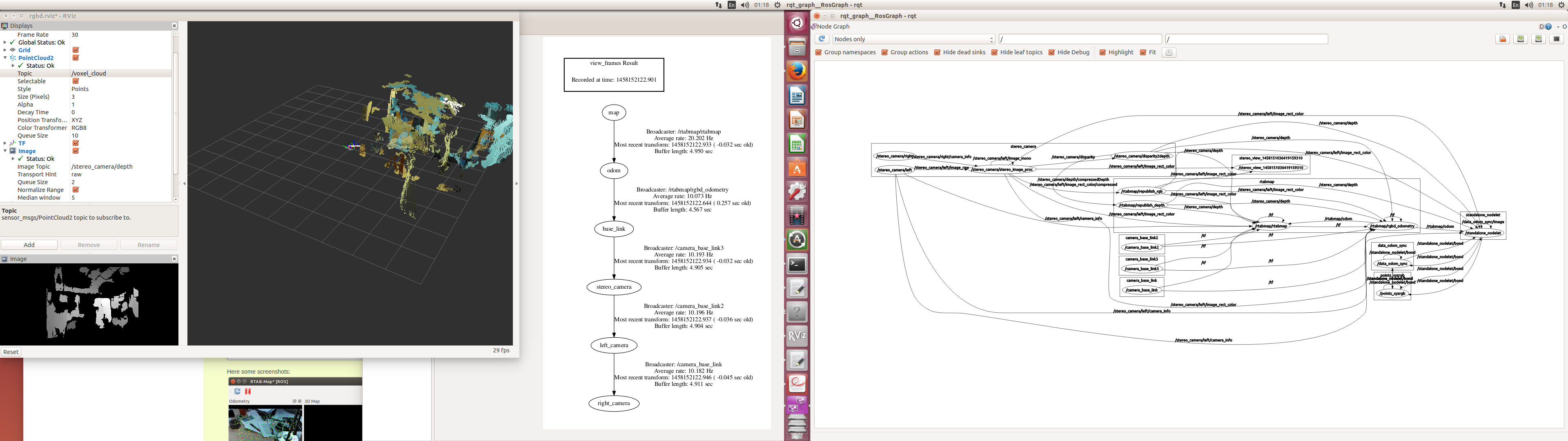

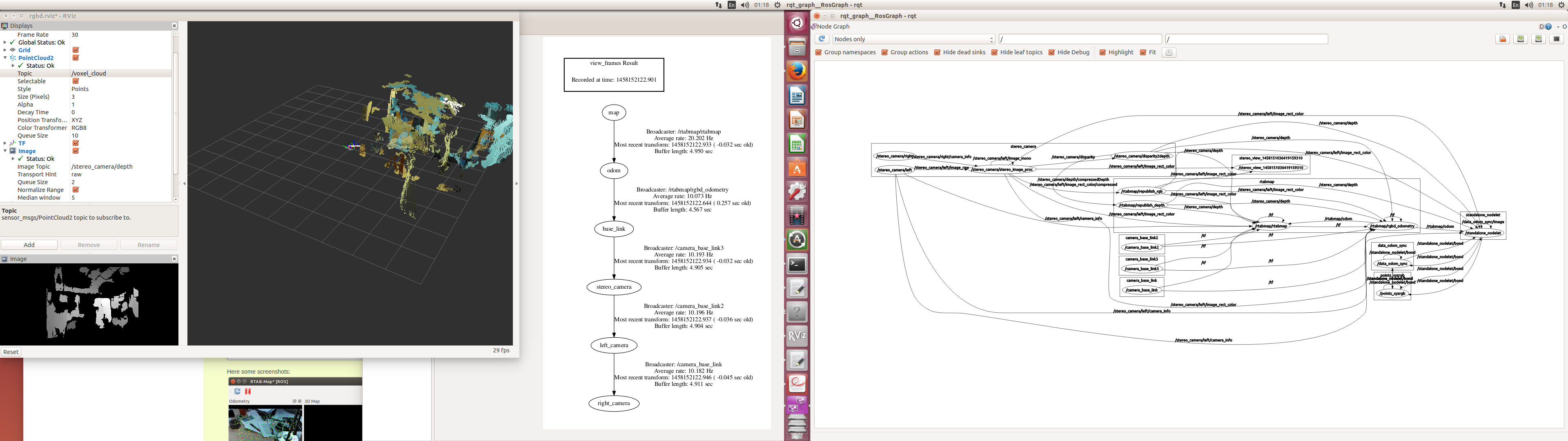

Check my result , please?

roslaunch rtabmap_ros rgbd_mapping.launch rgb_topic:=/stereo_camera/left/image_rect_color depth_registered_topic:=/stereo_camera/depth camera_info_topic:=/stereo_camera/left/camera_info frame_id:=base_link rtabmap_args:="--delete_db_on_start" estimation:=1 compressed:=true

Use 2 digital camera for stereo camera

My_stereo_camera.launch

<launch>

<group ns="/stereo_camera" >

<node name="left" pkg="usb_cam" type="usb_cam_node">

<param name="video_device" value="/dev/video1" />

<param name="image_width" value="640" />

<param name="image_height" value="480" />

<param name="camera_info_url" type="string" value="file:///home/greenout/catkin_ws/left.yaml" />

<param name="framerate" value="60" />

<param name="camera_frame_id" value="left_camera" />

</node>

<node name="right" pkg="usb_cam" type="usb_cam_node">

<param name="video_device" value="/dev/video0" />

<param name="image_width" value="640" />

<param name="image_height" value="480" />

<param name="camera_info_url" type="string" value="file:///home/greenout/catkin_ws/right.yaml" />

<param name="framerate" value="60" />

<param name="camera_frame_id" value="right_camera" />

</node>

</group>

<!-- Rotate the camera frame. -->

<arg name="pi/2" value="1.5707963267948966" />

<arg name="optical_rotate" value="0 0 0 -$(arg pi/2) 0 -$(arg pi/2)" />

<node pkg="tf" type="static_transform_publisher" name="camera_base_link"

args="0 0 0 0 0 0 left_camera right_camera 100" />

<node pkg="tf" type="static_transform_publisher" name="camera_base_link2"

args="0 0 0 0 0 0 stereo_camera left_camera 100" />

<node pkg="tf" type="static_transform_publisher" name="camera_base_link3"

args="$(arg optical_rotate) base_link stereo_camera 100" />

</launch>

stereo_image_proc for image rectification and disparity computation

<launch>

<!-- Run the ROS package stereo_image_proc for image rectification and disparity computation -->

<group ns="/stereo_camera" >

<node pkg="stereo_image_proc" type="stereo_image_proc" name="stereo_image_proc" required="true" output = "screen">

<param name="approximate_sync" value="true"/>

</node>

<node pkg="nodelet" type="nodelet" name="disparity2depth" args="standalone rtabmap_ros/disparity_to_depth"/>

</group>

</launch>

rgbd_mapping.launch

<launch>

<!-- Your RGB-D sensor should be already started with "depth_registration:=true".

Examples:

$ roslaunch freenect_launch freenect.launch depth_registration:=true

$ roslaunch openni2_launch openni2.launch depth_registration:=true -->

<!-- Choose visualization -->

<arg name="rviz" default="true" />

<arg name="rtabmapviz" default="false" />

<!-- Corresponding config files -->

<arg name="rtabmapviz_cfg" default="-d $(find rtabmap_ros)/launch/config/rgbd_gui.ini" />

<arg name="rviz_cfg" default="-d $(find rtabmap_ros)/launch/config/rgbd.rviz" />

<arg name="frame_id" default="camera_link"/> <!-- Fixed frame id, you may set "base_link" or "base_footprint" if they are published -->

<arg name="time_threshold" default="0"/> <!-- (ms) If not 0 ms, memory management is used to keep processing time on this fixed limit. -->

<arg name="optimize_from_last_node" default="false"/> <!-- Optimize the map from the last node. Should be true on multi-session mapping and when time threshold is set -->

<arg name="database_path" default="~/.ros/rtabmap.db"/>

<arg name="rtabmap_args" default=""/> <!-- delete_db_on_start, udebug -->

<arg name="launch_prefix" default=""/> <!-- for debugging purpose, it fills launch-prefix tag of the nodes -->

<arg name="rgb_topic" default="/camera/rgb/image_rect_color" />

<arg name="depth_registered_topic" default="/camera/depth_registered/image_raw" />

<arg name="camera_info_topic" default="/camera/rgb/camera_info" />

<arg name="compressed" default="false"/>

<arg name="convert_depth_to_mm" default="true"/>

<arg name="subscribe_scan" default="false"/> <!-- Assuming 2D scan if set, rtabmap will do 3DoF mapping instead of 6DoF -->

<arg name="scan_topic" default="/scan"/>

<arg name="visual_odometry" default="true"/> <!-- Generate visual odometry -->

<arg name="odom_topic" default="/odom"/> <!-- Odometry topic used if visual_odometry is false -->

<arg name="namespace" default="rtabmap"/>

<arg name="wait_for_transform" default="0.1"/>

<!-- Odometry parameters: -->

<arg ...

(more)

What do you mean when you say "I get good parameter on rqt_reconfig" ? Are you using rqt_reconfig to modify the default parameters?

Yes, result is good (/stereo_camera/points2 ) but when i launch rtabmap_ros stereo_mapping.launch ,i get voxel_cloud ,it have speckle yet why`? stereo_camera/points2 what to do

I still don't understand. Maybe a few pictures or screenshots would help.

can I use stereo Hand-Held Mapping of Rtabmap use to pointcloud2(stereo_camera/points2) from stereo_image_poc to make map ? if it not use what should I calibrate disparity map? (voxel_cloud)