Gmapping in Gazebo with Hokuyo: problems due to max range measurements

Hi all,

I am simulating gmapping in Gazebo with my Turtlebot. I have attached a Hokuyo laser to my (virtual) Turtlebot and want to use it for gmapping instead of the virtual Kinect laser.

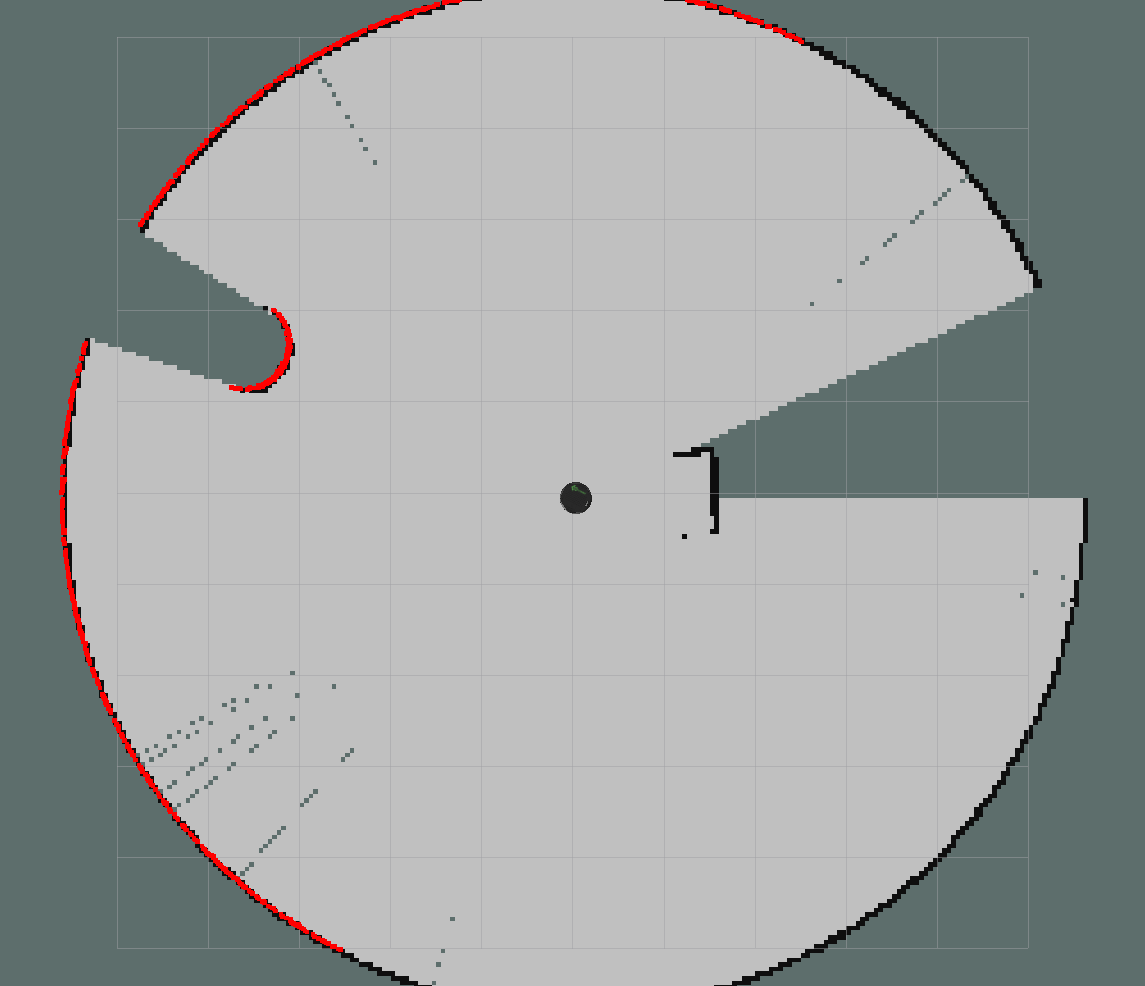

The problem is that the virtual Hokuyo returns measurements at its maximum range when a beam hits nothing. For example, if it were placed in an empty world, it would return a circle of measurement points at maximum range. Gmapping treats these measurements as detected obstacles. This completely messes up scan matching, because it tries to match every scan against a non-existent circular wall.
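To illustrate why an empty world produces a phantom circular wall: if every beam reports the same maximum range, every beam endpoint lies at that distance from the sensor. The sketch below assumes the scan is a plain list of ranges with `angle_min` and `angle_increment` as in a `sensor_msgs/LaserScan` message; `scan_to_points` is a hypothetical helper, and the 30 m maximum range is an arbitrary example value.

```python
import math
from typing import List, Tuple


def scan_to_points(ranges: List[float], angle_min: float,
                   angle_increment: float) -> List[Tuple[float, float]]:
    """Convert polar beam readings into (x, y) endpoints in the sensor frame."""
    points = []
    for i, r in enumerate(ranges):
        if not math.isfinite(r):
            # Beams with no return (inf) or invalid readings (nan) give no endpoint.
            continue
        theta = angle_min + i * angle_increment
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


# Simulated empty world: every beam reports the (assumed) maximum range.
RANGE_MAX = 30.0
n_beams = 8
empty_world_scan = [RANGE_MAX] * n_beams
pts = scan_to_points(empty_world_scan, -math.pi, 2 * math.pi / n_beams)

# Every endpoint is RANGE_MAX away from the sensor: a circular "wall".
for x, y in pts:
    print(f"{x:7.2f} {y:7.2f}  dist={math.hypot(x, y):.2f}")
```

A scan matcher that treats each of these endpoints as an obstacle will try to align consecutive scans against that circle.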

On my real robot, the Hokuyo returns only true measurements: if it cannot detect anything at a certain angle, it returns no data point for that angle (as if the distance were infinite).

How should I adapt my virtual Hokuyo so that it returns no data point when it has detected nothing within its maximum range?
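One possible workaround, sketched here under assumptions rather than as a known Gazebo or gmapping setting, is to post-process the simulated scan and replace every reading at (or beyond) the maximum range with +inf, which is how ROS REP 117 suggests representing "no object detected in range". The function name `mask_max_range` and the `tolerance` parameter are hypothetical.

```python
import math
from typing import List


def mask_max_range(ranges: List[float], range_max: float,
                   tolerance: float = 1e-3) -> List[float]:
    """Replace readings at (or beyond) the sensor's maximum range with +inf.

    A simulated beam that hits nothing typically reports exactly range_max;
    marking it +inf lets downstream consumers treat it as "no return"
    instead of as an obstacle at range_max. NaN and existing inf values
    are passed through unchanged.
    """
    masked = []
    for r in ranges:
        if math.isfinite(r) and r >= range_max - tolerance:
            masked.append(math.inf)
        else:
            masked.append(r)
    return masked


scan = [1.2, 30.0, 4.5, 29.9995, float("nan")]
print(mask_max_range(scan, range_max=30.0))  # [1.2, inf, 4.5, inf, nan]
```

In a ROS setup, this could run inside a small relay node that subscribes to the simulated scan, applies the function to `msg.ranges`, and republishes on another topic that gmapping's `scan` input is remapped to; how a given gmapping version handles +inf readings should be checked. The trade-off is that a real obstacle lying exactly at `range_max` is also discarded, which is usually acceptable because such readings are unreliable anyway.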

Alternatively, is there a way to adapt gmapping to cope with such measurements? I think that is less elegant, because the difference between simulation and real experiments would persist wherever else the measurements are used.

Please see the attached screenshots: rviz clearly shows the non-existent circular walls. For this screenshot I only rotated the robot in place; if I also drove it around, the scan-matching problems would become obvious. I also have these issues (though to a lesser extent) in a Gazebo indoor building world (the Willow Garage world).

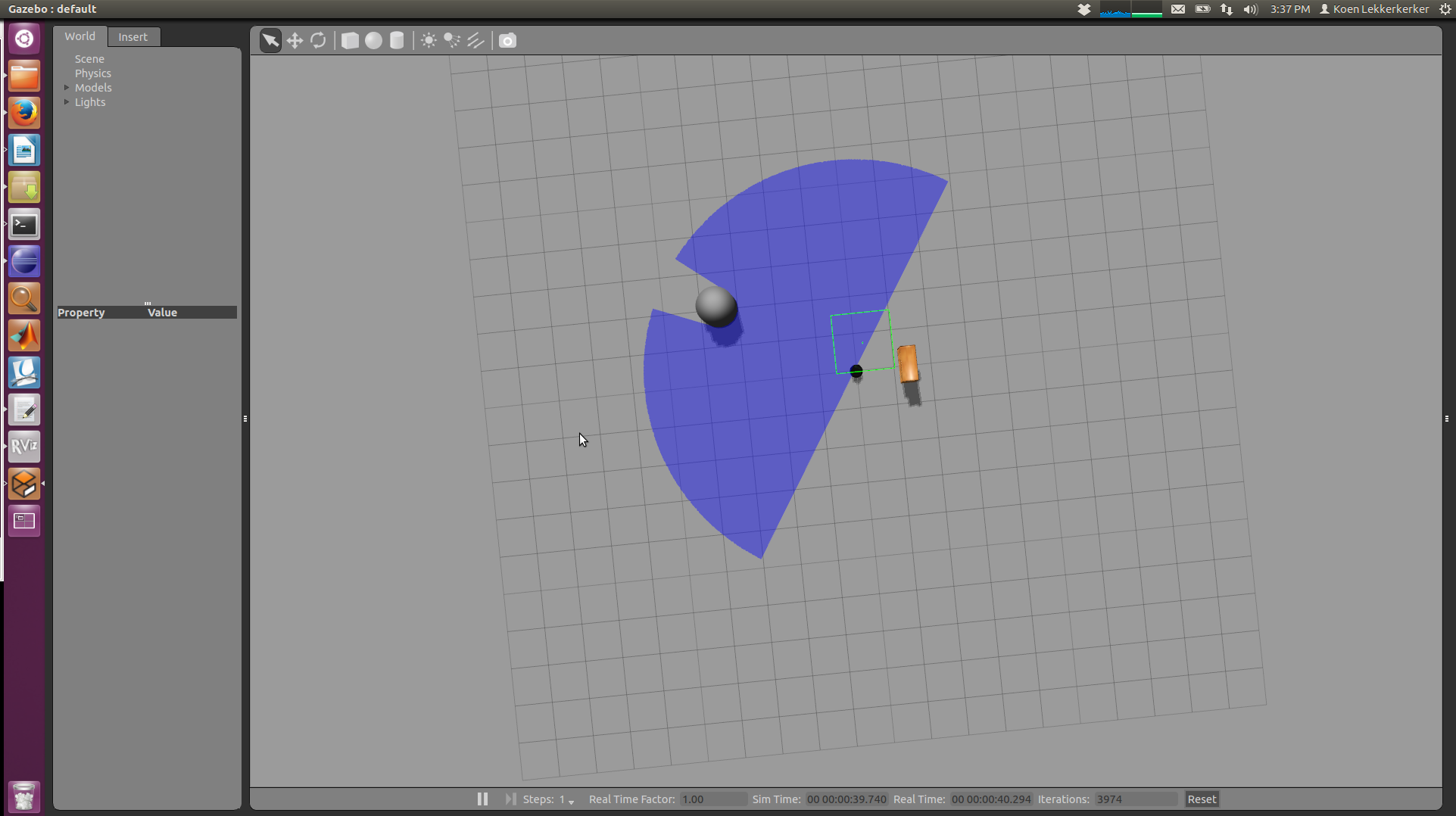

Gazebo world (with laser visualized):

rviz result:

Any help would be very much appreciated.

Regards, Koen

UPDATE:

A small addition to my comment on dornhege's answer: it is probably better to omit the maxRange setting, because skipped or missing measurements lead to the conclusion that certain space is empty even though the robot cannot actually see it. See, for example, the explored space outside the room in the screenshot. The robot could not have known this; it is based purely on the assumption that no measurement equals free space.

Does anybody know a good way to keep this "space is free if nothing is measured" behaviour without running into this glitch, i.e. to make it robust against skipped, missing or erroneous measurements?
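The slam_gmapping documentation describes a related mechanism: `maxUrange` is the maximum usable range (beams are cropped to it), and setting `maxUrange` below the sensor's real maximum range while `maxRange` is at or above it makes obstacle-free regions appear as free space. The sketch below illustrates that idea for a single beam; it is not gmapping's code. `beam_update` and `max_usable` are hypothetical names, and treating NaN or negative readings as "unknown" is an assumed design choice for robustness against erroneous measurements.

```python
import math
from typing import Tuple


def beam_update(r: float, max_usable: float) -> Tuple[float, bool]:
    """Return (free_length, endpoint_is_obstacle) for one beam.

    - NaN, -inf or non-positive reading (erroneous / too close):
      contribute nothing, so the cells stay unknown.
    - Finite hit within max_usable: free space up to r, obstacle at r.
    - No return (+inf) or reading beyond max_usable: free space only up
      to max_usable, and no obstacle is inserted.
    """
    if math.isnan(r) or r <= 0.0:
        return 0.0, False
    if r <= max_usable:
        return r, True
    return max_usable, False


scan = [2.0, math.inf, float("nan"), 12.0]
for r in scan:
    print(r, beam_update(r, max_usable=8.0))
```

Capping the cleared length at `max_usable` limits how far a missing return can "see" into unobserved space, which reduces (but does not eliminate) free space appearing behind walls when individual beams drop out.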