Relative tf between two cameras looking at the same AR marker

Hi everyone!

I was thinking about using tf lookup to obtain the relative transform between two cameras looking at the same AR marker (as seen in ar_pose). Looking at tf/FAQ, though, I stumbled upon this comment:

> The frames are published incorrectly. tf expects the frames to form a tree. This means that each frame can only have one parent. If you publish for example both the transforms: from "A (parent) to B (child)" and from "C (parent) to B (child)". This means that B has two parents: A and C. tf cannot deal with this.

Is there a standard alternative to obtain the transform between A and C?

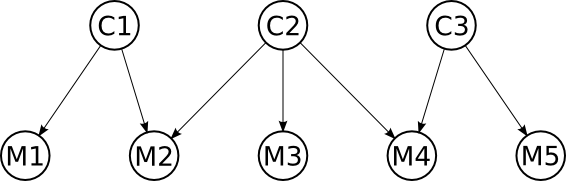

EDIT: What I am looking for is a way to daisy-chain transforms between Kinects in order to place multiple point clouds in a single reference frame. See the "tf graph" in the image above, for instance:

Nodes represent frames and arrows represent transforms. Suppose I want all my point clouds in marker M3's frame (visible only to camera C2). I would have to figure out the transform from C1 and C3 to C2, taking advantage of the common markers visible to them (i.e. M2 and M4).

What I got from tf/FAQ is that I cannot look up these transforms natively, so I wanted to know if there is a standard way to do it. Otherwise, I will just have to request the relevant transforms (e.g. C2->M4 and C3->M4), invert them as appropriate (e.g. M4->C2) and compose them into a single transform through multiplication (e.g. C3->M4->C2).
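
The manual composition described above can be sketched roughly as follows. This is only an illustration in plain Python, not a tf API: the helper names (`make_transform`, `compose`, `invert`, `apply_transform`) and the numeric poses are hypothetical, and it assumes that `T_A_B` denotes the pose of frame B expressed in frame A, i.e. the 4x4 matrix that maps points from B coordinates into A coordinates.

```python
import math

def make_transform(yaw, tx, ty, tz):
    """Build a 4x4 homogeneous transform: rotation about z by yaw plus a translation."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]

def compose(a, b):
    """Matrix product a * b: apply b first, then a."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def invert(t):
    """Invert a rigid transform: rotation R^T, translation -R^T * p."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [-sum(r_t[i][k] * t[k][3] for k in range(3)) for i in range(3)]
    return [r_t[i] + [p[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

def apply_transform(t, point):
    """Map a 3D point through a 4x4 homogeneous transform."""
    v = [point[0], point[1], point[2], 1.0]
    return tuple(sum(t[i][k] * v[k] for k in range(4)) for i in range(3))

# Hypothetical marker poses, standing in for what each camera's detector reports.
T_C2_M4 = make_transform(math.pi / 2, 1.0, 0.0, 2.0)    # M4 as seen from C2
T_C3_M4 = make_transform(-math.pi / 4, -0.5, 0.2, 1.5)  # M4 as seen from C3

# Chain C2 <- M4 <- C3: invert the C3 observation, then compose.
T_C2_C3 = compose(T_C2_M4, invert(T_C3_M4))

# Sanity check: the marker origin seen from C3 should land on the marker
# origin seen from C2 once it is mapped through T_C2_C3.
marker_in_c3 = apply_transform(T_C3_M4, (0.0, 0.0, 0.0))
marker_in_c2 = apply_transform(T_C2_C3, marker_in_c3)
print(marker_in_c2)
```

The same pattern would extend along the chain: a C1-to-C2 transform could be built from the two observations of M2, and a cloud from C1 or C3 could then be moved into M3's frame by composing with the inverse of C2's observation of M3.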

Hello georgebrindeiro, how is your work going? Did you manage to solve the problem of merging the estimates? I am working on the same problem and I'm very interested in your results. Could you please update this post?