IMU + Odometry Robot Localization Orientation Issue

Hello, I am trying to use the robot_localization package to fuse IMU and wheel encoder odometry, such that the x and y velocities are taken from the odometry data and the heading is taken from the IMU.

However, the fused estimate does not seem to respect the heading from the IMU: the EKF output travels along whatever heading the odometry gives, while its orientation still points in the correct IMU direction.

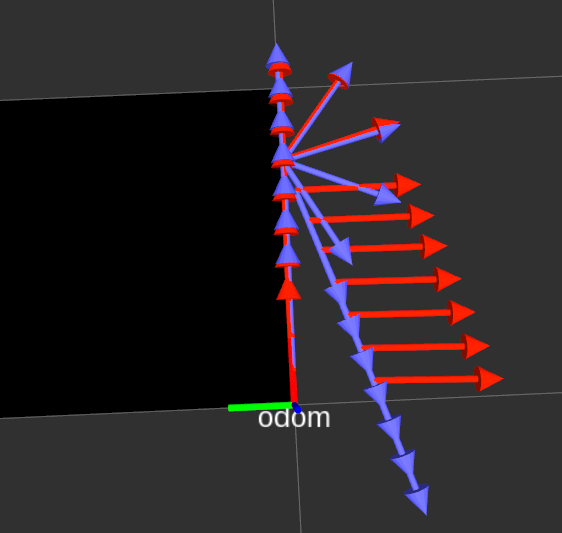

For example, in the picture above, my rover drives forward, turns right 90 degrees, and then drives forward again. Blue is the wheel odometry (its yaw estimate is very far off) and red is the EKF output.

I have also attached a bag file of this test.

Here are my EKF params:

```yaml
map_frame: map
odom_frame: odom
base_link_frame: base_footprint
world_frame: odom

odom0: odometry/wheel
odom0_config: [false, false, false,
               false, false, false,
               true,  true,  false,
               false, false, false,
               false, false, false]
odom0_queue_size: 10
odom0_nodelay: true
odom0_differential: false
odom0_relative: false

imu0: /imu/data
imu0_config: [false, false, false,
              false, false, true,
              false, false, true,
              false, false, true,
              false, false, true]
imu0_nodelay: false
imu0_differential: false
imu0_relative: false
imu0_queue_size: 10
imu0_remove_gravitational_acceleration: true
```
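Since each `*_config` entry is a flat list of 15 booleans, it is easy to misread which flag maps to which state variable. Below is a small sketch that labels them. `fused_variables` and `STATE_ORDER` are hypothetical helpers (not part of robot_localization); the ordering assumed is the one robot_localization documents: x, y, z, roll, pitch, yaw, then their velocities, then linear accelerations.

```python
# Hypothetical helper, not part of robot_localization: labels which state
# variables a 15-element sensor config vector enables, assuming the
# package's documented ordering of the state vector.
STATE_ORDER = [
    "x", "y", "z",
    "roll", "pitch", "yaw",
    "vx", "vy", "vz",
    "vroll", "vpitch", "vyaw",
    "ax", "ay", "az",
]


def fused_variables(config):
    """Return the names of the state variables set to True in `config`."""
    if len(config) != len(STATE_ORDER):
        raise ValueError(f"expected {len(STATE_ORDER)} flags, got {len(config)}")
    return [name for name, enabled in zip(STATE_ORDER, config) if enabled]


# The two vectors from the params above, copied as Python booleans.
odom0_config = [False, False, False,
                False, False, False,
                True,  True,  False,
                False, False, False,
                False, False, False]

imu0_config = [False, False, False,
               False, False, True,
               False, False, True,
               False, False, True,
               False, False, True]

print("odom0 fuses:", fused_variables(odom0_config))
print("imu0 fuses:", fused_variables(imu0_config))
```

Running this shows that odom0 contributes only `vx` and `vy`, while imu0 enables `yaw`, `vz`, `vyaw` and `az`. That is handy for checking a config against intent; for instance, a `sensor_msgs/Imu` message has no linear-velocity field, so the `vz` flag on the IMU has nothing to read.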

Thoughts?

Why do you filter out yaw' in the odom0_config? Maybe you can also show an image of the fused odom output?

Here is a link to a walkthrough on configuring the EKF:

http://docs.ros.org/melodic/api/robot...

The fused odom output is the red arrows; that is the fusion of both the odometry and the IMU.

The reason I took out the yaw is that the wheel odometry is very inaccurate when turning, so I wanted the yaw to be fused only from the IMU.
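For reference, here is a simplified dead-reckoning sketch (not robot_localization's actual EKF) of what fusing body-frame velocities from the wheels with a heading from the IMU should look like: each step rotates the body-frame `(vx, vy)` into the world frame by the current yaw before integrating. The speeds, timestep, and instantaneous 90-degree right turn are made-up values meant to mimic the test drive described above; the sign convention assumed is ROS REP 103 (x forward, y left, yaw counter-clockwise positive, so a right turn is negative yaw).

```python
import math


def integrate_2d(samples, dt):
    """Dead-reckon a 2D position from (vx, vy, yaw) samples.

    vx, vy are body-frame velocities (e.g. from wheel odometry) and yaw is
    the world-frame heading (e.g. from the IMU). Each step rotates the
    body-frame velocity into the world frame, then integrates it over dt.
    """
    x, y = 0.0, 0.0
    path = [(x, y)]
    for vx, vy, yaw in samples:
        c, s = math.cos(yaw), math.sin(yaw)
        x += (vx * c - vy * s) * dt
        y += (vx * s + vy * c) * dt
        path.append((x, y))
    return path


# Made-up drive: 1 m/s forward for 2 s, an instantaneous right turn of
# 90 degrees (yaw = -pi/2 under REP 103), then 2 s forward again.
dt = 0.1
straight = [(1.0, 0.0, 0.0)] * 20
after_turn = [(1.0, 0.0, -math.pi / 2)] * 20
path = integrate_2d(straight + after_turn, dt)

end_x, end_y = path[-1]
print(f"end: x={end_x:.2f} m, y={end_y:.2f} m")
```

In this idealized case the path bends exactly where the IMU yaw changes, ending near (2, -2). Comparing the real EKF output against this kind of expectation can help narrow down whether the filter is receiving the inputs you intend, for example whether the wheel odometry's twist is really expressed in the robot's body frame (the `child_frame_id` of the `nav_msgs/Odometry` message), which is the frame in which robot_localization interprets velocity data.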

I have already tried using the params from there; however, I still have the same issue.