Sensor fusion of IMU and ASUS for RTAB-MAP with robot_localization

Hi,

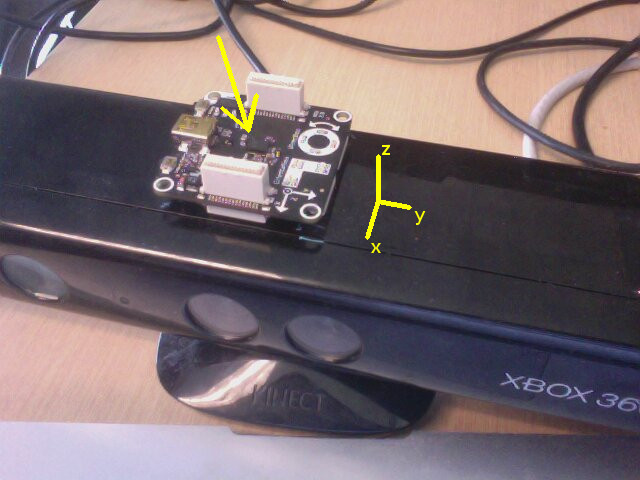

@matlabbe, I have an ASUS Xtion Pro Live and a VN-100T IMU, mounted as shown in the picture:

I tried to fuse the data from these two sensors similar to your launch file here with robot_localization. The difference in my launch file is that I added the tf relationship as:

<node pkg="tf" type="static_transform_publisher" name="base_link_to_camera_link_rgb" args="0.0 0 0.0 0.0 0 0.0 base_link camera_link 20" />

<node pkg="tf" type="static_transform_publisher" name="base_link_to_imu_link" args="0.0 0.0 0.5 0 0 0 base_link imu 20" />
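For readers checking the two transforms above: the 9-argument form of `static_transform_publisher` takes `x y z yaw pitch roll frame_id child_frame_id period_in_ms`. The snippet below is only a small sketch that splits such an argument string into named fields so the values are easier to read. The helper name `parse_static_transform_args` and the `StaticTransform` tuple are made up for illustration and are not part of tf.

```python
from collections import namedtuple

# Hypothetical container, not part of the tf package.
StaticTransform = namedtuple(
    "StaticTransform", "x y z yaw pitch roll parent child period_ms"
)


def parse_static_transform_args(args):
    """Split a 9-field static_transform_publisher args string into named fields.

    Assumes the Euler form: x y z yaw pitch roll frame_id child_frame_id period_ms.
    Translations are in metres, angles in radians.
    """
    parts = args.split()
    if len(parts) != 9:
        raise ValueError("expected 9 fields, got %d" % len(parts))
    x, y, z, yaw, pitch, roll = (float(p) for p in parts[:6])
    return StaticTransform(x, y, z, yaw, pitch, roll,
                           parts[6], parts[7], float(parts[8]))


# The IMU transform from the launch file above.
imu_tf = parse_static_transform_args("0.0 0.0 0.5 0 0 0 base_link imu 20")
print(imu_tf)
```

Read this way, the IMU transform is a pure 0.5 m offset along Z with no rotation, so it states that the `imu` frame axes are aligned with `base_link`.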

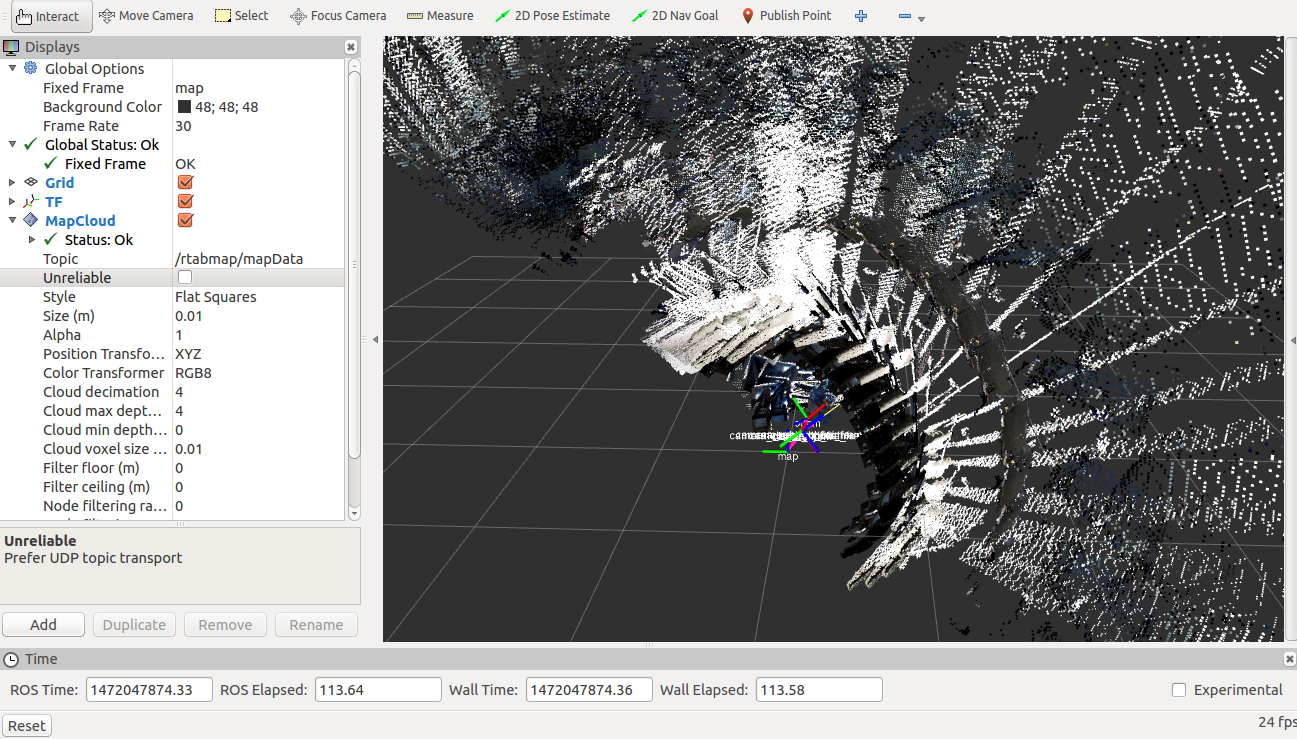

After I launch it, I get the following result for the map cloud. In this picture I am not moving the sensors at all, yet the view starts to rotate and the information is not correct.

Also, when I move the sensors, the coordinate frames do not move correctly. I read "Preparing your sensor data", but I am not sure whether something is wrong with the placement of the IMU.

Do you think there is a problem with the usage of the tf package? I think the relationship is correct, but I don't know how to remove this drift (or motion) when I don't move the sensors at all.

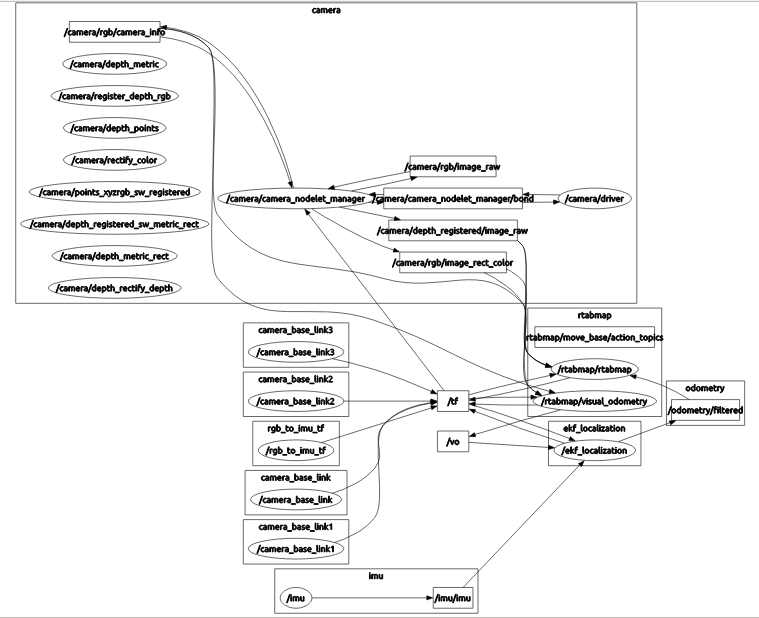

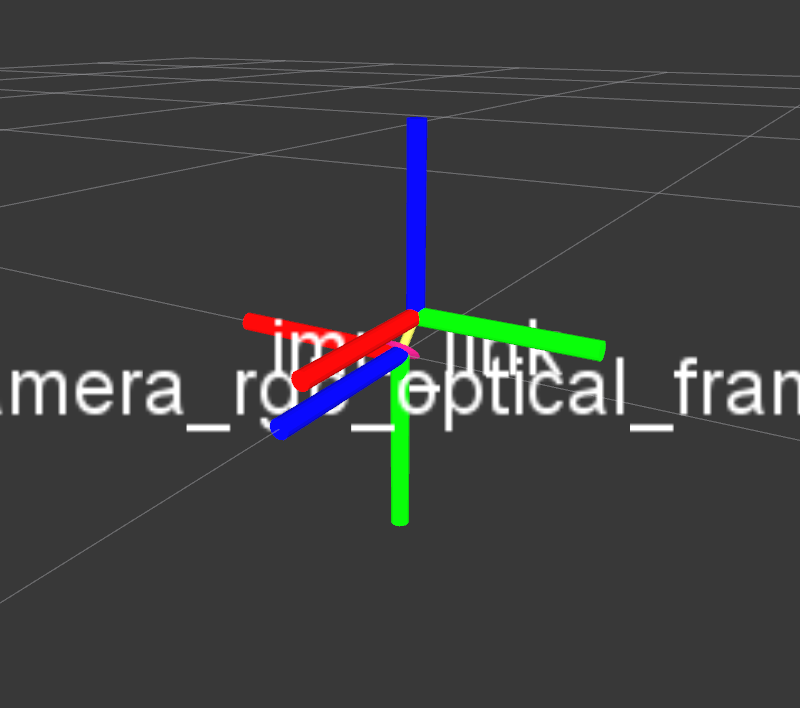

The rqt_graph and tf_frames are like the following:

I also read these two questions (1) and (2), but I didn't understand how to solve it. Thank you.

Edit:

When I do rostopic echo /imu/imu, I get the following output:

    ---
    header:
      seq: 1912
      stamp:
        secs: 1472737002
        nsecs: 470006463
      frame_id: imu
    orientation:
      x: -0.989729464054
      y: -0.141599491239
      z: 0.0163822248578
      w: 0.0108099048957
    orientation_covariance: [0.0005, 0.0, 0.0, 0.0, 0.0005, 0.0, 0.0, 0.0, 0.0005]
    angular_velocity:
      x: 0.0242815092206
      y: -0.000830390374176
      z: -0.0146896829829
    angular_velocity_covariance: [0.00025, 0.0, 0.0, 0.0, 0.00025, 0.0, 0.0, 0.0, 0.00025]
    linear_acceleration:
      x: 0.298014938831
      y: 0.262553811073
      z: 9.89032745361
    linear_acceleration_covariance: [0.1, 0.0, 0.0, 0.0, 0.1, 0.0, 0.0, 0.0, 0.1]
    ---
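As a quick sanity check on the message above, the quaternion can be converted to roll, pitch and yaw, and the magnitude of the linear acceleration compared with gravity. This is only a sketch using the standard ZYX (yaw-pitch-roll) conversion. The function name `quaternion_to_rpy` is made up here, and the numbers are copied from the echoed message.

```python
import math


def quaternion_to_rpy(x, y, z, w):
    """Convert a unit quaternion to (roll, pitch, yaw) in radians, ZYX convention."""
    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    # Clamp to guard against values slightly outside [-1, 1] from rounding.
    sin_pitch = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(sin_pitch)
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    return roll, pitch, yaw


# Values copied from the /imu/imu message above.
roll, pitch, yaw = quaternion_to_rpy(
    -0.989729464054, -0.141599491239, 0.0163822248578, 0.0108099048957
)
accel = (0.298014938831, 0.262553811073, 9.89032745361)
accel_norm = math.sqrt(sum(a * a for a in accel))

print("roll  [deg]:", math.degrees(roll))
print("pitch [deg]:", math.degrees(pitch))
print("yaw   [deg]:", math.degrees(yaw))
print("|accel| [m/s^2]:", accel_norm)
```

With these numbers the roll comes out close to 180 degrees while the acceleration reads roughly +g on Z. One possible reading, which is an interpretation and not something the message itself proves, is that the orientation is expressed against a Z-down reference while the acceleration is Z-up.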

@Tom Moore, would you please give me some hints on my question? Thank you.

Can you please post your launch file and sample messages for all inputs? Which IMU driver are you using? Is it reporting data in the ENU frame? It looks like it must be, given your linear acceleration, but I want to be sure.

@Tom Moore For the IMU I am using this driver on Ubuntu 14.04 with ROS Indigo. As in the picture in the question, I put the IMU on top of the vision sensor. I tried to use the ENU frame, but the IMU's positive directions follow NED.

If I use NED, does that cause a problem?

I put the launch files here. I will add some plots to the question for the outputs.

@MahsaP

I am using the same IMU and driver as you, and with unedited code my linear accelerations are roughly the negatives of yours. Did you have to modify the driver code so that the z acceleration is +g in the orientation shown in your picture? This is not a correction, just me trying to understand.

@matthewlefort I just modified the code to add the covariance matrix.

If it's in the NED frame, then yes, that is definitely a problem.

r_l assumes ROS standards (see REP-103), and an IMU that measures motion around the Z axis in the wrong direction is not going to work.
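To make the axis mismatch concrete: REP-103 body frames are X forward, Y left, Z up, whereas an NED-style body frame is X forward, Y right, Z down. The two differ by a 180 degree rotation about X, so a body-frame vector such as angular velocity or linear acceleration converts by negating Y and Z. The snippet below is a minimal sketch of that flip only. The function name `frd_to_flu` is made up, and it assumes the driver really reports vectors in a forward-right-down body frame, which should be checked against the driver first. It does not convert the orientation quaternion, which also depends on the world reference frame (NED versus ENU).

```python
def frd_to_flu(vec):
    """Rotate a body-frame vector by 180 degrees about X.

    Maps forward-right-down (NED-style body axes) to forward-left-up
    (REP-103 body axes). Applying it twice returns the original vector.
    """
    x, y, z = vec
    return (x, -y, -z)


# Illustrative input only: a yaw-rate reading that is negative about a
# Z-down axis becomes positive about a Z-up axis.
gyro_frd = (0.0, 0.0, -0.5)
gyro_flu = frd_to_flu(gyro_frd)
print(gyro_flu)
```

The sign change on Z is the part that matters for the comment above: a turn that the filter should see as positive rotation about Z would otherwise be reported as negative.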