unable to find the correct transform between odom and base_link for a mobile robot

Hi, This must be a simple problem, but I somehow messed up. I am trying to move pioneer P2DX in Gazebo from say (-5,0) to (5,0). The robot is fitted with two lidars facing 180 deg apart, at two different ends. The important part of the model is below.

<model name="pioneer2dx_with_lidars">

<include>

<uri>model://pioneer2dx</uri>

<pose>0 0 0 0 0 0</pose>

</include>

<include>

<uri>model://hokuyo_a</uri>

<name>hokuyo_1</name>

<pose>0.2 0 0.30 0 0 0</pose>

</include>

<include>

<uri>model://hokuyo_b</uri>

<name>hokuyo_2</name>

<pose>-0.2 0 0.30 0 0 3.14159</pose>

</include>

<joint name="joint1" type="fixed">

<child>hokuyo_1::link</child>

<parent>pioneer2dx::chassis</parent>

</joint>

<joint name="joint2" type="fixed">

<child>hokuyo_2::link</child>

<parent>pioneer2dx::chassis</parent>

</joint>

</model>

Then, I created a world with other objects along with this robot model. The initial position of the robot was set at (-5,0). Then, I use this package for merging two scans . map was not available initially. So, I wrote a node with br.sendTransform(

tf::StampedTransform(

tf::Transform(q1, tf::vec(x, y, msg->pose.pose.position.z)),

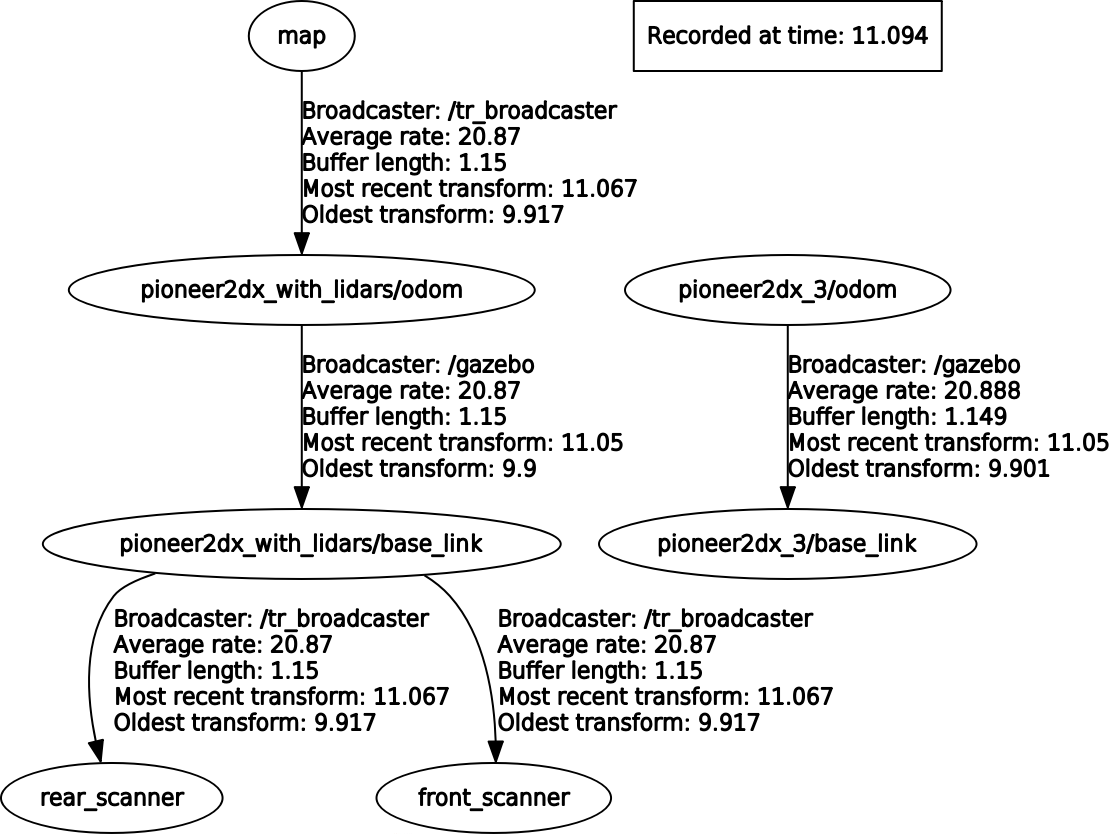

ros::Time::now(), "map", "pioneer2dx_with_lidars/odom")); as well as sendTransform between base_link and the two scanners. The tf tree is given as

(left part is important here).

(left part is important here).

The tf in the rviz looks like  The problem is that although odometry is good but positions of

The problem is that although odometry is good but positions of parents in tftree, show incorrect value. The base_link is separated from odom by huge distance and base_link position is showing to be close to -10

just after starting whereas, it should be close to -5. The merged pointcloudis shown in sky blue. Both seems to move along with odom and base_link.

Any help is highly appreciated.

Hi,

I highly recommend you to use the

robot_state_publisherpackage so you can get rid of tf problems.When you load your model this package will use the joints in the URDF/Xacro to compute and publish all the TF of your robot (Including the lasers). To link the

base_footprintframe withodomframe you can implement a node or a Gazebo model plugin.From there you can have AMCL for localization and Navigation stack to move the platform with obstacle avoidance.

All my models are in SDF. I didn't find any easy way to convert SDF-> URDF? Can you suggest any?

Hi Spartan_007,

If you are using ROS and Gazebo I recommend you to start with Xacrothat will help you mantaining an URDF. The general flow is Xacro -> URDF -> SDF, you can learn more [here].(http://gazebosim.org/tutorials...

ok, thanks Weasfas. I'll try and update here.

Hello, I am having some trouble, when I used the package above mentioned in the question and I am using a robot_state_publisher. Is there any solution for this?

@Nagarjun, can you post your problem in a new question so you can be more specific? However, bear in mind that the

robot_state_publisherneeds the joint states from thejoint_state_publishernode, so it is advisable to have both launched.