In robot_localization, why does differential mode not use static transforms?

Hello, My setup is ROS Kinetic, using the kinetic level of robot_localization on Ubuntu 16.04 I am running into a problem using the differential parameter in the robot localization package. Below is my launch file and a YAML file with the parameters. I am using the differential parameter for my visual odometry which is outputting a PoseWithCovarianceStamped (I know this is unusual output for visual odometry but it is what I have) which I pass as pose0 to the ekf filter I am using (ekf_se_odom). I want the differential data instead so if visual odometry resets it will not start providing data based on an origin centered at its location when it re-initializes.

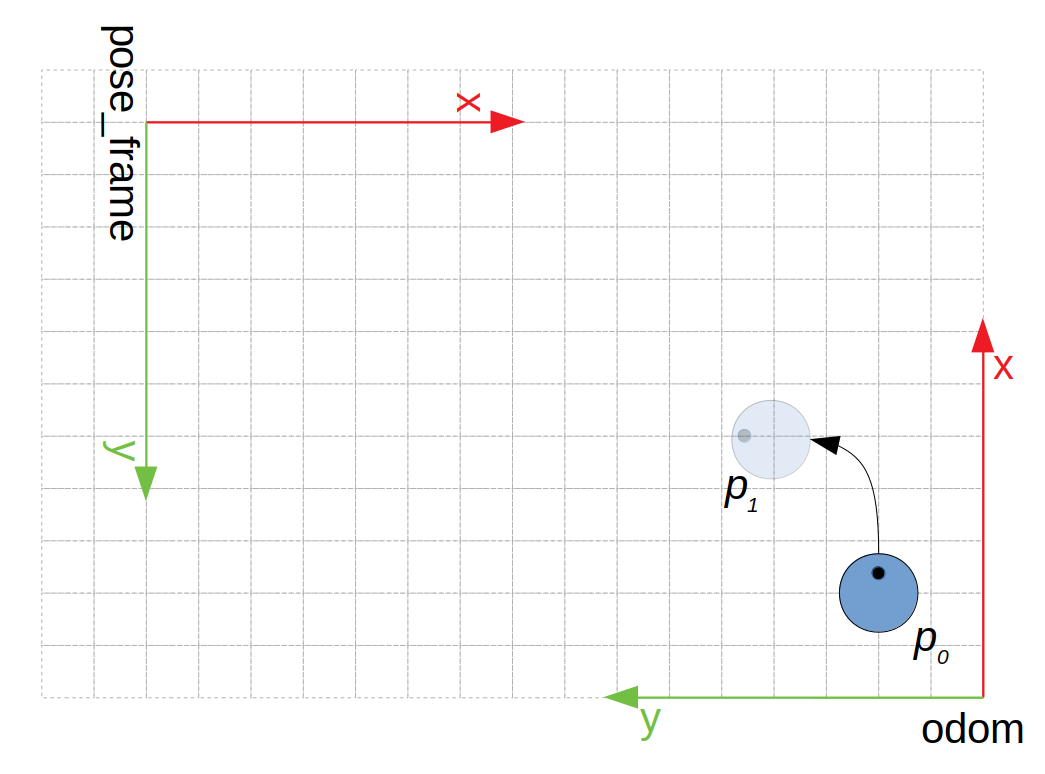

However, when I turn the differential parameter on, the filter ignores a 180 degree roll I am applying with a static transform publisher (in the launch file below) from my visual odometry sensor. Thus my y-axis values get flipped and yaw changes in the wrong direction. I traced the code that handles this in the robot_localization package. Specifically, ros_filter.cpp starting at line 2542:

// Handle issues where frame_id data is not filled out properly

// @todo: verify that this is necessary still. New IMU handling may

// have rendered this obsolete.std::string finalTargetFrame;

if (targetFrame == "" && msg->header.frame_id == "")

{

// Blank target and message frames mean we can just

// use our world_frame

finalTargetFrame = worldFrameId_;

poseTmp.frame_id_ = finalTargetFrame;

}

else if (targetFrame == "")

{

// A blank target frame means we shouldn't bother

// transforming the data

finalTargetFrame = msg->header.frame_id;

poseTmp.frame_id_ = finalTargetFrame;

}

else

{

// Otherwise, we should use our target frame

finalTargetFrame = targetFrame;

poseTmp.frame_id_ = (differential ? finalTargetFrame : msg->header.frame_id);

}

The trinary statement on the last line seems to suggest that when differential mode is on, the source and target frames are both set to targetFrame (which I traced to be the world frame for the filter). So in my case it will cause an odom to odom transformation and thus my static transform is not used.

So my question is why is this the case? Does anybody know the reasoning behind this? Please let me know if any more information is needed from me.

Launch File:

<launch>

<!-- starting middleman covariance nodes (allows setting of covarainces after data collection) -->

<rosparam command="load" file="$(find tractor_localization)/params/dual_ekf_navsat_IMU.yaml" />

<node pkg="pose_covariances" type="pose_covariances.py" name="pose_covariances" clear_params='true' output="screen" />

<node pkg="imu_covariances" type="imu_covariances.py" name="imu_covariances1" clear_params='true' output="screen" />

<node pkg="pose_covariances" type="pose_to_odom.py" name="pose_to_odom" clear_params='true' output="screen" />

<!-- starting static transform publishers -->

<node pkg="tf" type="static_transform_publisher"

name="gps_transform"

args="0.055 -0.095 0 0 0 0 base_link gps 30" /> <!-- should this be to the map frame?? -->

<node pkg="tf" type="static_transform_publisher"

name="imu_transform"

args="-0.068 -0.062 0 -1.763 0 0 base_link base_link_imu1 30" />

<node pkg="tf" type="static_transform_publisher"

name="svo_transform_odom"

args="0.023 0.1 0 0 0 3.1415 odom cam0 30" />

<!-- x y z yaw pitch roll frame_id ...