setOrigin: broadcasting a TF transform for a filtered cluster box

Greetings!

I'm currently working on pick and place with a Kinect camera, detecting a box in the point cloud.

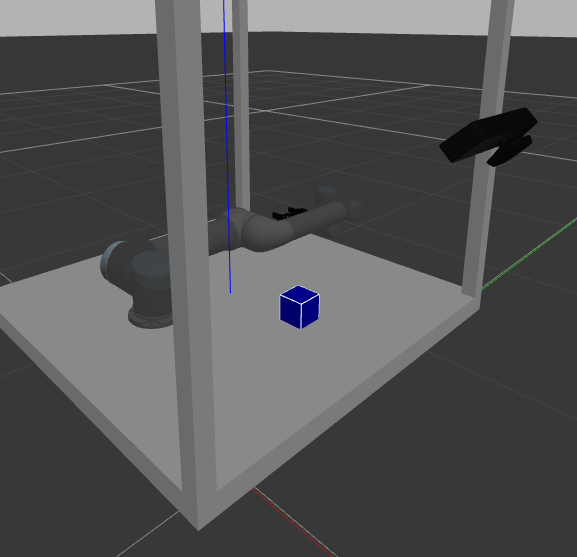

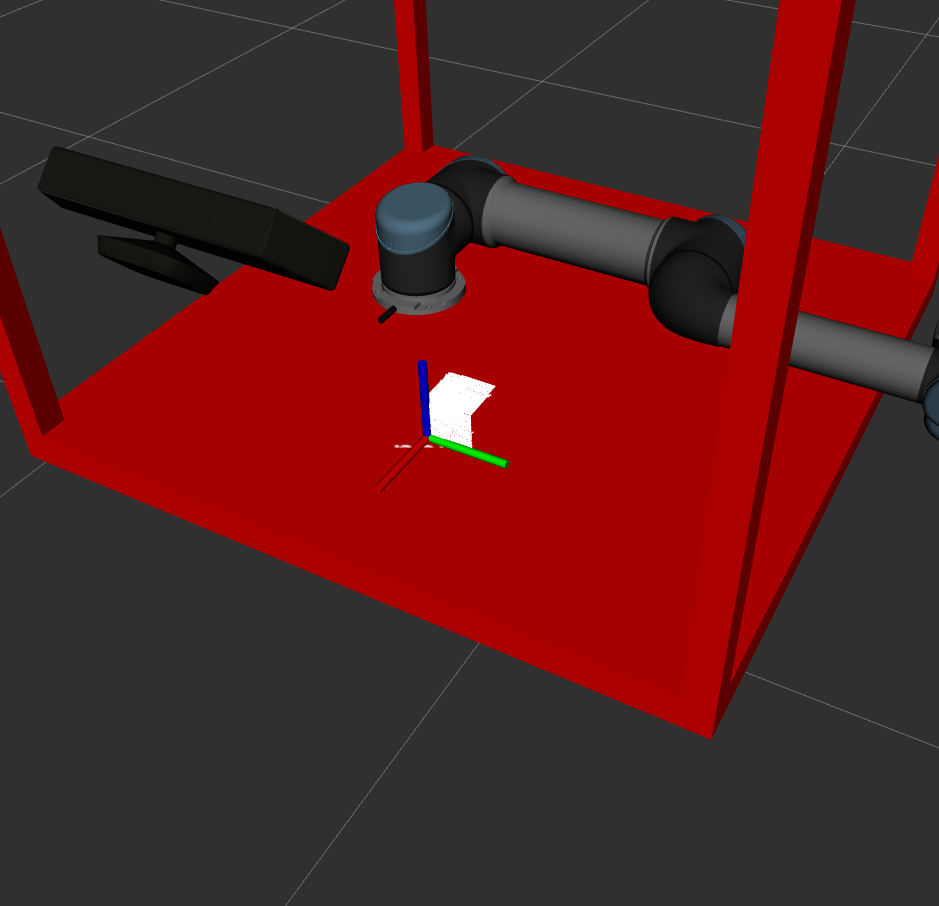

As shown in the pictures above, several PCL methods successfully detect the box-like cluster (as the biggest cluster on the table).

As I understand it, the goal of the pipeline is to publish the result as a TF transform that the arm can move towards and grasp.

Unfortunately, when I broadcast the transform, its origin ends up at a corner of the box instead of at the middle/top, which is not a useful target for moveit_commander.
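To pin down what "middle/top" could mean numerically, here is a minimal standalone sketch (not the original code) that takes the cluster's axis-aligned bounding box and returns the center of its top face. It assumes the points are already expressed in a frame whose +z axis points up (such as the world frame); `Point3` and `topFaceCenter` are hypothetical names, with `Point3` standing in for `pcl::PointXYZ` so the example compiles without PCL.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical stand-in for pcl::PointXYZ; only x, y, z are used.
struct Point3 {
  float x, y, z;
};

// Center of the top face of the cluster's axis-aligned bounding box.
// Assumption: +z points up in the frame the points are expressed in.
Point3 topFaceCenter(const std::vector<Point3>& cloud) {
  assert(!cloud.empty());
  Point3 min_pt = cloud.front();
  Point3 max_pt = cloud.front();
  // Track the minimum and maximum coordinate along each axis.
  for (const Point3& p : cloud) {
    min_pt.x = std::min(min_pt.x, p.x);
    min_pt.y = std::min(min_pt.y, p.y);
    min_pt.z = std::min(min_pt.z, p.z);
    max_pt.x = std::max(max_pt.x, p.x);
    max_pt.y = std::max(max_pt.y, p.y);
    max_pt.z = std::max(max_pt.z, p.z);
  }
  // Middle of the bounding box in x/y, highest extent in z.
  return Point3{(min_pt.x + max_pt.x) / 2.0f,
                (min_pt.y + max_pt.y) / 2.0f,
                max_pt.z};
}
```

In PCL, `pcl::getMinMax3D` computes the same minimum and maximum corners directly from a `pcl::PointCloud`. If the box is rotated on the table, the axis-aligned box is larger than its real footprint, and a single camera view captures only part of the box, so treat the result as an approximation.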

Following Source 1 and Source 2, I set up the tf broadcaster like this:

```cpp
static tf::TransformBroadcaster br;
tf::Transform part_transform;

// x, y and z in tf::Vector3(x, y, z) should be calculated from the point cloud filtering results
part_transform.setOrigin(tf::Vector3(sor_cloud_filtered->at(0).x, sor_cloud_filtered->at(0).y, sor_cloud_filtered->at(0).z));

tf::Quaternion q;
q.setRPY(0, 0, 0);
part_transform.setRotation(q);

// Broadcast the transform
br.sendTransform(tf::StampedTransform(part_transform, ros::Time::now(), world_frame, "part"));
```
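A likely explanation, offered tentatively: `sor_cloud_filtered->at(0)` is simply the first point stored in the filtered cloud, and nothing guarantees that it lies near the middle of the box; it can just as well sit on an edge or corner. Averaging all points of the cluster gives its centroid instead. The standalone sketch below uses a hypothetical `Point3` struct in place of `pcl::PointXYZ` so that it compiles without PCL.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for pcl::PointXYZ; only x, y, z are used.
struct Point3 {
  float x, y, z;
};

// Arithmetic mean of all points in the cluster (its centroid).
// Unlike cloud.front(), this does not depend on the order the points are stored in.
Point3 centroid(const std::vector<Point3>& cloud) {
  assert(!cloud.empty());
  // Accumulate in double to limit rounding error on large clouds.
  double sx = 0.0, sy = 0.0, sz = 0.0;
  for (const Point3& p : cloud) {
    sx += p.x;
    sy += p.y;
    sz += p.z;
  }
  const double n = static_cast<double>(cloud.size());
  return Point3{static_cast<float>(sx / n),
                static_cast<float>(sy / n),
                static_cast<float>(sz / n)};
}
```

In the original node, the equivalent PCL call would be `pcl::compute3DCentroid(*sor_cloud_filtered, centroid)` with an `Eigen::Vector4f centroid`, after which `centroid[0]`, `centroid[1]` and `centroid[2]` can be passed to `tf::Vector3`. Two caveats: a Kinect sees only the faces turned towards it, so the centroid lies near the visible surfaces rather than at the box's true center; and the origin is only meaningful relative to `world_frame` if `sor_cloud_filtered` is already expressed in that frame.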

Am I making a mistake somewhere? Has anyone faced the same "tf in the corner" problem?

Feel free to ask if anything needs clarifying!

Best Regards,

Artemii

Could you please attach your images directly to the question? I've given you sufficient karma for that.

Thank you, @gvdhoorn! Attached directly now.