robot_localization - IMU setup or other problem?

Hi ROS friends,

I'm building a custom ROS robot using the SenseHat ( https://projects.raspberrypi.org/en/p... ) for the IMU.

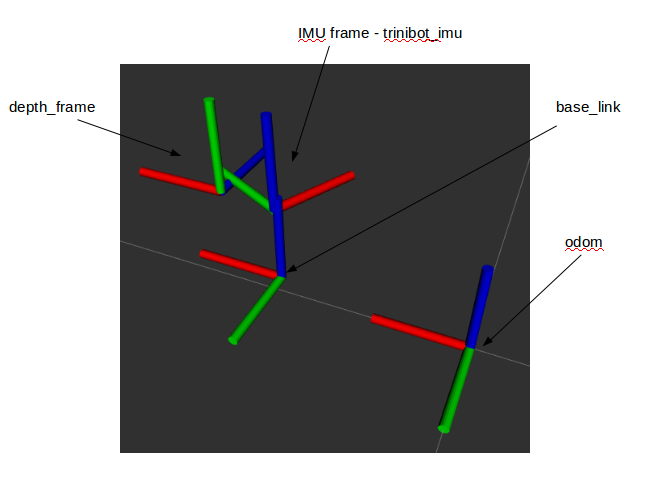

I have followed REP-105 to define a set of frames published by TF2. All frames move consistently as seen in RViz (see image)

I'm now trying to use the EKF filter in robot_localization to fuse odometry and the IMU. After hours of hacking the EKF node doesnt output anything!!

I think my main issue is the IMU configuration and wondering if any expert out there can help me with this!

What the robot is providing at the moment:

odometry : pose (x,y, theta) and velocity (vx,vy, w)

TF: odom, baselink, trinibot_imu (imu frame) and depth_frame (for a ToF) (As shown in the image below)

Following transforms are being published:

odom->baselink

base_link -> trinibot_imu

base_link -> depth_frame

The IMU frame, trinibot_imu provides a static transform of the IMU relative to base_link like so

imu_tr.header.frame_id = "base_link"

imu_tr.child_frame_id = "trinibot_imu"

imu_tr.transform.translation.x = 0.008

imu_tr.transform.translation.z = 0.084788

imu_tr.transform.translation.y = 0.0

imu_tr.transform.rotation.x = 0

imu_tr.transform.rotation.y = 0

imu_tr.transform.rotation.z = 0

imu_tr.transform.rotation.w = 1

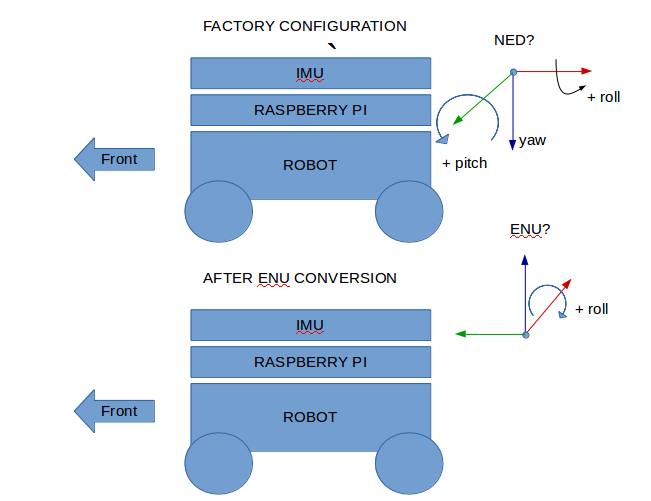

The IMU (sensehat) seemed to be providing a NED transform. The sensehat API only provides Euler angles so I transformed these into a quaternion and then applied a ENU transformation like so:

quat = tf.transformations.quaternion_from_euler(sense.get_orientation_degrees()['roll']*D2R, \

sense.get_orientation_degrees()['pitch']*D2R, \

sense.get_orientation_degrees()['yaw']*D2R)

quat= quat_NED2ENU(quat)

def quat_NED2ENU(iquat):

a = np.array([0.707, 0.707, 0, 0 ])

b = np.array([[[iquat[3], -iquat[2], iquat[1], -iquat[0]], \

[iquat[2], iquat[3], -iquat[0], -iquat[1]], \

[-iquat[1], iquat[0], iquat[3], -iquat[2]], \

[iquat[0], iquat[1], iquat[2], iquat[3]]]])

c = np.array([[[0, 0, -0.707, 0.707], \

[0 , 0, 0.707, 0.707], \

[0.707, -0.707, 0, 0], \

[-0.707, -0.707, 0 ,0]]])

return (a.dot(b)).dot(c)

The above seems to transform the NED frame in an ENU frame. When aligning the rear of the robot against magnetic north (using my phone app), the quaternion looks like this and oriented as seen by the trinibot_frame in the image

orientation:

x: -0.00801062127965

y: 0.00182113633242

z: -0.973651144752

w: 0.226565780595

Pitching the robot (clock-wise base_link Y) results in CW rotation around the imu_frame RED axis

Rolling the robot positive (clock-wise base_link X) results in a Counter-clockwise rotation around the imu_frame GREEN axis

Yaw around base_link Y is aligned with the imu yaw rotation

and accelerations of the IMU correspond ;

+9.81 in the Z axis

+9.81 in the Y axis (base_link roll 90 deg)

-9.81 in the X axis (base_link pitch 90 deg)

as stated here http://docs.ros.org/lunar/api/robot_l...

QUESTION 1: The robot_localization docs states that the IMU can be arbitrarily oriented. However it suggests a body fixed frame for the acceleration vector!!!

Acceleration: Be careful with acceleration data ...