Build a map with RTABMAP, RealSense, Sick and IMU with sensor fusion

Hi to all,

I'm trying to continue my work on 3D map building by using RealSense R200, a SICK laser and a XSENS IMU.

In particular, I would like to integrate 3D data coming from the RealSense with the 2D data from the SICK laser by compensating them with the XSENS IMU data. Currently, I'm using rtabmap_ros package with the subscribe_scan option enabled and I can successfully display 3D data under RTAB-Map.

These are the commands I use to run Realsense+SICK topics on RTAB-Map:

$ roscore

$ rosparam set use_sim_time true

$ rosrun tf static_transform_publisher 0 0 0 -1.5707963 0 -1.5707963 base_link camera_color_optical_frame 100

$ rosrun tf static_transform_publisher 0 0 0 0 0 0 camera_color_optical_frame camera_depth_optical_frame 100

$ roslaunch rtabmap_ros rgbd_mapping.launch rgb_topic:=/camera/color/image_raw depth_registered_topic:=/camera/depth/image_raw camera_info_topic:=/camera/color/camera_info compressed:=true frame_id:=base_link rtabmap_args:="--delete_db_on_start" estimation:=1

$ rosbag play --clock test1.bag (the bag contains both realsense and laser data)

And I have these transformations at the end of rgbd_mapping.launch file:

<node pkg="tf" type="static_transform_publisher" name="base_to_realsense"

args="0 0 0 -1.5707963 0 -1.5707963 /base_link /realsense_frame 100" />

<node pkg="tf" type="static_transform_publisher" name="base_to_laser"

args="0 0 0.1 0 0 0 /base_link /laser 100" />

<node pkg="tf" type="static_transform_publisher" name="base_to_color"

args="0 0 0.6 -1.5707963 0 -1.5707963 /base_link /camera_color_optical_frame 100" />

<node pkg="tf" type="static_transform_publisher" name="color_to_depth"

args="0 0 0 0 0 0 /camera_color_optical_frame /camera_depth_optical_frame 100" />

The problem is that without using the /imu/data messages, I'm not able to add information about pitch, roll and yaw angles; these are very important for my research since my robot moves on agricultural terrains which are often rough.

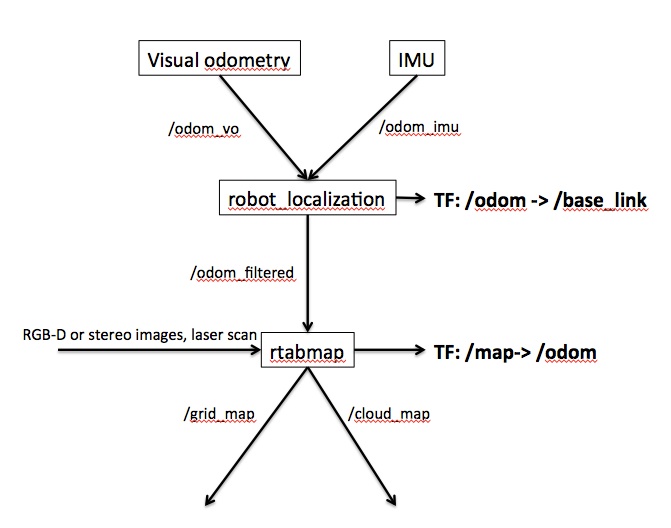

I've read that in order to add IMU information to RTABMAP, I need to perform some kind of sensor fusion by using robot_localization package.

If I well understood, I need to run robot_localization before to call rgbd_mapping.launch because I need to publish /odometry/filtered, is it correct?

I tried to create a new robot_localization launch file as below. Can you tell me if it is OK, please?

Moreover, I don't know how to generate the /odometry message since I only have some recorded bag files which contains encoders and differential_velocity messages. Can I generate /odometry message with this information?

Thank you!

<launch>

<node pkg="robot_localization" type="ekf_localization_node" name="ekf_localization" clear_params="true">

<param name="frequency" value="30"/>

<param name="sensor_timeout" value="0.1"/>

<param name="two_d_mode" value="false"/>

<!--

2. If you are fusing continuous position data such as wheel encoder odometry, visual odometry, or IMU data,

set "world_frame" to your odom_frame value. This is the default behavior for robot_localization's state

estimation nodes.

-->

<!-- Defaults to "map" if unspecified -->

<param name="map_frame" value="map"/>

<!-- Defaults to "odom" if unspecified -->

<param name="odom_frame" value="odom"/>

<!-- Defaults to "base_link" if unspecified -->

<param name="base_link_frame" value="base_link"/>

<!-- Defaults to the value of "odom_frame" if unspecified -->

<param name="world_frame" value="odom"/>

<param name="transform_time_offset" value="0.0"/>

<param name="odom0" value ...