Multiple Kinect(s) on the same Turtlebot3

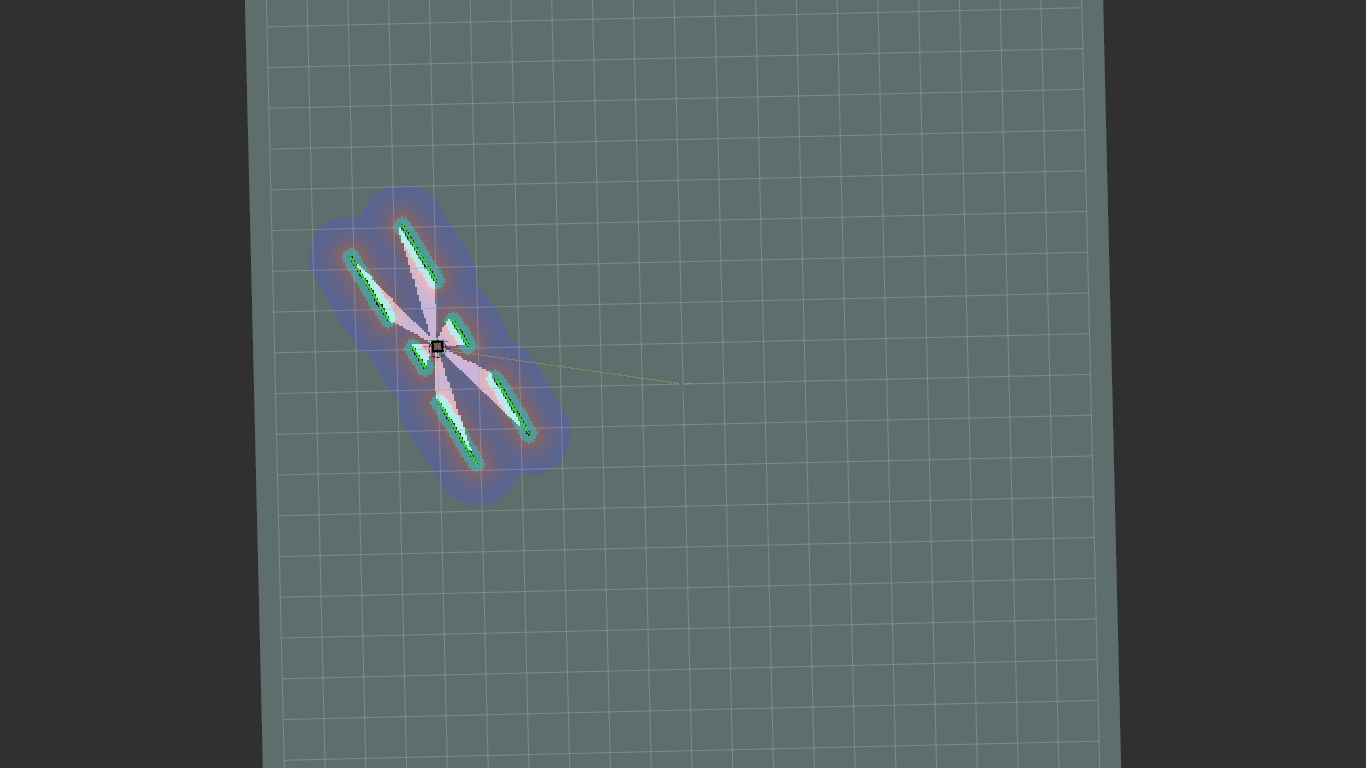

I built a Turtlebot3 Waffle Pi with 4 Kinects mounted on it, with the aim of replacing its laser scanner. To do this, I used the depthimage_to_laserscan package to convert each Kinect's depth image into a laser scan, and then merged the individual scans into a single scan with a custom Python ROS node. This is the result; judging by the map, it looks good at first sight:

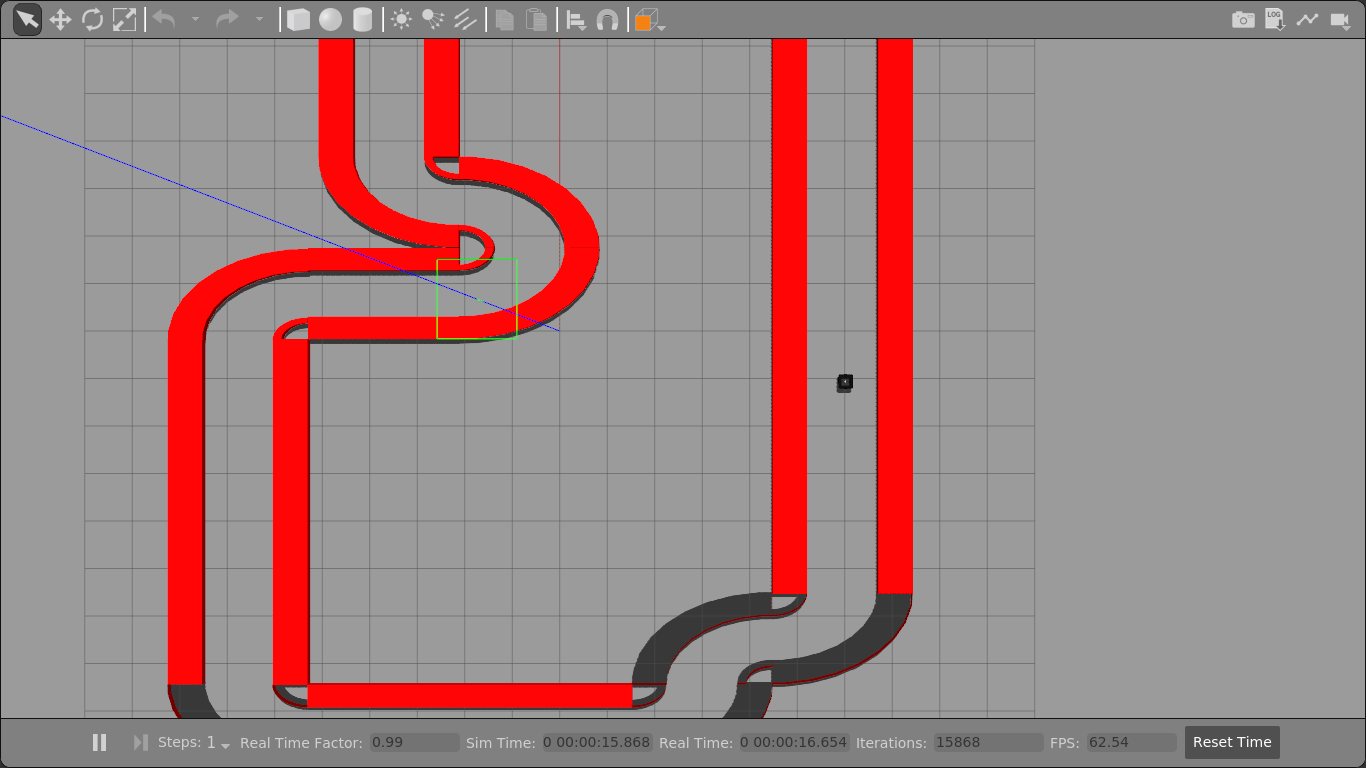

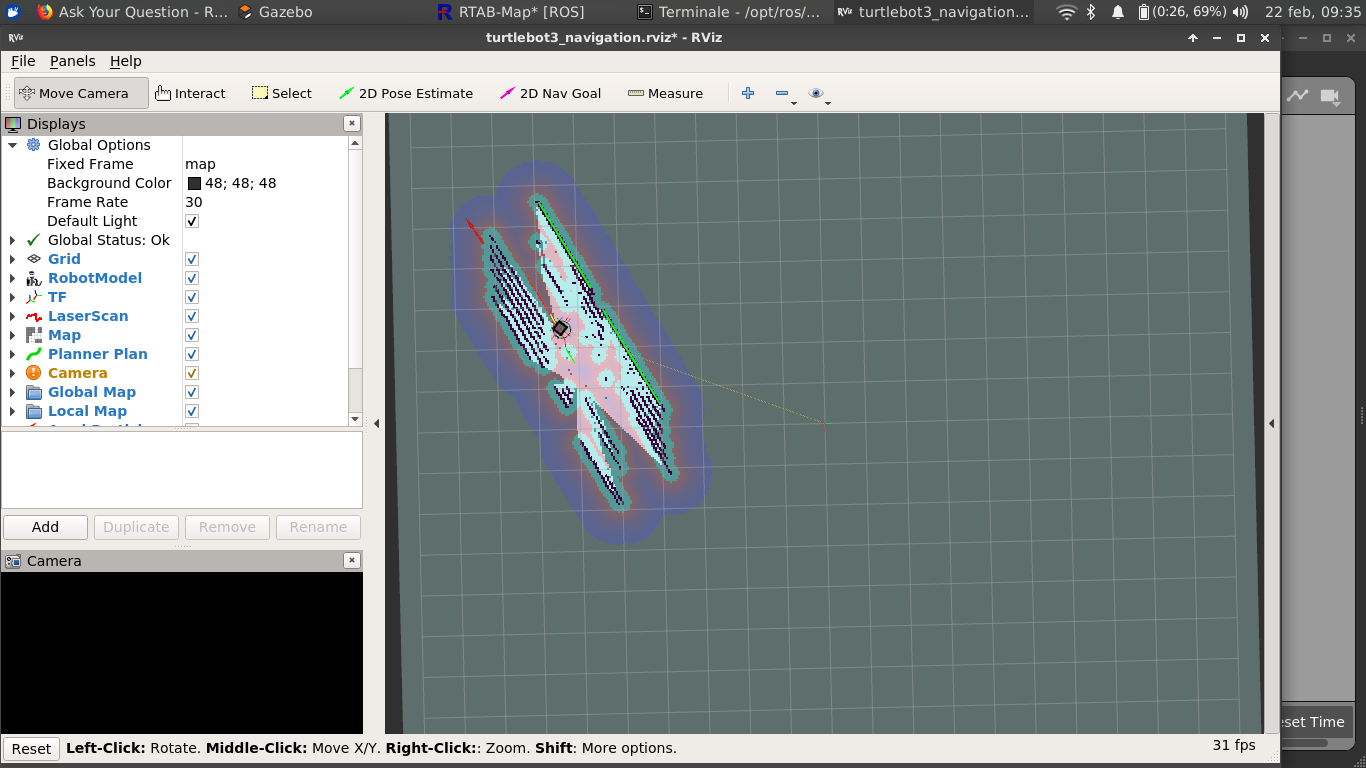
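For readers wondering what the merging step can look like, here is a minimal sketch in plain Python (no ROS imports). It is not the custom node from this post. The function name `merge_scans`, the dict layout, and the sensor poses are all hypothetical. Each beam is converted to a point in the robot base frame using the sensor's mounting pose `(x, y, yaw)`, and then re-binned by its angle around the robot center. If the mounting offsets are ignored, the merged distances come out wrong, which is one possible source of mismatched scans.

```python
import math

def merge_scans(scans, num_bins=360, range_max=10.0):
    """Merge several planar scans into one 360-degree scan in the base frame.

    Each scan is a dict (hypothetical layout) with:
      ranges          - list of distances in metres
      angle_min       - angle of the first beam, in the sensor frame (rad)
      angle_increment - angle between consecutive beams (rad)
      pose            - (x, y, yaw) of the sensor in the robot base frame
    Returns a list of num_bins ranges; empty bins are +inf.
    Bin k is centred on the angle -pi + k * (2*pi / num_bins).
    """
    merged = [math.inf] * num_bins
    bin_width = 2.0 * math.pi / num_bins
    for scan in scans:
        sx, sy, syaw = scan["pose"]
        for i, r in enumerate(scan["ranges"]):
            # Skip invalid or out-of-range readings.
            if not math.isfinite(r) or r <= 0.0 or r > range_max:
                continue
            # Beam direction expressed in the base frame.
            beam = scan["angle_min"] + i * scan["angle_increment"] + syaw
            # Hit point in the base frame, including the sensor offset.
            px = sx + r * math.cos(beam)
            py = sy + r * math.sin(beam)
            dist = math.hypot(px, py)
            ang = math.atan2(py, px)  # in [-pi, pi]
            idx = int(round((ang + math.pi) / bin_width)) % num_bins
            # Keep the closest obstacle seen in each angular bin.
            if dist < merged[idx]:
                merged[idx] = dist
    return merged
```

In a real node, the ranges and angles would come from the `sensor_msgs/LaserScan` messages, and the poses from the robot's TF tree rather than from hard-coded values.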

But when I move it around using rtabmap, especially in small spaces, it behaves strangely: the scans overlap and don't match the right distances. The following image shows what happens when I tell it to move forward.

It works badly even when I use only localization, without navigation. I have mounted up to 6 Kinects on it, since each Kinect covers 60° (from angle_min to angle_max), and it still doesn't work. Does anyone have a suggestion? Thanks as always for the support.

What config are you using? Do you use wheel odometry or ICP odometry? Corridor-like environments with a short-range lidar are very challenging without good odometry. This is explained in the last paragraph of page 24 of this paper (description of Table 8).

@matlabbe Is it possible to extend the forward range of a Kinect, so that I get a longer-range lidar after the conversion? And yes, I use wheel odometry.

Yes, if your simulator can generate a depth image with very far values. Make sure to disable the maximum range of depthimage_to_laserscan:

~range_max (double, default: 10.0m)
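To illustrate why that parameter matters, here is a simplified model in plain Python of what a range limit does to a scan. This is not the package's own code. The function name `apply_range_limits` and the `range_min` value are assumptions for illustration, and marking rejected readings as NaN is also an assumption of this sketch. Only the 10.0 m default for `range_max` comes from the parameter listed above.

```python
import math

def apply_range_limits(ranges, range_min=0.45, range_max=10.0):
    """Keep readings inside [range_min, range_max]; mark the rest as NaN.

    Simplified illustration only: range_min=0.45 and the NaN convention
    are assumptions, not taken from this thread.
    """
    return [
        r if math.isfinite(r) and range_min <= r <= range_max else math.nan
        for r in ranges
    ]

# With the default limit, a 25 m reading is dropped...
limited = apply_range_limits([0.2, 5.0, 25.0])
# ...while a larger range_max lets it through.
extended = apply_range_limits([0.2, 5.0, 25.0], range_max=100.0)
```

So even if the simulated depth image contains far values, they only reach the merged scan if `range_max` is set high enough to let them pass.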