The site is read-only. Please transition to use Robotics Stack Exchange

| ROS Resources: Documentation | Support | Discussion Forum | Index | Service Status | ros @ Robotics Stack Exchange |

| 1 | initial version |

From the database you shared, the depth image seems strange because the trees are mostly big polygons with texture. But with this input data, the occupancy grid is generated correctly though.

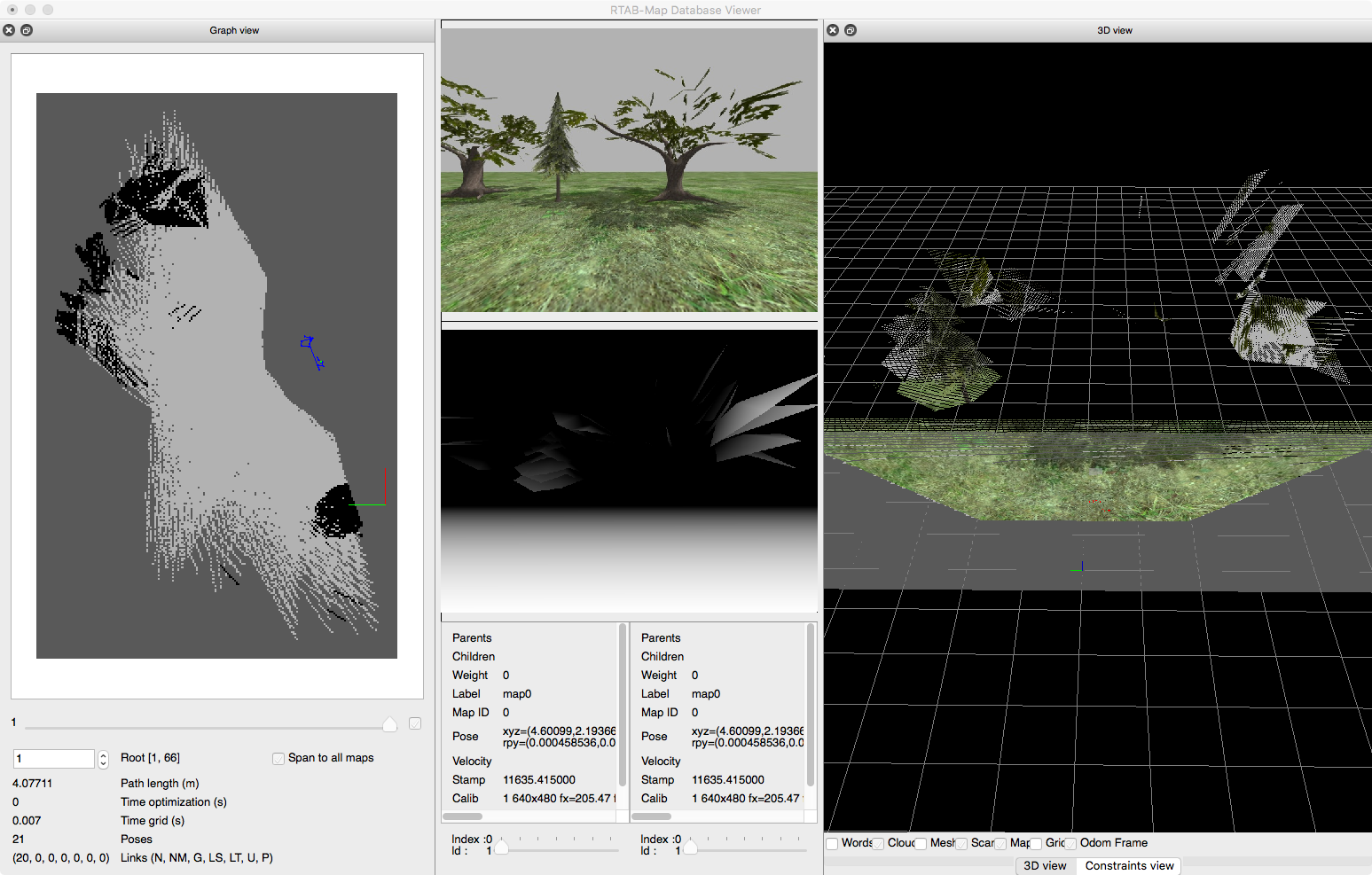

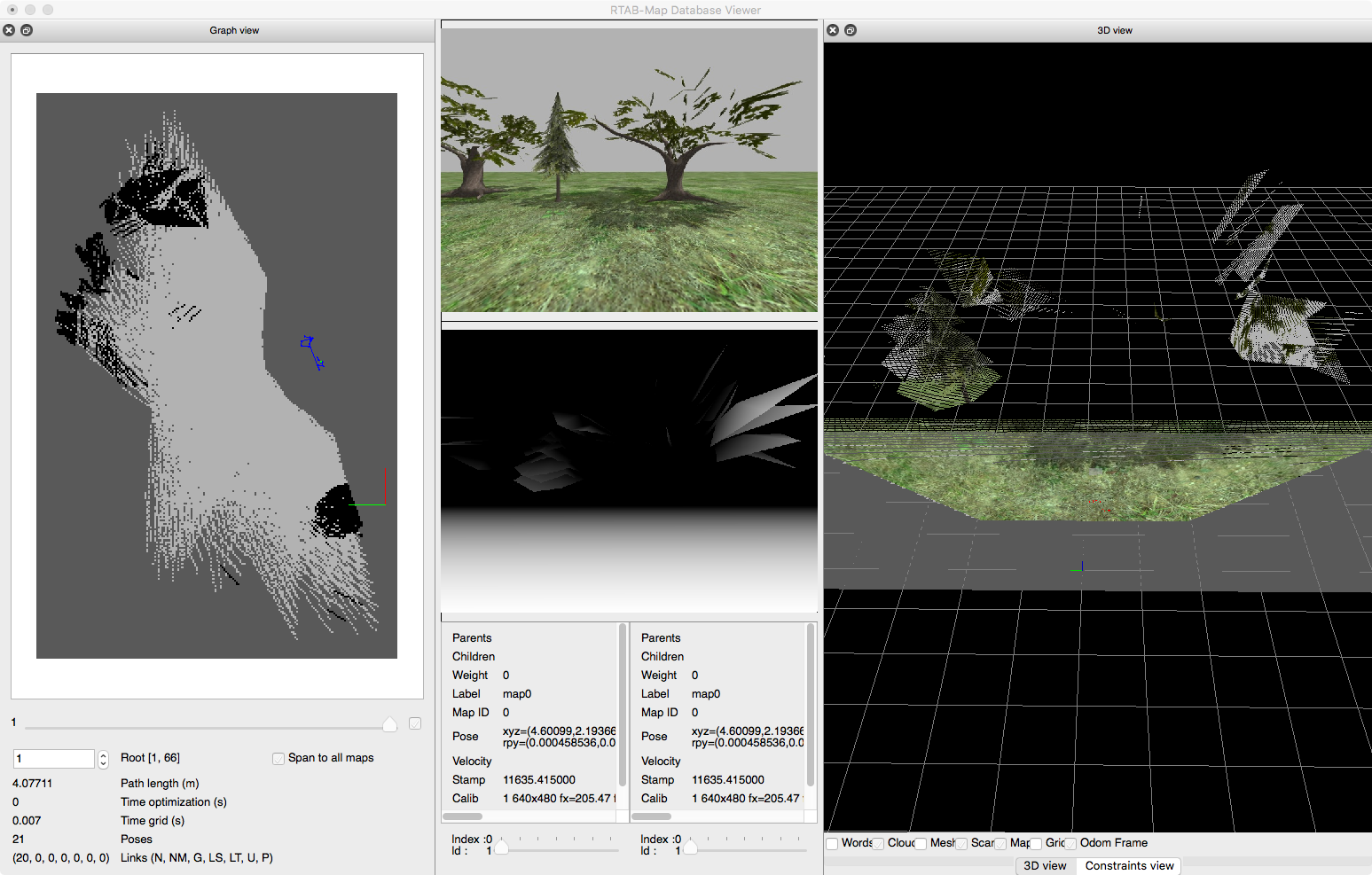

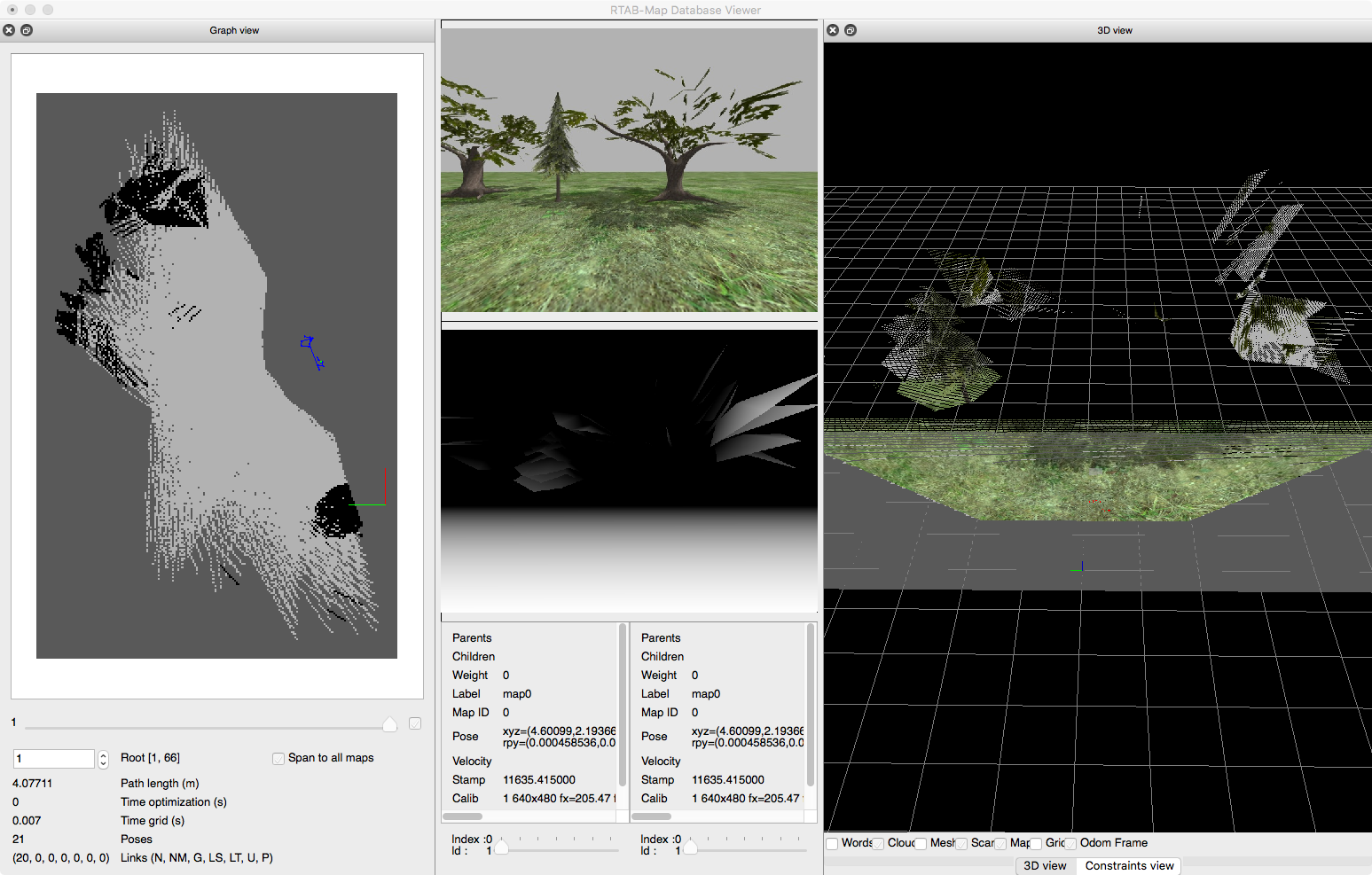

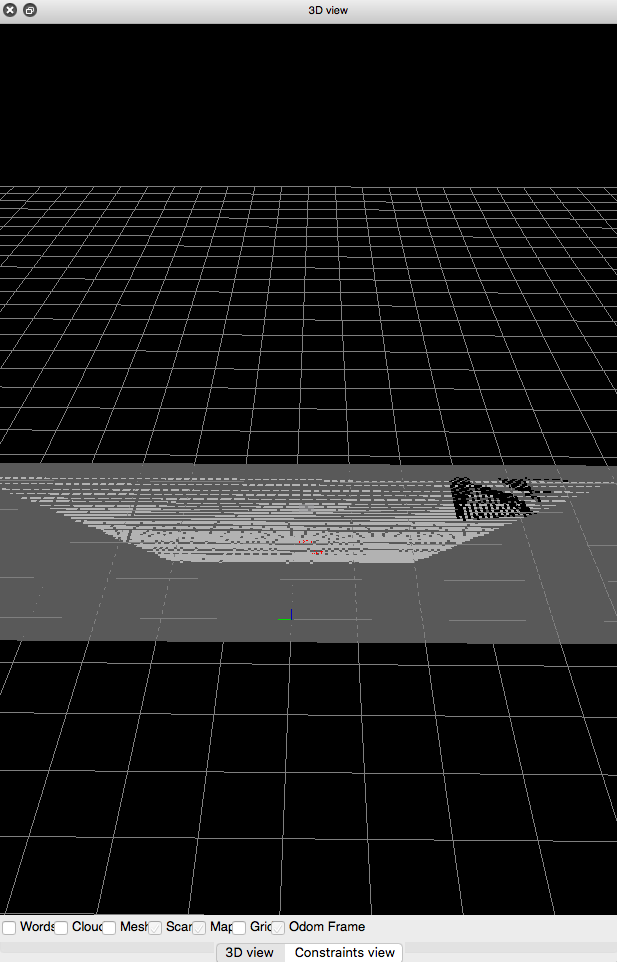

Using rtabmap-databaseViewer, raw point cloud with RGB+depth image (on left all local occupancy grids assembled) :

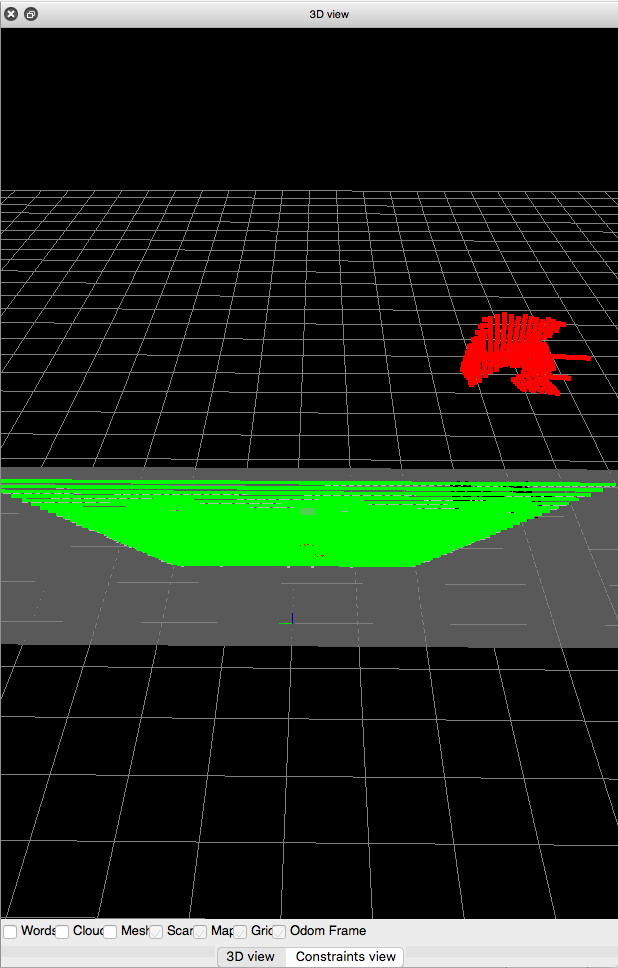

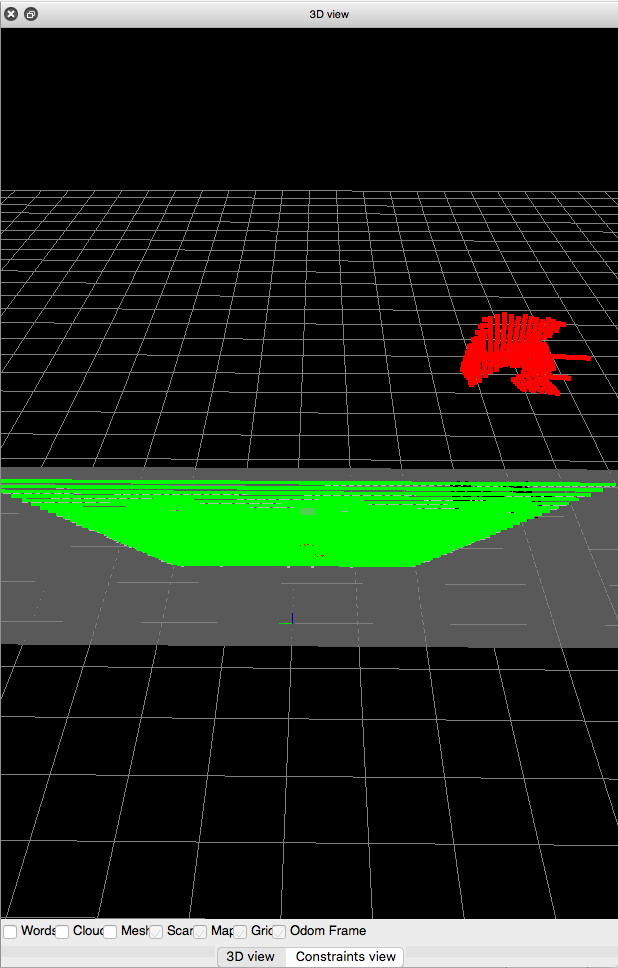

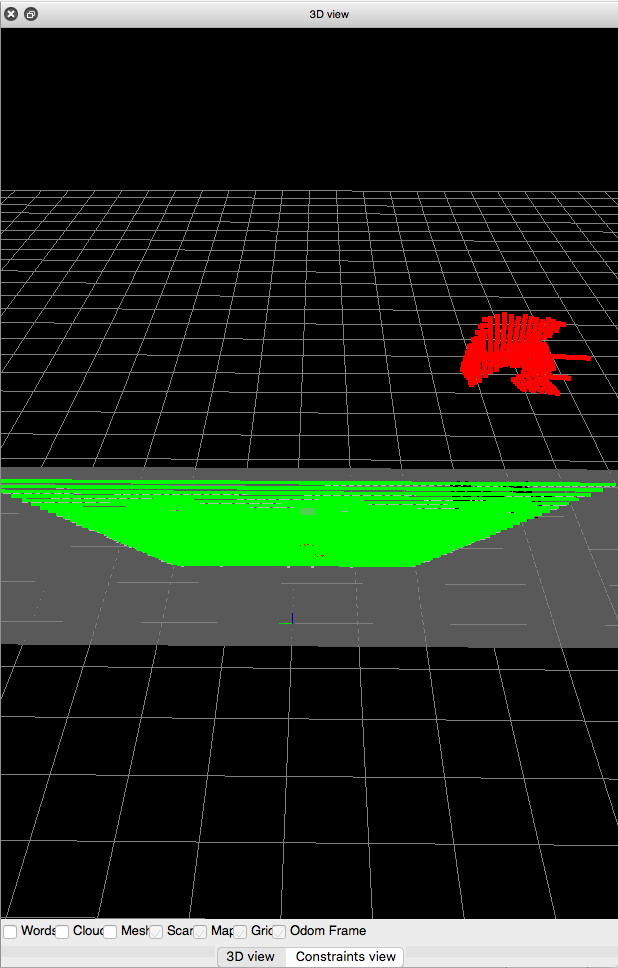

3D Segmentation based on Grid/ parameters (note that as Grid/RangeMax is 4 meters, the cloud is cut up to 4 meters):

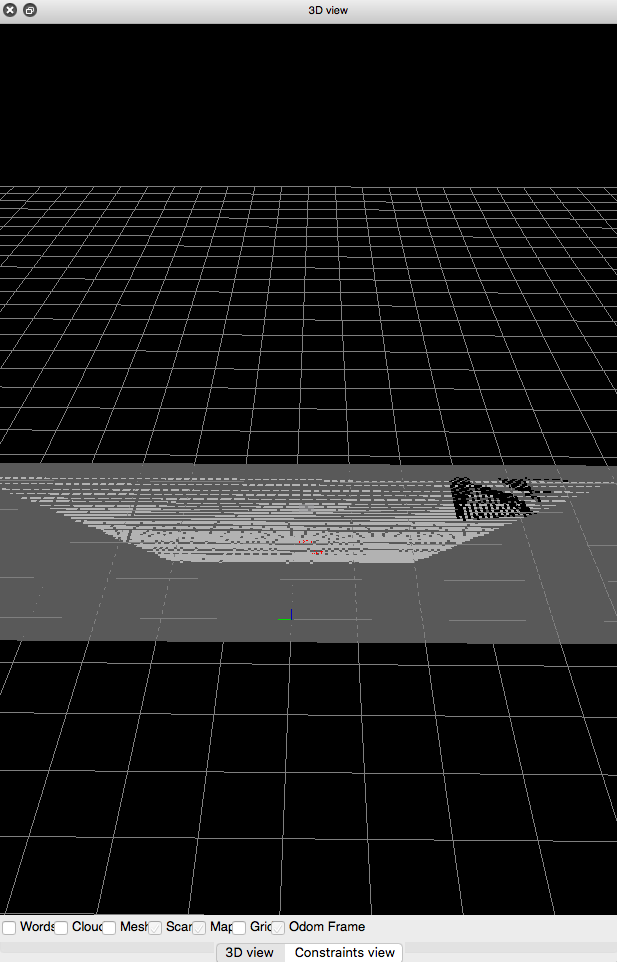

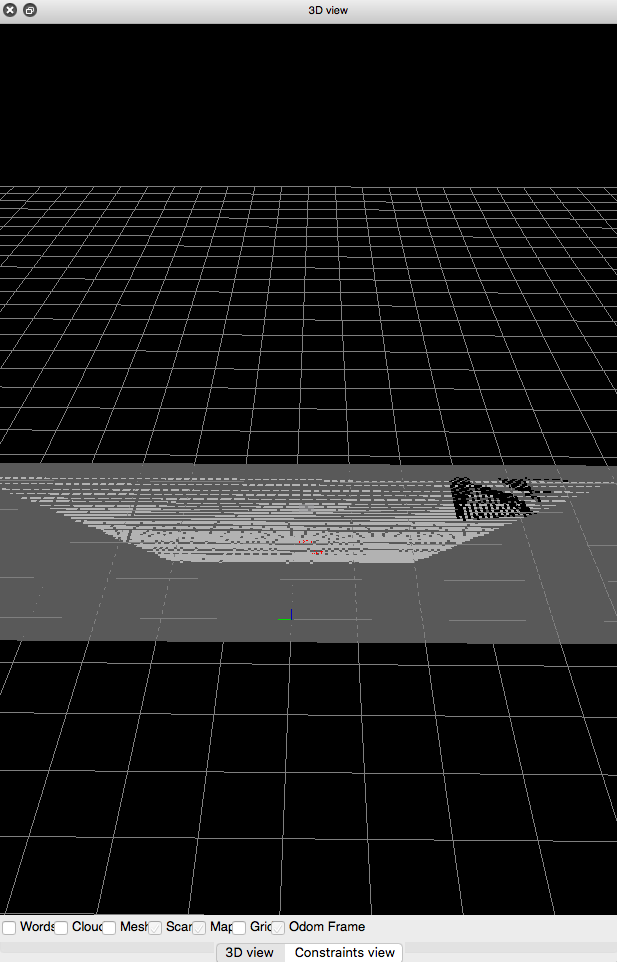

Then the projected 2D grid for this image:

cheers,

Mathieu

| 2 | No.2 Revision |

From the database you shared, the depth image seems strange because the trees are mostly big polygons with texture. But with this input data, the occupancy grid is generated correctly though.

Using rtabmap-databaseViewer, raw point cloud with RGB+depth image (on left all local occupancy grids assembled) :

3D Segmentation based on Grid/ parameters (note that as Grid/RangeMax is 4 meters, the cloud is cut up to 4 meters):

Then the projected 2D grid for this image:

EDIT

In your obstacle_layer, you take the whole point cloud of /quad/camera_/depth/points as obstacle, including the ground. It is why the ground is seen as an obstacle in the costmap. You may use a filtered cloud from /quad/camera_/depth/points without the ground instead. If the drone is flying always at the same height, it would not be so difficult to filter the ground (like with a pcl_ros passthrough). In contrast to this example with a ground robot, if the drone is moving also in Z and/or the ground is not flat, you may want to keep the ground as obstacle and use the 3D map/OctoMap output of rtabmap for 3D navigation (with moveit?).

For the Grid/RangeMax parameter, if you have an older rtabmap version, the parameter is called Grid/DepthMax (Grid/DepthMax is automatically converted to Grid/RangeMax if the version supports it). To have more points to project at longer distance, you can also set Grid/DepthDecimation to 1 (default 4) at the cost of more computation time.

cheers,

Mathieu

| 3 | No.3 Revision |

From the database you shared, the depth image seems strange because the trees are mostly big polygons with texture. But with this input data, the occupancy grid is generated correctly though.

Using rtabmap-databaseViewer, raw point cloud with RGB+depth image (on left all local occupancy grids assembled) :

3D Segmentation based on Grid/ parameters (note that as Grid/RangeMax is 4 meters, the cloud is cut up to 4 meters):

Then the projected 2D grid for this image:

EDIT

In your obstacle_layer, you take the whole point cloud of /quad/camera_/depth/points /quad/camera_/depth/pointsobstacle, obstacle, including the ground. It is why the ground is seen as an obstacle in the costmap. You may use a filtered cloud from /quad/camera_/depth/points without the ground instead. If the drone is flying always at the same height, it would not be so difficult to filter the ground (like with a pcl_ros passthrough). In contrast to this example with a ground robot, if the drone is moving also in Z and/or the ground is not flat, you may want to keep the ground as obstacle and use the 3D map/OctoMap output of rtabmap for 3D navigation (with moveit?).

For the Grid/RangeMax parameter, if you have an older rtabmap version, the parameter is called Grid/DepthMax (Grid/DepthMax is automatically converted to Grid/RangeMax if the version supports it). To have more points to project at longer distance, you can also set Grid/DepthDecimation to 1 (default 4) at the cost of more computation time.

cheers,

Mathieu

ROS Answers is licensed under Creative Commons Attribution 3.0 Content on this site is licensed under a Creative Commons Attribution Share Alike 3.0 license.

ROS Answers is licensed under Creative Commons Attribution 3.0 Content on this site is licensed under a Creative Commons Attribution Share Alike 3.0 license.