This is a common "issue" with gmapping, but it isn't really a bug: it's how the algorithm works.

In your video we can see that your robot's sensor has a limited field of view (about 90°, apparently from -45° to 45°). So when the robot follows a straight wall on its right, it doesn't detect anything on its left, because your walls are too far apart.
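To make the geometry concrete, here is a minimal Python sketch that casts the beams of a 2D laser with a 90° field of view inside an infinite straight corridor and reports which wall each beam hits. The wall distances, the 3.5 m maximum range and the function name `simulate_scan` are hypothetical values chosen for illustration, not taken from your setup.

```python
import math


def simulate_scan(d_right, d_left, r_max, fov_deg=90.0, n_beams=91):
    """Cast laser beams in an infinite straight corridor (hypothetical toy model).

    The robot sits at the origin facing +x, the right wall is the line
    y = -d_right and the left wall is the line y = +d_left. Angles are measured
    counterclockwise (positive = left). Returns (angle_deg, wall, range) for
    every beam that hits a wall within r_max.
    """
    half = math.radians(fov_deg) / 2.0
    hits = []
    for i in range(n_beams):
        theta = -half + i * (2.0 * half) / (n_beams - 1)
        s = math.sin(theta)
        if s < 0:
            wall, dist = "right", d_right / -s
        elif s > 0:
            wall, dist = "left", d_left / s
        else:
            continue  # a beam parallel to the walls never hits them
        if dist <= r_max:
            hits.append((math.degrees(theta), wall, dist))
    return hits


# Hypothetical corridor: right wall 0.5 m away, left wall 4 m away, 3.5 m laser range.
walls_seen = {wall for _, wall, _ in simulate_scan(0.5, 4.0, 3.5)}
print(walls_seen)  # {'right'}
```

Even the +45° beam would need 4 / sin(45°) ≈ 5.7 m to reach the left wall, so only the right wall shows up in the scan. With the left wall at 2 m instead, the beams near +45° reach it as well.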

For localization, gmapping compares what the sensor detects with the map it is building. In your case the sensor keeps returning nearly identical data because the wall is straight. That wouldn't be a problem if the robot encountered another obstacle a little further on, or if the sensor could see the wall behind the robot. But because the robot keeps getting the same data, after a while the algorithm concludes that the odometry has been wrong (odometry can be very noisy, so gmapping gives priority to the scan data) and corrects the robot's position accordingly.
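A toy illustration of why a straight wall is a problem for scan matching: the Python sketch below scores candidate corrections of the robot's position along the wall by counting how many scan points land on occupied map cells. This is a deliberately simplified grid matcher, not gmapping's actual scan matcher, and the map, the scan and the `match_scores`/`best_shifts` names are hypothetical.

```python
def match_scores(occupied_cells, scan_points, shifts):
    """Score each candidate shift dx along the wall (x axis).

    The score is the number of scan points that land on an occupied map cell
    after shifting the scan by dx. A higher score means a better match.
    """
    occupied = set(occupied_cells)
    return {dx: sum((x + dx, y) in occupied for x, y in scan_points) for dx in shifts}


def best_shifts(scores):
    """Return every shift that reaches the maximum score, in ascending order."""
    top = max(scores.values())
    return sorted(dx for dx, s in scores.items() if s == top)


# Hypothetical grid map: a straight wall on the right (y = -1), 50 cells long.
wall = [(x, -1) for x in range(50)]
# The scan only covers a short stretch of that wall (limited field of view).
scan = [(x, -1) for x in range(20, 26)]
shifts = range(-5, 6)

# Every shift from -5 to 5 gets the same score: the position along the wall is unconstrained.
print(best_shifts(match_scores(wall, scan, shifts)))

# Add one distinctive obstacle that the scan also sees.
wall_with_box = wall + [(22, 1)]
scan_with_box = scan + [(22, 1)]
print(best_shifts(match_scores(wall_with_box, scan_with_box, shifts)))  # [0]
```

In the first case any of those shifts explains the scan equally well, so nothing in the laser data pins down where the robot is along the wall; a single distinctive feature is enough to make the match unique.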

You have a few options to avoid this:

- If you are just using your simulation to test the

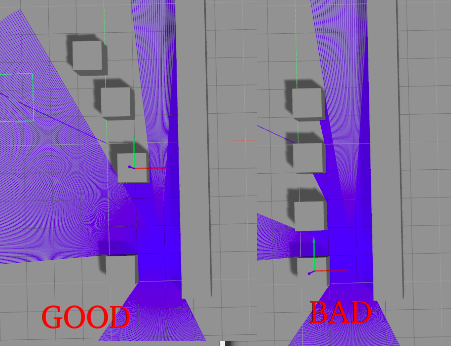

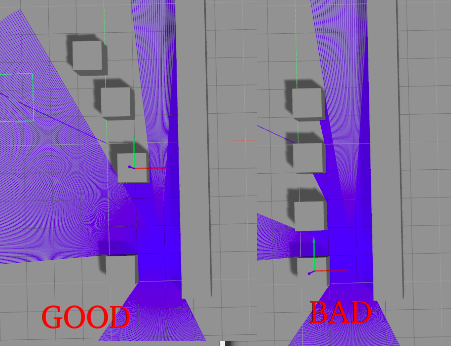

mapping and don't care to change your gazebo world, you can add more obstacles that would be detected by the sensor when following the wall. But you could find the same issue if you add your obstacles symetrically and spaced at the same distance. Here's a picture to explain it better :

- You can switch to a SLAM algorithm that gives more weight to odometry, provided your odometry data is good.

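- You may also be able to tune some gmapping parameters, but I'm not sure about that. For example, the slam_gmapping documentation says `minimumScore` can help avoid jumping pose estimates in large open spaces when using a laser with limited range.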
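A toy grid scan matcher can show the first two options in action: with obstacles at a regular spacing, several shifts match equally well, while irregular spacing or weighting in an odometry estimate leaves a single best shift. This is a hypothetical Python sketch; the 10-cell spacing, the `odom_weight` penalty and the function names are assumptions for illustration, and real SLAM packages combine odometry and scans in more elaborate, probabilistic ways.

```python
def match_scores(occupied_cells, scan_points, shifts):
    """Count scan points that land on occupied map cells for each shift dx along x."""
    occupied = set(occupied_cells)
    return {dx: sum((x + dx, y) in occupied for x, y in scan_points) for dx in shifts}


def best_shifts(scores):
    """Return every shift that reaches the maximum score, in ascending order."""
    top = max(scores.values())
    return sorted(dx for dx, s in scores.items() if s == top)


def fuse_with_odometry(scores, odom_dx, odom_weight):
    """Hypothetical fusion: penalise shifts that disagree with the odometry estimate."""
    return {dx: s - odom_weight * abs(dx - odom_dx) for dx, s in scores.items()}


wall = [(x, -1) for x in range(50)]
shifts = range(-12, 13)

# Obstacles every 10 cells on the left; the scan sees the one at x = 20.
regular = wall + [(x, 1) for x in range(0, 50, 10)]
scan = [(x, -1) for x in range(18, 28)] + [(20, 1)]
print(best_shifts(match_scores(regular, scan, shifts)))  # [-10, 0, 10]

# Irregularly spaced obstacles remove the ambiguity.
irregular = wall + [(x, 1) for x in (3, 20, 34, 41)]
print(best_shifts(match_scores(irregular, scan, shifts)))  # [0]

# With the regular layout, an odometry estimate (here: no correction needed) breaks the tie.
fused = fuse_with_odometry(match_scores(regular, scan, shifts), odom_dx=0, odom_weight=0.1)
print(best_shifts(fused))  # [0]
```

The value of `odom_weight` encodes how much you trust odometry: the tie is broken in favour of whatever odometry reports, so a drifting odometry estimate would pull the result along with it. That is why relying more on odometry only helps if your odometry data is good.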