Why do the visualization interfaces of Waymo, Uber, and Cruise look so similar?

I can't help noticing that these interfaces are similar to each other. Are they all using the same toolchain?

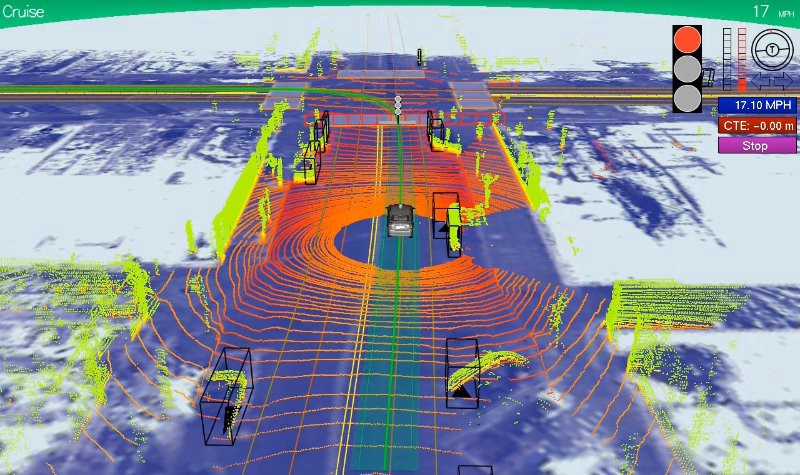

For instance, this photo shows video captured by a Google self-driving car alongside the same street scene as visualized by the car, taken during a presentation at a media preview of Google's prototype autonomous vehicles in Mountain View, California. Credit: Elijah Nouvelage/Reuters

Another example is the interface of an unknown company (maybe Uber?).

While researching ROS, I found that RViz is a popular visualization tool in the ROS community. Perhaps the final visualization is built with either RViz or Director. Is there any connection between the Uber/Google interfaces and RViz or ROS partners?