Hand Gesture Recognition - PointCloud to Image [closed]

Hello guys, I'm working on a hand gesture recognition project, but I still have some doubts and am looking for answers. This may not be the right place for my questions, and the PCL forum might be a better fit. However, my final goal is to use the project on a ROS robot, and many users are on both forums, so I will ask here.

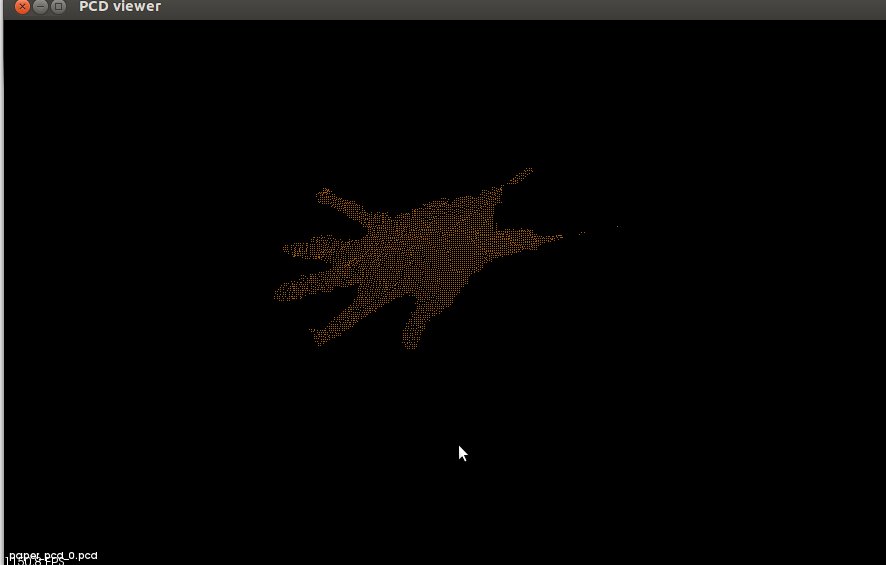

Actually is more a discussion, some advices than properly a question. I'm using PCL and a filter (now using a passthrough filter, but probably will change to a nearest filter) to acquire a hand image. Like in this image:

Starting from this image, I read and searched through many PCL tutorials on recognition and tested some of them, but they work better for static images or separate clouds than for this kind of real-time application, where the only cloud is the hand. Is there a good algorithm or code for this purpose?

So, using this image, I need to recognize it as a five-finger position. My current idea is to generate a 2D OpenCV image from it, train on such images, and use them for recognition. But I don't know how to do it: I tried converting the point cloud to sensor_msgs::Image, but it didn't work well. Another issue is that I could only save the cloud as PointCloudXYZ and not PointCloudXYZRGBA, so I don't have the RGB values. Is there a good way to do this transformation and generate a 2D image from the point cloud? Probably using just two axes (x, y) to get the hand contours.
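To make the "just two axes" idea concrete, here is a minimal sketch of one possible approach, not a tested solution: drop z and rasterize the x, y coordinates into a binary mask. It is written in plain C++ so it compiles without PCL or OpenCV. `Point3`, `BinaryImage` and `projectToImage` are hypothetical names made up for illustration; in a real node the points would presumably come from a `pcl::PointCloud<pcl::PointXYZ>`, and the axis orientation would depend on the sensor frame.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

// Hypothetical stand-in for pcl::PointXYZ so the sketch builds without PCL.
struct Point3 {
    float x, y, z;
};

// Hypothetical single-channel 8-bit image, stored row-major.
struct BinaryImage {
    int width = 0;
    int height = 0;
    std::vector<std::uint8_t> pixels;  // 0 = background, 255 = hand
};

// Orthographic projection onto the x-y plane: ignore z, scale the cloud's
// bounding box to fit the image, and mark every pixel that a point lands on.
BinaryImage projectToImage(const std::vector<Point3>& cloud, int width, int height) {
    BinaryImage img;
    if (width <= 0 || height <= 0) return img;
    img.width = width;
    img.height = height;
    img.pixels.assign(static_cast<std::size_t>(width) * height, 0);

    // Bounding box of the finite points (depth sensors often emit NaN points).
    const float inf = std::numeric_limits<float>::infinity();
    float minX = inf, maxX = -inf, minY = inf, maxY = -inf;
    for (const Point3& p : cloud) {
        if (!std::isfinite(p.x) || !std::isfinite(p.y)) continue;
        minX = std::min(minX, p.x);
        maxX = std::max(maxX, p.x);
        minY = std::min(minY, p.y);
        maxY = std::max(maxY, p.y);
    }
    if (minX > maxX) return img;  // no usable points, return an empty mask

    // A single scale for both axes preserves the hand's aspect ratio.
    const float span = std::max(maxX - minX, maxY - minY);
    const float scale = span > 0.0f ? std::min(width - 1, height - 1) / span : 0.0f;

    for (const Point3& p : cloud) {
        if (!std::isfinite(p.x) || !std::isfinite(p.y)) continue;
        int col = static_cast<int>((p.x - minX) * scale);
        int row = static_cast<int>((p.y - minY) * scale);
        col = std::max(0, std::min(col, width - 1));
        row = std::max(0, std::min(row, height - 1));
        img.pixels[static_cast<std::size_t>(row) * width + col] = 255;
    }
    return img;
}
```

The resulting buffer could then be wrapped in a `cv::Mat` of type `CV_8UC1` and passed to something like `cv::findContours`. Because the mask only encodes where points fall, the missing RGB values should not matter for contour extraction. A sparse cloud will leave holes in the mask, so a dilation or closing step before contour extraction is probably needed.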

I know this is a rather vague question, but thank you very much for your attention. I think we can have a good discussion about how to approach this project.

Luiz Felipe.