Real Kinect Scan Data Not Generating a Local Costmap in Nav2

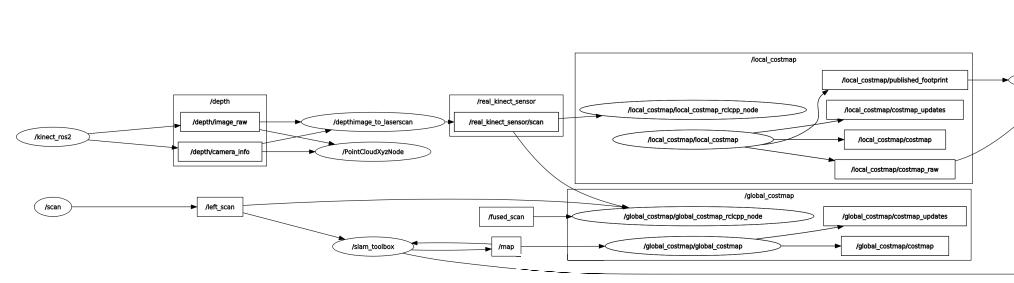

I am currently working on a project that integrates real Kinect sensor data with Nav2 for mapping and navigation. I can visualize the Kinect depth data in RViz2 after it is converted to laser scan data. However, this data is not being used to build the local costmap, the global costmap, or the map. You can see this in more detail in the following videos:

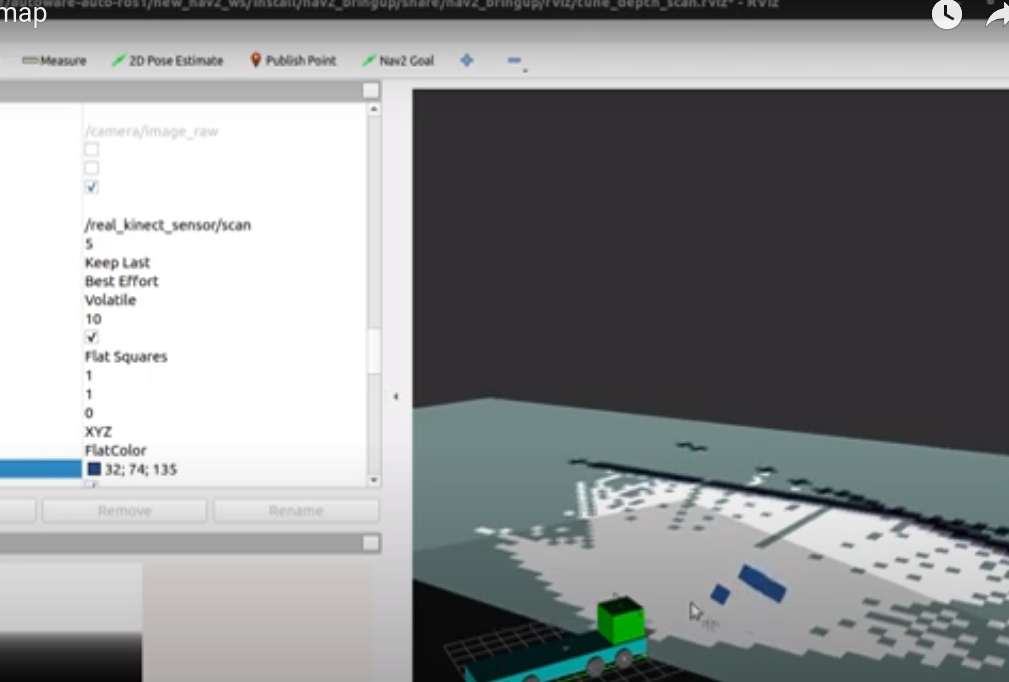

In the latter video, I even added an offset to the X position of the Kinect frame to check whether data near the center of the map would produce voxel grid or local costmap obstacles (shown as blue squares) from the real environment, but this was unsuccessful.
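To reason about the offset experiment, it may help to see roughly what a costmap's obstacle layer does with each scan: project the ranges to points, move them into the costmap frame using the sensor pose from TF, and mark the grid cells they land in. The following is a simplified Python sketch under those assumptions, not Nav2's actual implementation; the function names and the 4 m window at 0.25 m resolution are hypothetical. It shows that shifting the sensor pose shifts every marked cell, and that points outside the rolling window (or beyond the range limits) mark nothing.

```python
import math


def scan_to_points(ranges, angle_min, angle_increment, range_min, range_max):
    """Convert LaserScan-style ranges to (x, y) points in the sensor frame."""
    points = []
    for i, r in enumerate(ranges):
        # Drop NaN/inf and out-of-range returns instead of marking them
        if not math.isfinite(r) or r < range_min or r > range_max:
            continue
        angle = angle_min + i * angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


def transform_points(points, tx, ty, yaw):
    """Apply the sensor's 2D pose (tx, ty, yaw) in the costmap frame."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [(tx + c * x - s * y, ty + s * x + c * y) for x, y in points]


def mark_cells(points, origin_x, origin_y, resolution, width, height):
    """Return the (col, row) cells hit by points inside the grid window."""
    cells = set()
    for x, y in points:
        col = math.floor((x - origin_x) / resolution)
        row = math.floor((y - origin_y) / resolution)
        # Points that fall outside the (rolling) window are ignored
        if 0 <= col < width and 0 <= row < height:
            cells.add((col, row))
    return cells


# A 4 m x 4 m window at 0.25 m resolution, centred on the robot
points = scan_to_points([1.0, float("inf"), 10.0], 0.0, 0.1, 0.1, 5.0)
in_window = mark_cells(transform_points(points, 0.5, 0.0, 0.0), -2.0, -2.0, 0.25, 16, 16)
too_far = mark_cells(transform_points(points, 5.0, 0.0, 0.0), -2.0, -2.0, 0.25, 16, 16)
print(in_window, too_far)  # {(14, 8)} set()
```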

The steps I followed are as follows:

Run the Kinect driver to publish the Kinect data with the following command:

`ros2 run kinect_ros2 kinect_ros2_node`

Launch the ROS 2 nodes with the following commands:

`ros2 launch nav2_bringup display.launch.py`

`ros2 launch nav2_bringup online_async_launch.py`

`ros2 launch nav2_bringup navigation_launch.py`
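`navigation_launch.py` reads the costmap settings from a parameters file (its `params_file` argument), so one quick sanity check is whether the obstacle layer is actually configured to listen to `/real_kinect_scan`. The sketch below mirrors the relevant part of that file as a Python dict, with keys modelled on the layout of Nav2's default `nav2_params.yaml`; the dict, the helper `check_observation_sources`, and all values are hypothetical placeholders, not my actual configuration. The `use_sim_time` check reflects an assumption that the parameters may have been carried over from simulation.

```python
# Hypothetical mirror of the local_costmap parameters, written as a dict so
# the check runs without ROS. Keys follow the layout of Nav2's default
# nav2_params.yaml; the values are placeholders, not real settings.
LOCAL_COSTMAP = {
    "use_sim_time": False,  # a real robot usually has no /clock publisher
    "global_frame": "odom",
    "robot_base_frame": "base_link",
    "plugins": ["obstacle_layer", "inflation_layer"],
    "obstacle_layer": {
        "plugin": "nav2_costmap_2d::ObstacleLayer",
        "enabled": True,
        "observation_sources": "scan",
        "scan": {
            "topic": "/real_kinect_scan",
            "data_type": "LaserScan",
            "marking": True,
            "clearing": True,
            "obstacle_max_range": 2.5,
            "raytrace_max_range": 3.0,
        },
    },
    "inflation_layer": {"plugin": "nav2_costmap_2d::InflationLayer"},
}


def check_observation_sources(costmap, published_topics):
    """Return human-readable problems that would keep a layer from marking."""
    problems = []
    if costmap.get("use_sim_time"):
        problems.append("use_sim_time is True; on a real robot nothing usually publishes /clock")
    for layer_name in costmap.get("plugins", []):
        layer = costmap.get(layer_name, {})
        if not layer.get("enabled", True):
            problems.append(f"{layer_name}: layer is disabled")
        # observation_sources is a space-separated list of source names
        for source in layer.get("observation_sources", "").split():
            cfg = layer.get(source)
            if cfg is None:
                problems.append(f"{layer_name}: source '{source}' has no parameters")
                continue
            if cfg.get("topic") not in published_topics:
                problems.append(f"{layer_name}.{source}: {cfg.get('topic')} is not published")
            if not cfg.get("marking", True):
                problems.append(f"{layer_name}.{source}: marking is disabled")
    return problems


print(check_observation_sources(LOCAL_COSTMAP, {"/real_kinect_scan"}))  # []
```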

Here are the hosted files for reference:

In RViz2, I:

- Added (or selected) the /real_kinect_scan LaserScan topic.

- Set the topic's reliability policy to Best Effort instead of Reliable.

- Increased the display size from 0.01 to 1 to make the converted scan data visible.
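Needing Best Effort in RViz2 suggests the driver publishes `/real_kinect_scan` with Best Effort reliability (an assumption; the driver's QoS isn't stated here). In ROS 2, a subscription that requests Reliable does not match a publisher that only offers Best Effort, so every consumer of the topic, including the costmap, needs a compatible profile; `ros2 topic info --verbose /real_kinect_scan` lists the QoS of each publisher and subscription. The matching rule itself is small enough to sketch in plain Python (the names here are illustrative, not an rclpy API):

```python
from enum import Enum


class Reliability(Enum):
    BEST_EFFORT = "best_effort"
    RELIABLE = "reliable"


def reliability_compatible(offered, requested):
    """Request-vs-offered matching for the reliability QoS policy.

    A subscription requesting RELIABLE cannot match a publisher that only
    offers BEST_EFFORT; every other combination matches.
    """
    return not (offered is Reliability.BEST_EFFORT and requested is Reliability.RELIABLE)


# Print the full publisher/subscriber compatibility table
for pub in Reliability:
    for sub in Reliability:
        print(f"publisher {pub.value:11} -> subscriber {sub.value:11}: "
              f"{reliability_compatible(pub, sub)}")
```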

I expected the local costmap to be populated from the real Kinect scan data, but this did not happen. The rqt_graph here shows that the /real_kinect_scan topic is connected to the local costmap node.

I've attached an image of the TF tree from rqt_tf_tree (the transform from real_kinect_depth_frame to the map frame is set up correctly).
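Nav2's obstacle layer can only use a scan if it can transform the scan's `frame_id` into the costmap's `global_frame` (by default `odom` for the local costmap and `map` for the global one). The sketch below checks just the topology of a TF tree given as a child-to-parent dict; apart from `real_kinect_depth_frame`, the frame names and both trees are hypothetical. It ignores timestamps, which tf2 also needs to line up with the scan's header stamp, and it assumes the scan's `frame_id` matches the frame name shown in the tree.

```python
def path_to_root(frame, parent_of):
    """Follow child -> parent links until a frame with no parent is reached."""
    path = [frame]
    while path[-1] in parent_of:
        parent = parent_of[path[-1]]
        if parent in path:  # a valid TF tree never contains cycles
            raise ValueError(f"cycle in TF tree at '{parent}'")
        path.append(parent)
    return path


def frames_connected(source, target, parent_of):
    """True if both frames share a root, i.e. some TF chain links them."""
    return path_to_root(source, parent_of)[-1] == path_to_root(target, parent_of)[-1]


# Hypothetical trees (child -> parent); only real_kinect_depth_frame is from the post
good_tree = {
    "odom": "map",
    "base_link": "odom",
    "real_kinect_depth_frame": "base_link",
}
broken_tree = {
    "odom": "map",
    "base_link": "odom",
    "real_kinect_depth_frame": "kinect_link",  # kinect_link is never attached to base_link
}
print(path_to_root("real_kinect_depth_frame", good_tree))
print(frames_connected("real_kinect_depth_frame", "odom", good_tree))    # True
print(frames_connected("real_kinect_depth_frame", "odom", broken_tree))  # False
```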

I would greatly appreciate any suggestions on what might be causing this issue and how to resolve it. After this, I need to integrate real Velodyne data with the Nav2 local and global costmaps. I had previously accomplished this in my lab, as seen in this video: Real Velodyne data to nav2 map integration! Unfortunately, I lost the code and have been unable to recreate it, and I am really struggling with this.

In simulation, the Kinect depth camera data is converted to a laser scan and populates the local costmap with obstacles, as demonstrated in this video, but now I need to get this working on the real robot.
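Which converter produces `/real_kinect_scan` isn't stated here. As a rough illustration of the depth-to-scan step, the sketch below turns a single depth-image row into (angle, range) beams using the pinhole camera model, similar in spirit to what packages such as depthimage_to_laserscan do (the real package has more options, such as combining several rows). `fx` and `cx` stand for the depth camera's focal length and principal point in pixels; the toy values in the example are made up. Comparing a real depth row against this kind of output can show whether the converted scan's ranges and angles are plausible.

```python
import math


def depth_row_to_scan(depth_row, fx, cx, range_min, range_max):
    """Convert one depth-image row (metres along the optical axis) into
    (angle, range) beams in a laser frame with x forward and y left."""
    beams = []
    for u, z in enumerate(depth_row):
        # 0 or NaN means the camera returned no depth for this pixel
        if not math.isfinite(z) or z <= 0.0:
            continue
        x = (u - cx) * z / fx          # lateral offset; optical +x points right
        r = math.hypot(x, z)           # straight-line distance to the point
        if r < range_min or r > range_max:
            continue
        angle = math.atan2(cx - u, fx)  # ray angle depends only on the column
        beams.append((angle, r))
    beams.sort()                       # LaserScan beams run from angle_min upwards
    return beams


# Toy camera: 5-pixel row, focal length 2 px, principal point at column 2,
# looking at a flat wall 2 m away
for angle, r in depth_row_to_scan([2.0] * 5, fx=2.0, cx=2.0, range_min=0.1, range_max=10.0):
    print(f"{math.degrees(angle):7.2f} deg  {r:.3f} m")
```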

It would be immensely helpful if anyone has a ready-to-use tutorial for integrating real hardware sensors with Nav2 to generate a map, a local costmap, or a global costmap.

Thank you in advance for your assistance.