Normal Estimation for Lidar

Hello,

I am trying to estimate normals for a Lidar scan consisting of ~2000 points structured in 8 rings. My input is a sensor_msgs::PointCloud2 which I convert to a pcl::PointCloud<pcl::PointNormal>. The estimation is performed using PCLs NormalEstimation:

pcl::NormalEstimation<pcl::PointNormal, pcl::Normal> ne;

tree = boost::make_shared<pcl::search::KdTree<pcl::PointNormal>>();

ne.setSearchMethod( tree)

ne.setRadiusSearch( 0.3 );

ne.setInputCloud(cloud)

ne.compute(*normals)

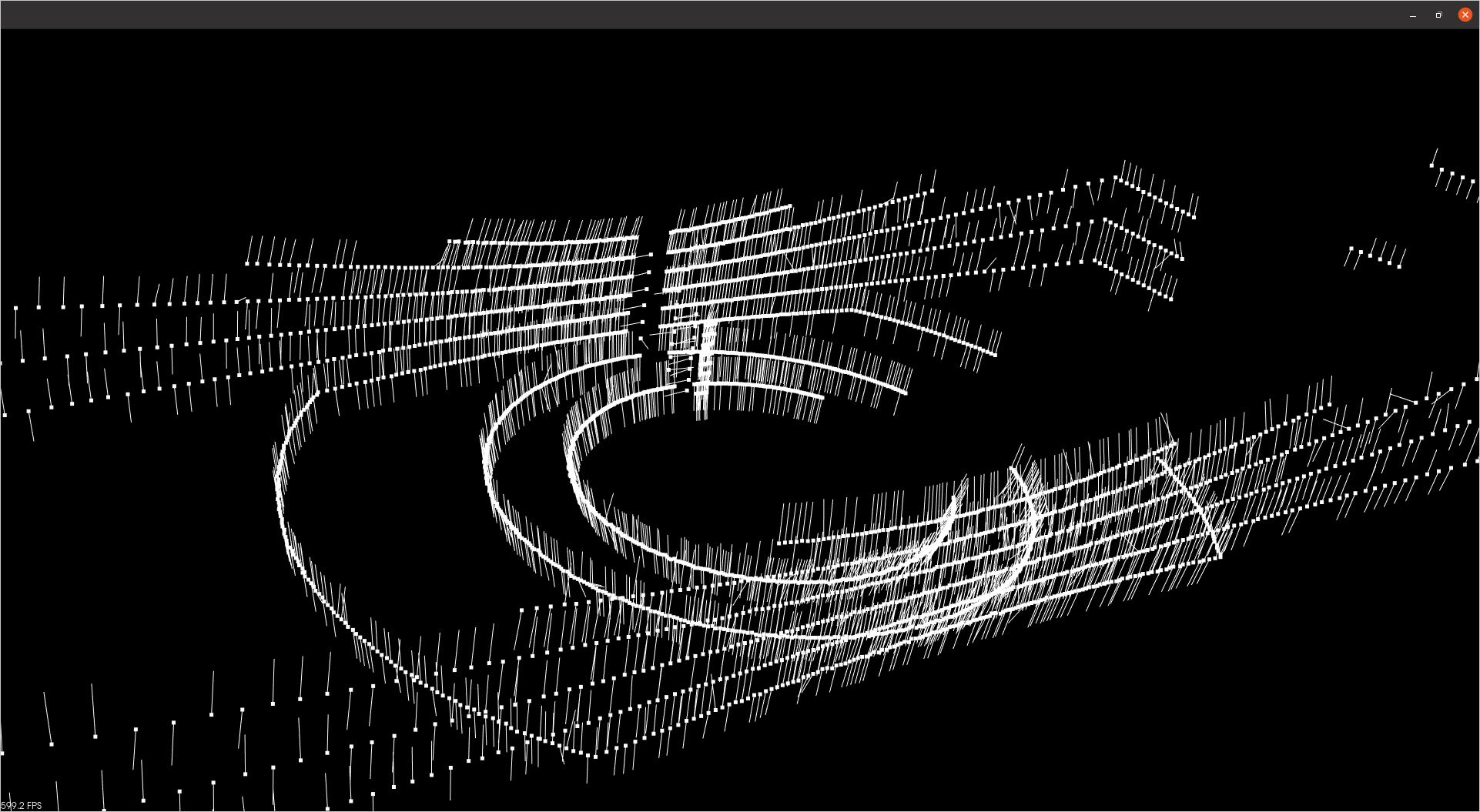

This leads to very poor results with the normals pointing in wrong directions, e.g. they should be orthogonal to the walls and not parallel (see picture).

I have played around with ne.setRadiusSearch(); and ne.setKSearch() without getting satisfying results.

Does anyone have a suggestion why this fails? It works quite well for other point clouds, e.g. obtained from depth cameras.

Two more ideas:

pcl::IntegralImageNormalEstimationseems to be a good alternative, but it needs an organized point cloud as input. My point cloud is unorganized.I can also get the scans as a

sensor_msgs/LaserScanmessage which contains the distance and angle for each point. Maybe I can use this information for the normal estimation? I could not find any solutions that work with this message...

Thanks for your ideas!

EDIT:

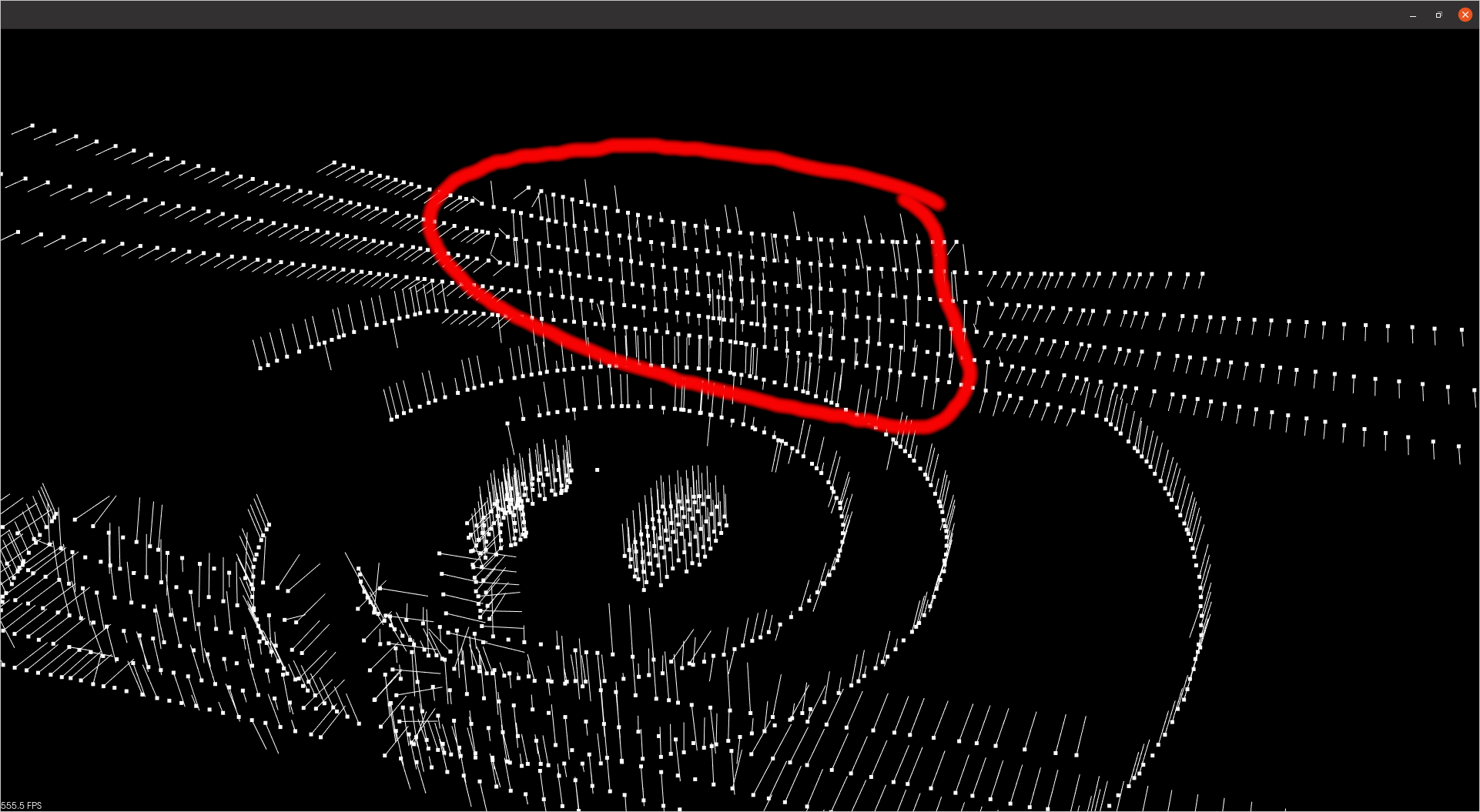

Using setKSearch(10) improves the result a lot:

Still the closer points that lie on the walls still have wrong orientations (marked red in the picture). In this area the distance between the scan rings is smaller, but I don't see how this can affect the results - K nearest neighbor search should actually not depend on the distance to the neighbors?

Still the closer points that lie on the walls still have wrong orientations (marked red in the picture). In this area the distance between the scan rings is smaller, but I don't see how this can affect the results - K nearest neighbor search should actually not depend on the distance to the neighbors?