How to compare SLAM algorithms against Gazebo simulation ground truth using a pose error metric?

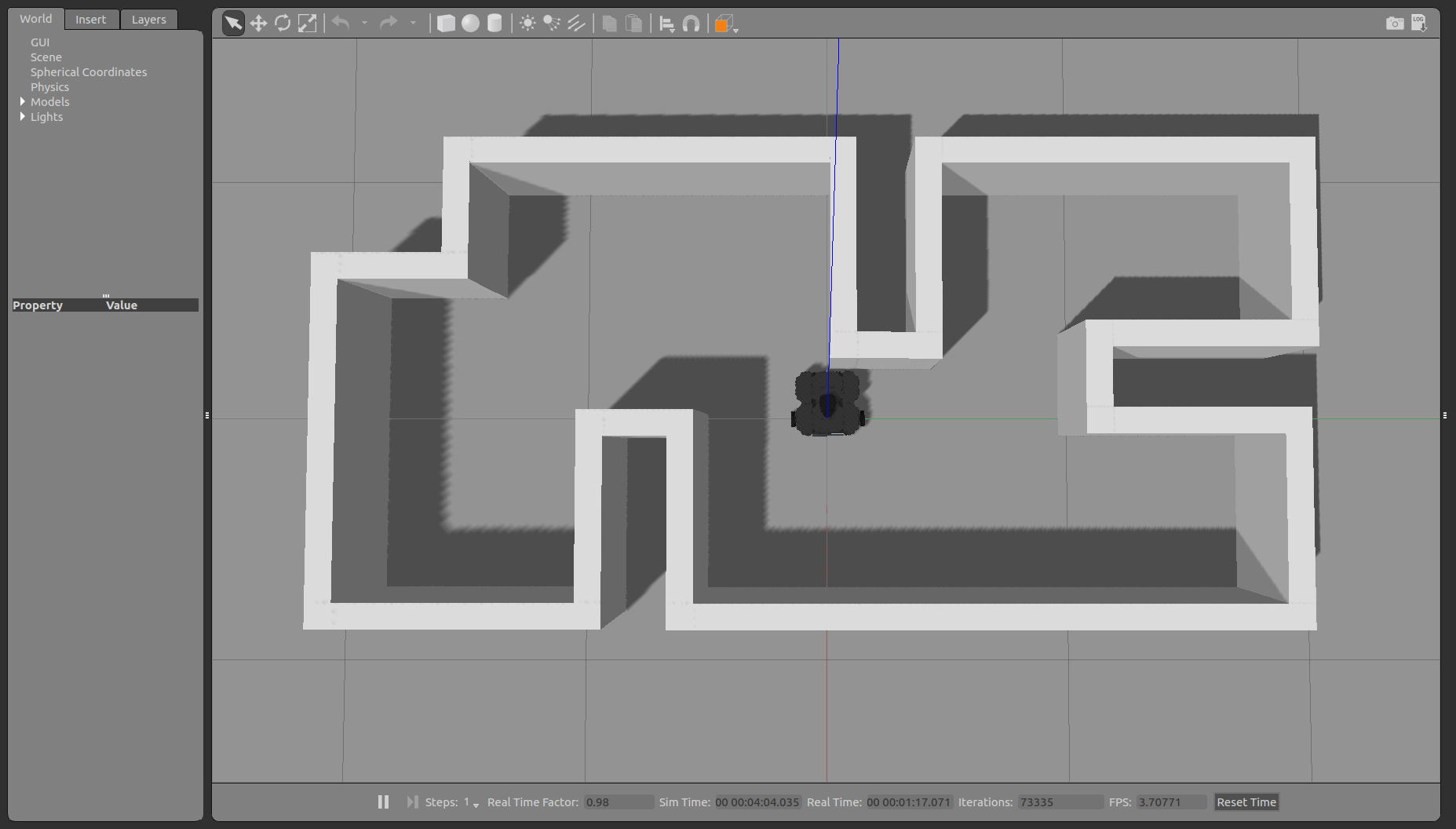

Hello, I am working with the Gazebo simulator. I have successfully created maps of the environment using gmapping, hectorSLAM, kartoSLAM, and Cartographer, and I have compared the generated SLAM maps using ICP and SSIM (the mapping part).

Now I want to compare them on localization, using odometry error or pose error. However, I have no idea about the comparison workflow (which steps I should follow to compare the SLAM algorithms using a pose error or trajectory error metric). I have tried the following benchmark package, but without success:

I hope you can help me :-)

Did you solve the issue?

Yes, I have. See https://link.springer.com/chapter/10.... and http://dx.doi.org/10.13140/RG.2.2.207...

Thanks! Your article is actually one of the main references cited in my work, and I appreciate your effort. In practice, what do you suggest as the ground truth to compare against the estimated position (which can be read from the /tf published by the slam_gmapping node)?

I teleoperate my TurtleBot3 without SLAM and record the data as a bag file, then play the bag back for slam_gmapping. The resulting /tf position (the /footprint frame relative to the /map frame) is the same as the result obtained from the /odometry topic (or the /footprint frame relative to the /odom frame) during the recording stage.

So which one is the ground truth, and which one is the estimate that should be compared against it?

I am very confused; I hope you can help me! Thanks in advance.

The easiest way is to teleoperate the TB3 without SLAM and record the topics (/odom, /tf). Then play the recorded bag file for each SLAM algorithm, plot the odom position live (like this: https://github.com/Dhaour9x/Fig2plot), and save the plots as figures. You can use them later to compute the localization error, for example with the Hausdorff distance if you choose it as the measurement method (in MATLAB you can use the HD function: https://de.mathworks.com/matlabcentra...).
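For readers without MATLAB, the Hausdorff distance between two recorded paths can also be computed in a few lines of Python. This is a minimal brute-force sketch, not the MATLAB HD function mentioned above; it assumes each trajectory has already been exported as a non-empty list of (x, y) positions in the same frame, and the sample points are made up. If SciPy is available, `scipy.spatial.distance.directed_hausdorff` provides a faster directed version.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def directed_hausdorff(a: List[Point], b: List[Point]) -> float:
    """Largest distance from any point in `a` to its nearest point in `b`.

    Both lists must be non-empty. Brute force: O(len(a) * len(b)).
    """
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff(a: List[Point], b: List[Point]) -> float:
    """Symmetric Hausdorff distance between two 2D trajectories."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


if __name__ == "__main__":
    # Hypothetical (x, y) samples in metres; replace with your recorded paths.
    ground_truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
    slam_estimate = [(0.0, 0.1), (1.0, 0.05), (2.0, 0.3)]
    print(f"Hausdorff distance: {hausdorff(ground_truth, slam_estimate):.3f} m")
```

Note that the Hausdorff distance reports only the single worst deviation and ignores timing, so it complements rather than replaces a time-synchronized per-pose error.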

Dear Riadh, yesterday I started translating your thesis to understand the methodology you follow; it is more detailed than the paper. The approach you suggested above is actually what I am doing, but I was unsure about choosing the teleoperation-stage results as the ground truth.

I also tried plotting the results of the two stages (teleoperation and SLAM) using PlotJuggler, and the ground-truth and gmapping SLAM curves stay aligned even when I zoom in. So I will try to compare the paths numerically.
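To turn the overlaid curves into numbers, one common approach is to pair each estimated pose with the ground-truth pose closest in time, then report the per-pose position error and its RMSE (often called the absolute trajectory error). The sketch below assumes both paths have been exported as time-sorted (t, x, y) tuples expressed in the same fixed frame, so it applies no rigid-body alignment; the function names, the 0.05 s association threshold, and the sample data are hypothetical.

```python
import bisect
import math
from typing import List, Tuple

# One sample: (timestamp [s], x [m], y [m]).
Stamped = Tuple[float, float, float]


def associate(gt: List[Stamped], est: List[Stamped], max_dt: float = 0.05):
    """Pair each estimated sample with the ground-truth sample closest in time.

    `gt` must be sorted by timestamp; pairs more than `max_dt` seconds apart
    are dropped.
    """
    gt_times = [s[0] for s in gt]
    pairs = []
    for e in est:
        i = bisect.bisect_left(gt_times, e[0])
        # The nearest timestamp is one of the neighbours of the insertion point.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(gt)]
        if not candidates:
            continue
        j = min(candidates, key=lambda k: abs(gt_times[k] - e[0]))
        if abs(gt_times[j] - e[0]) <= max_dt:
            pairs.append((gt[j], e))
    return pairs


def position_errors(pairs) -> List[float]:
    """Euclidean (x, y) error for every associated pair."""
    return [math.hypot(g[1] - e[1], g[2] - e[2]) for g, e in pairs]


def rmse(errors: List[float]) -> float:
    """Root-mean-square of the per-pose errors (list must be non-empty)."""
    return math.sqrt(sum(err * err for err in errors) / len(errors))


if __name__ == "__main__":
    ground_truth = [(0.0, 0.0, 0.0), (0.1, 0.1, 0.0), (0.2, 0.2, 0.0)]
    slam = [(0.01, 0.0, 0.02), (0.11, 0.1, 0.01), (0.21, 0.23, 0.0)]
    errors = position_errors(associate(ground_truth, slam))
    print("per-pose errors [m]:", [round(err, 3) for err in errors])
    print(f"RMSE: {rmse(errors):.4f} m")
```

If the two paths start in different frames, they would first need to be aligned before this comparison is meaningful.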

Many thanks for your detailed support, and sorry for the long explanation. I think these words may be useful for newcomers to this community in the future.

Thanks a lot.

This may be helpful: http://www.rawseeds.org/home/ or http://ais.informatik.uni-freiburg.de...

Dear Riadh,

Please, regarding the framework: if I am not wrong, the pose of the /footprint frame relative to the fixed /odom frame is the pose estimated by fusing the encoders and the IMU. Is that true?
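Whichever frame pair is used, a tf transform (for example /footprint relative to /odom) carries a translation and a quaternion, so a planar pose comparison usually needs the yaw angle extracted from the quaternion, and heading errors need wrapping into (-π, π]. A minimal standard-library sketch; the function names are hypothetical, and the yaw formula assumes a unit quaternion in (x, y, z, w) order, as in geometry_msgs/Quaternion.

```python
import math
from typing import Sequence, Tuple


def quaternion_to_yaw(x: float, y: float, z: float, w: float) -> float:
    """Rotation about the z axis (radians) of a unit quaternion (x, y, z, w)."""
    return math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))


def pose2d(translation: Sequence[float], rotation: Sequence[float]) -> Tuple[float, float, float]:
    """Planar (x, y, yaw) from a translation (x, y, z) and quaternion (x, y, z, w)."""
    return translation[0], translation[1], quaternion_to_yaw(*rotation)


def heading_error(yaw_a: float, yaw_b: float) -> float:
    """Difference yaw_a - yaw_b wrapped into (-pi, pi]."""
    d = yaw_a - yaw_b
    return math.atan2(math.sin(d), math.cos(d))


if __name__ == "__main__":
    # Hypothetical transform: 1 m ahead, rotated 90 degrees about z.
    half = math.pi / 4
    print(pose2d((1.0, 0.0, 0.0), (0.0, 0.0, math.sin(half), math.cos(half))))
```

Wrapping matters because two nearly identical headings on either side of ±π would otherwise appear almost 2π apart.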

For scientific validation, is it correct to choose the filtered output of the odometry system (encoders, IMU, etc.) as the ground truth? What about the uncertainty of this system? I see that most evaluation articles follow the same methodology.

However, my supervisor questions the validity of this methodology and asks: is it correct to compare the pose estimated by gmapping SLAM with another estimated pose (a filtered, or only relatively accurate, odometry signal)?

I look forward to hearing your comments on this situation.

Thanks in advance.

Kind regards.

Since odometry alone is not accurate enough, you can use sensor fusion (odom + IMU, or odom + camera) or correct the odom error with AR tags, and use the result as ground truth. You can also run a small benchmark to measure the odom deviation and correct it manually. The environment (dynamic or static, open or closed) has a big impact on which sensors are suitable for creating the ground truth. To answer your questions:

- /odom is just the encoders, without the IMU.

- For scientific validation, it is correct to use the odom path as the ground truth, but you must benchmark the odometry to check the uncertainty of this system (the deviation error) and correct it manually.

There are other techniques for generating ground truth that are more accurate than odom, but they are much more complex and time-consuming.

- The sensors are not linear, so it ...
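A small odometry benchmark of the kind suggested above can be as simple as a closed-loop run: drive the robot around a loop that physically ends where it started, then check how far the odom position ends up from its start. The sketch below computes that end-point error and expresses it as a percentage of the distance travelled; it is one possible deviation measure, not necessarily the procedure the answer has in mind, and the function names and square-loop data are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def path_length(points: List[Point]) -> float:
    """Total distance travelled along consecutive (x, y) odom positions."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def loop_closure_drift(points: List[Point]) -> Tuple[float, float]:
    """End-point error [m] and drift [% of distance] for a closed-loop run.

    Assumes the robot physically returned to its starting position, so any
    gap between the first and last odom position is accumulated odometry error.
    """
    distance = path_length(points)
    if distance == 0.0:
        raise ValueError("the robot did not move")
    error = math.dist(points[0], points[-1])
    return error, 100.0 * error / distance


if __name__ == "__main__":
    # Hypothetical odom readings for a 1 m x 1 m square driven back to the start.
    odom = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0), (0.0, 0.04)]
    error, percent = loop_closure_drift(odom)
    print(f"end-point error: {error:.3f} m, drift: {percent:.2f} % of distance")
```

Because only the end point is checked, errors that cancel along the way stay hidden; repeating the loop in both directions can reveal systematic errors that happen to cancel in a single direction.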
