Can T265 Odometry Pose be centred on robot rather than sensor when using wheel Odometry input?

We've set up our robot to use the T265 sensor for odometry using the official realsense-ros package (foxy branch). We've configured the launch file to fuse input from the T265 camera with wheel odometry from our motor controllers.

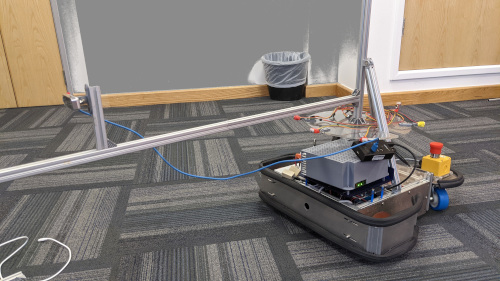

It wasn't clear to me whether the Odometry output by the realsense node is centred on the robot or the T265 sensor when using wheel odometry input. So we did a quick experiment putting the sensor 0.7m in front of the robot out on a boom.

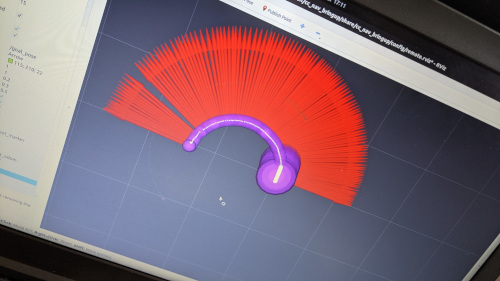

We spun the robot on the spot and the Odometry traces a circle around the robot, so the Odometry is evidently centred on the T265 sensor, not the robot.

Is there a setting that I have missed that allows the Odometry output to be centred on the robot (i.e. in base_link)? Otherwise we have to transform the Pose and Twist in a separate node, which is a pain.

Update:

The relevant part of our TF tree is the following (ignoring other sensors, like an RGB camera, which also come off base_link):

map -> odom -> base_link -> odom_sensor

map->odom for this test is a static zero offset and rotation transform. odom is the frame in which the robot moves, starting from x=0, y=0, rotation=0. base_link is the robot frame and is centred between the differential drive wheels. odom_sensor is the frame of the T265, which is normally ~10cm behind the drive wheels but for this test was 70cm in front on a boom.

We have configured the T265 node to output Odometry with the Pose in odom (header.frame_id = odom). It is not clear whether the Twist (child_frame_id) should be in odom_sensor or base_link, but our experiments seem to imply that the sensor is outputting Twists in odom_sensor. We therefore need to offset the Pose in odom to account for the translation between base_link and odom_sensor, and also transform the Twist so that, for example, if you are spinning on the spot, the Twist in base_link has zero linear velocity and a non-zero angular velocity in z.
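To make the required correction concrete, here is a minimal planar sketch of the rigid-body arithmetic, not the realsense-ros API. It assumes motion in the x-y plane only, that odom_sensor has the same orientation as base_link (a pure translation offset), and that the Twist is expressed in the sensor's own frame. The function name `sensor_to_base` and the tuple layouts are hypothetical, chosen just for this illustration.

```python
import math


def sensor_to_base(pose_sensor, twist_sensor, offset):
    """Re-centre a planar sensor odometry sample on base_link.

    pose_sensor:  (x, y, yaw) of odom_sensor expressed in odom.
    twist_sensor: (vx, vy, wz) of odom_sensor expressed in its own frame.
    offset:       (dx, dy) position of odom_sensor in base_link
                  (assumed: no rotation between the two frames).
    """
    x_s, y_s, yaw = pose_sensor
    vx_s, vy_s, wz = twist_sensor
    dx, dy = offset

    # Pose: subtract the lever arm, rotated from base_link into odom.
    c, s = math.cos(yaw), math.sin(yaw)
    x_b = x_s - (c * dx - s * dy)
    y_b = y_s - (s * dx + c * dy)

    # Twist: v_base = v_sensor - w x d, where in 2D w x d = wz * (-dy, dx).
    vx_b = vx_s + wz * dy
    vy_b = vy_s - wz * dx

    # Angular velocity and yaw are the same for every point on a rigid body.
    return (x_b, y_b, yaw), (vx_b, vy_b, wz)


# Boom experiment: sensor 0.7 m ahead of base_link, robot spun by 90 degrees
# on the spot at 0.5 rad/s, so the sensor sweeps sideways at 0.35 m/s.
pose, twist = sensor_to_base(
    (0.0, 0.7, math.pi / 2), (0.0, 0.35, 0.5), (0.7, 0.0)
)
print(pose, twist)
```

With these inputs the base_link position stays at the origin (up to floating-point rounding) and the linear velocity cancels, leaving only the angular velocity in z, which is the behaviour we expect when spinning on the spot.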

We currently feed Odometry from the motor controllers into the T265 node with the Twist in base_link (i.e. linear velocity is always along x and angular velocity along z). However, I wonder if that is incorrect, as I note that the node only uses linear velocity.

The odom frame does not work in the way you are describing it. Please tell us what your transform tree frame names are along a path from the "root_node_of_tree" down to the t265_sensor.

Thanks for replying, I've updated my question with details of our reference frames. I hope it makes sense.

For the tf tree please generate an image with rqt_tf_tree, it is easier to read. Also please attach a sample odometry message (e.g. output of rostopic echo -1 /odom) for the situation of the second image.

Nice update to your question. You explained it very clearly.