[MoveIt] Move the gripper from its current position to a dynamic target position, both given relative to the camera

Hello there,

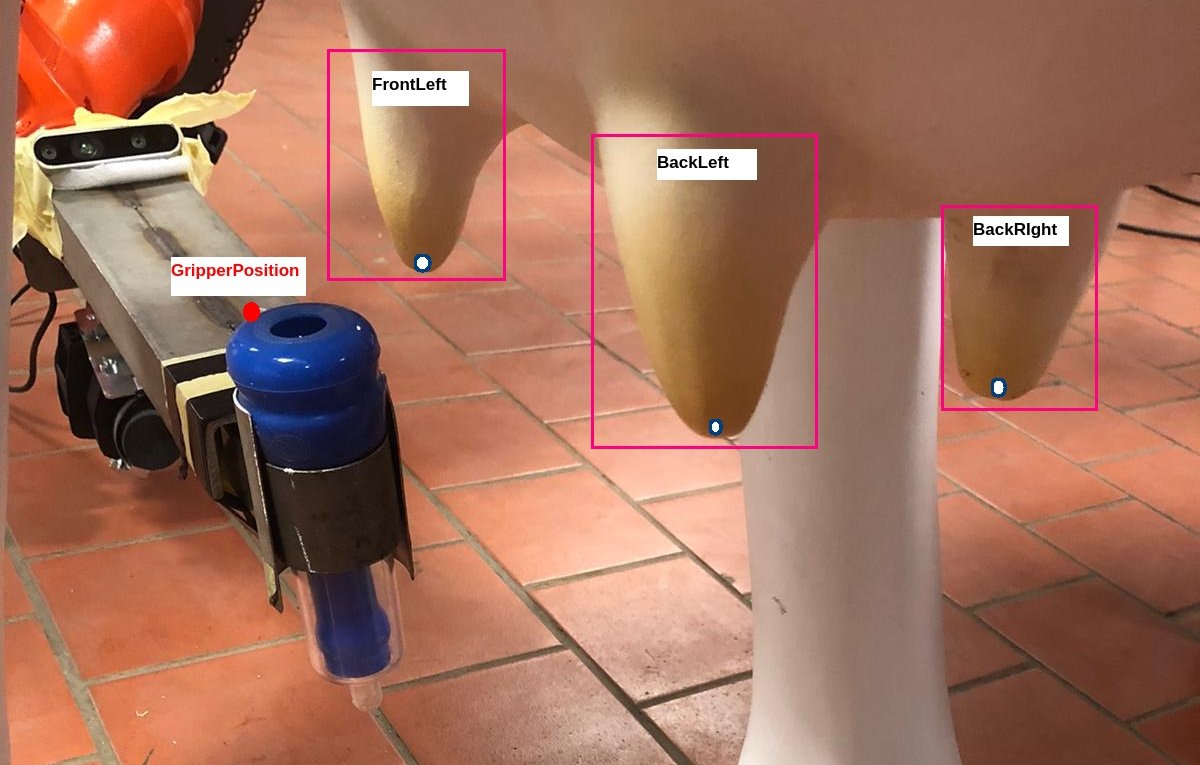

I can identify the following positions from the camera:

- Gripper position

- BackLeft

- BackRight

- FrontLeft

Currently, I am getting these points from the camera. Now I want to move the gripper point to a particular teat position every time. Example: I will have two point-cloud points, (1) the gripper and (2) any teat location (let's say back right). Based on the 3D coordinates of both points, how can I move my robot arm so that the gripper point touches the back-right teat point?

To sum up my question: I have the gripper coordinate and a teat coordinate from the point cloud. How can I move the robot arm so that the gripper point goes to the teat position?

A more in-depth explanation of the issue:

Currently, I am using hand-eye calibration: I identify the teat and, based on the hand-eye calibration, move my robotic arm relative to the world (robot base) frame. It is working fine for now.
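As a rough illustration of the calibrated pipeline described above, the sketch below maps a teat position from the camera frame into the robot base frame with a 4x4 homogeneous transform. The transform values, the point, and the names `T_BASE_CAM` and `transform_point` are made-up placeholders, not the real calibration or code.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def transform_point(T_base_cam: Matrix, p_cam: Sequence[float]) -> List[float]:
    """Apply a 4x4 homogeneous transform to a 3D point given in the camera frame."""
    x, y, z = p_cam
    p_h = [x, y, z, 1.0]  # homogeneous coordinates
    # Only the first three rows are needed to get the transformed x, y, z.
    return [sum(T_base_cam[r][c] * p_h[c] for c in range(4)) for r in range(3)]


# Hypothetical hand-eye calibration result (assumption for illustration):
# camera rotated 90 degrees about the base Z axis and offset by (0.5, 0.0, 1.0) m.
T_BASE_CAM: Matrix = [
    [0.0, -1.0, 0.0, 0.5],
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 0.0, 1.0],
]

# Hypothetical teat position detected in the camera frame (metres).
teat_cam = [0.2, 0.1, 0.8]

# Position in the robot base frame, which is what a motion planner target would use.
teat_base = transform_point(T_BASE_CAM, teat_cam)
```

If the camera is moved, `T_BASE_CAM` is no longer valid, which is why this approach needs a new calibration each time.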

I want to get rid of hand-eye calibration. Instead, my camera should be able to detect the gripper and the teat (which I can do). How can I move my gripper to the teat position if the camera detects both? I thought of this because animals are unpredictable and can kick the camera, and farmers cannot afford to redo the hand-eye calibration every time the camera moves.
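To make the idea above concrete, here is a minimal sketch of the quantity that is available when both points are detected by the same camera: the offset from the gripper point to the teat point, expressed in the camera frame. The coordinates and function names are hypothetical and only serve as an example; this is not a full solution.

```python
import math
from typing import List, Sequence


def offset_in_camera_frame(gripper_cam: Sequence[float],
                           target_cam: Sequence[float]) -> List[float]:
    """Vector from the gripper point to the target point, in the camera frame."""
    return [t - g for g, t in zip(gripper_cam, target_cam)]


def norm(v: Sequence[float]) -> float:
    """Euclidean length of a 3D vector."""
    return math.sqrt(sum(c * c for c in v))


# Hypothetical detections from the point cloud (metres, camera frame).
gripper_cam = [0.10, -0.05, 0.60]
back_right_cam = [0.25, 0.05, 0.90]

# How far, and in which camera-frame direction, the gripper still has to move.
offset = offset_in_camera_frame(gripper_cam, back_right_cam)
remaining_distance = norm(offset)
```

Note that the offset is still expressed in camera axes. Subtracting the two points cancels the camera's position, but turning the offset into a motion in the robot base frame would still need the camera's orientation relative to the base.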

I'm not sure I understand your question. I haven't seen a question mark anywhere.

Also, these pictures crack me up every time, so make sure to keep including them. Thanks.

Thanks, @fvd, for the feedback on my question. I have updated it with more detail, and I think you will be able to understand the question now. Thanks once again for your time.

@Ranjit: why did you remove the images?

I thought they were not of much use. If you want me to put them back, I can do it.

Your references to:

don't really make sense any more right now, nor the references to "teat" or what you are trying to do in general.

I would indeed suggest reverting the removal.

Thanks for the suggestion. Yes, you are right and I have done it.