Copy sensor_msgs/Image data to Ogre Texture

Hello everyone. I have been trying to manually copy the contents of an image message into a dynamic Ogre texture buffer. I want to display the images from a given topic on a plane in the RViz viewport.

I am aware of both the rviz_textured_quads solution by lucasw and the ROSImageTexture class. However, neither performs well enough for my use case: the first is inefficient, and the second performs an extra conversion that I am hoping to skip.

I managed to achieve what I wanted by first converting the Image message to a cv_bridge::CvImagePtr. However, I was wondering whether I could pull the image data directly from the image message instead.

Here is my definition of the Texture:

    image_texture_ = texture_manager.createManual("DynamicImageTexture",
                                                  resource_group_name,
                                                  Ogre::TEX_TYPE_2D,
                                                  width_, height_, 0,
                                                  Ogre::PF_BYTE_RGB,
                                                  Ogre::TU_DYNAMIC_WRITE_ONLY_DISCARDABLE);

And here is my update function:

    Ogre::HardwarePixelBufferSharedPtr buffer = image_texture_->getBuffer(0,0);
    buffer->lock(Ogre::HardwareBuffer::HBL_DISCARD);
    const Ogre::PixelBox &pb = buffer->getCurrentLock();

    // Obtain a pointer to the texture's buffer
    uint8_t *data = static_cast<uint8_t*>(pb.data);

    // Copy the data
    memcpy(
        (uint8_t*)pb.data,
        &image_ptr_->data[0],
        sizeof(uint8_t)*image_ptr_->data.size()
    );

    buffer->unlock();
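One thing that might be worth checking here (an assumption on my part, not something confirmed in this thread) is whether the locked buffer really has the same layout as the message data. A single memcpy only works if both sides use the same number of bytes per pixel and the same row length. The sketch below is plain C++ with no Ogre or ROS dependency and copies row by row instead. The function name and all parameters are hypothetical: `src_step` would correspond to the `step` field of sensor_msgs/Image, while `dst_pitch` and `dst_bpp` would have to be derived from the locked `Ogre::PixelBox` (for example from `rowPitch` and `Ogre::PixelUtil::getNumElemBytes(pb.format)`). It does not reorder channels, so an RGB/BGR mismatch would still need separate handling.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical helper: copy an image row by row between two buffers that may
// differ in row length and in bytes per pixel. All sizes are in bytes.
//   src_step  - bytes per source row (e.g. the Image message's `step`)
//   src_bpp   - bytes per source pixel (3 for an rgb8 image)
//   dst_pitch - bytes per destination row, including any padding
//   dst_bpp   - bytes per destination pixel (may be 4 even if RGB was requested)
void copyRows(const std::uint8_t* src, std::size_t src_step, std::size_t src_bpp,
              std::uint8_t* dst, std::size_t dst_pitch, std::size_t dst_bpp,
              std::size_t width, std::size_t height)
{
  assert(src != nullptr && dst != nullptr);
  const std::size_t shared = std::min(src_bpp, dst_bpp);

  for (std::size_t y = 0; y < height; ++y) {
    const std::uint8_t* s = src + y * src_step;
    std::uint8_t* d = dst + y * dst_pitch;

    if (src_bpp == dst_bpp) {
      // Same pixel size: one memcpy per row is enough.
      std::memcpy(d, s, width * src_bpp);
    } else {
      // Different pixel size: copy pixel by pixel and leave any extra
      // destination bytes (e.g. an alpha or padding byte) untouched.
      for (std::size_t x = 0; x < width; ++x) {
        std::memcpy(d + x * dst_bpp, s + x * src_bpp, shared);
      }
    }
  }
}
```

If the two layouts turn out to be identical, this reduces to the same bytes being written as with the single memcpy above, so it should at least help narrow down whether the layout is the problem.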

Am I doing something wrong when copying the data? For reference, here are the results.

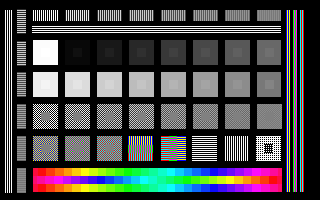

Copying the data from the CV Pointer:

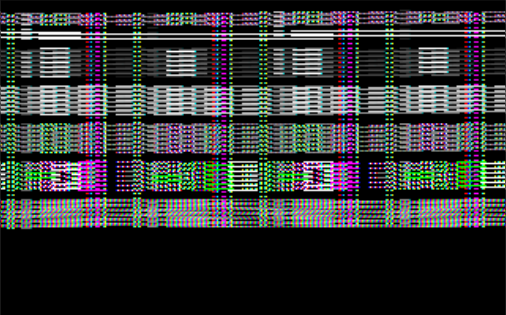

And the image buffer directly:

Please do not link to off-site image hosters. Images tend to disappear that way.

Attach your screenshots to your question directly. I've given you sufficient karma.

Updated, thanks a lot for the permissions.