Incorrect Bounding Boxes using pcap file with 3D Perception Stack of Autoware.Auto

Hi, I have been trying to run the 3D perception stack demo (https://autowarefoundation.gitlab.io/...), but I seem to be getting incorrect bounding boxes (see video below). I ran the demo in an ADE environment using the .aderc-amd64-foxy file. There seems to be a similar issue here, but in my case I am not using the LGSVL simulator. To run the demo, I used the following commands:

Terminal 1:

ade$ udpreplay -r -1 route_small_loop_rw.pcap

Terminal 2:

ade$ source /opt/AutowareAuto/setup.bash

ade$ ros2 run velodyne_nodes velodyne_cloud_node_exe --model vlp16 --ros-args --remap __ns:=/lidar_front --params-file /opt/AutowareAuto/share/velodyne_nodes/param/vlp16_test.param.yaml

Terminal 3:

ade$ source /opt/AutowareAuto/setup.bash

Terminal 4:

ade$ source /opt/AutowareAuto/setup.bash

ade$ ros2 launch autoware_demos lidar_bounding_boxes_pcap.launch.py

This is my current rviz visualization: https://youtu.be/ANKaX4vJIFE. The bounding boxes seem to be incorrect, and I am not sure why. Any input would be greatly appreciated. Thank you.

Can you be more specific? What do you mean by "seem to be incorrect"? Maybe a screenshot would help.

Yes, sorry about that. Here is a video of my current visualization: https://youtu.be/ANKaX4vJIFE.

Playing back route_small_loop_rw.pcap does show the road surface being classified as non-ground points, which explains why the Euclidean clustering algorithm creates bounding boxes around it.

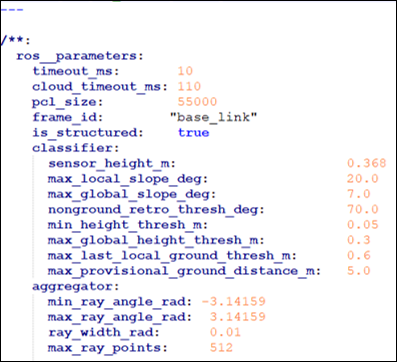

The question is: are the parameters for the 3D perception stack set according to the actual location and orientation of the sensors when the route_small_loop_rw.pcap file was captured? If yes, then what explains the roads being detected as non-ground objects? If no, is there another pcap file that could be used to test the perception stack with the current parameter values?
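To illustrate why a mismatch between the configured and the actual sensor pose could produce this symptom, here is a minimal sketch in Python. It is not the ground classifier used by Autoware.Auto; it uses a simple height threshold as a stand-in, and the function names, the 1.5 m mounting height, the 5 degree pitch error, and the 0.2 m tolerance are all made-up values for illustration.

```python
import math


def to_base_frame(point, sensor_height, pitch_rad):
    """Rotate a sensor-frame point about the y axis by pitch_rad,
    then shift it up by the configured sensor height (hypothetical helper)."""
    x, y, z = point
    c, s = math.cos(pitch_rad), math.sin(pitch_rad)
    return (c * x + s * z, y, -s * x + c * z + sensor_height)


def is_ground(point_base, tolerance=0.2):
    """Stand-in ground test: a point is ground if it lies near z = 0."""
    return abs(point_base[2]) <= tolerance


# A flat-road return 10 m ahead of a sensor that is really mounted
# 1.5 m above the road with no pitch (assumed numbers).
road_point = (10.0, 0.0, -1.5)

# Configured pose matches the real mounting: the road lands at z = 0.
matched = to_base_frame(road_point, sensor_height=1.5, pitch_rad=0.0)

# Configured pose is off by 5 degrees of pitch: the road is displaced in z.
mismatched = to_base_frame(road_point, sensor_height=1.5,
                           pitch_rad=math.radians(5.0))

print("matched pose   -> ground:", is_ground(matched))
print("mismatched pose -> ground:", is_ground(mismatched))
```

With these assumed numbers, a pitch error of a few degrees moves a road point 10 m away by most of a metre in height, so a height-based test would no longer treat it as ground. Whether this is what happens with route_small_loop_rw.pcap depends on the real mounting parameters, which I don't know.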

Also, I noticed that the orientation of the rear lidar is reversed when testing with the provided file, route_small_loop_rw.pcap. I had to rotate it by 180 degrees by changing the quaternion in /opt/AutowareAuto/share/point_cloud_filter_transform_nodes/param/vlp16_sim_lexus_filter_transform.param.yaml.
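For anyone who wants to reproduce the 180 degree rotation, here is a minimal sketch of how the new quaternion can be computed. It assumes the quaternion is stored in (x, y, z, w) order and that the flip is a yaw rotation about the parent frame's z axis; the helper names and the identity starting value are made up for illustration, so substitute the values from your own param file.

```python
import math


def quat_multiply(q1, q2):
    """Hamilton product of two quaternions given in (x, y, z, w) order."""
    x1, y1, z1, w1 = q1
    x2, y2, z2, w2 = q2
    return (
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
    )


def yaw_quaternion(yaw_rad):
    """Quaternion for a rotation of yaw_rad about the z axis."""
    half = yaw_rad / 2.0
    return (0.0, 0.0, math.sin(half), math.cos(half))


def rotate_yaw_180(q):
    """Pre-multiply q by a 180 degree yaw, i.e. rotate about the parent z axis."""
    return quat_multiply(yaw_quaternion(math.pi), q)


# Placeholder starting orientation (identity); use the values from the
# param file instead.
original = (0.0, 0.0, 0.0, 1.0)
flipped = rotate_yaw_180(original)
print("x=%.6f y=%.6f z=%.6f w=%.6f" % flipped)
```

Pre-multiplying rotates about the parent frame's z axis. If the flip should instead be about the sensor's own z axis, swap the argument order in quat_multiply. The two only differ when the original orientation has roll or pitch.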