Navigation stack planning global path through large unknown wall segments of map

I'm running a Turtlebot type platform with a fairly large map of a building, about 70x100m. Using the navigation stack to go to waypoints works almost all the time when the destination is close by and the path is relatively straight, but I'm getting some weird behaviors when planning long paths across the shape of the map.

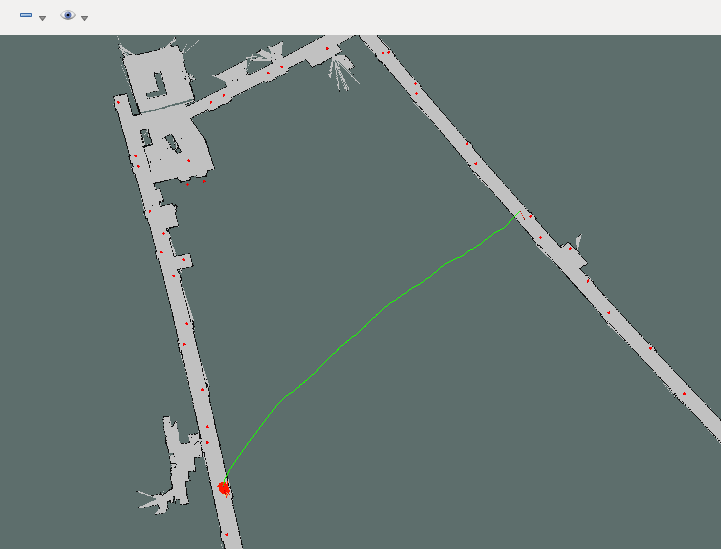

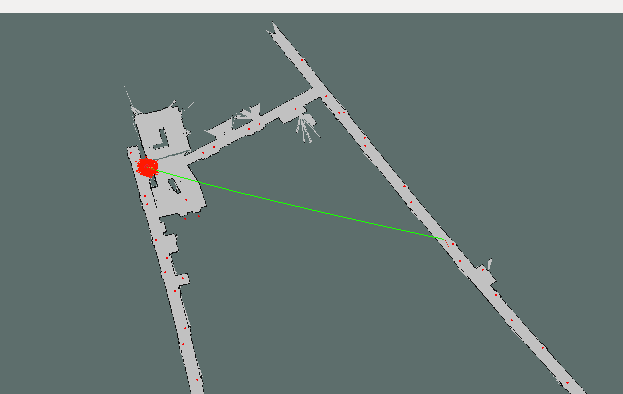

The map is a U shape, when trying to plan a plan from one side to the other, the global path basically takes a straight line across the U, going through both walls, and completely ignoring the known open space available to it on the map.

The other interesting behavior I saw was it chose to navigate down one hallway instead of crossing to the other, in this situation below. This photo isn't the path from when it had that issue, but instead of cutting across and going down the right side, the robot went down the left hallway then stopped roughly perpendicular to the destination and tried to plan through the wall, which it obviously couldn't.

It seems to me that, given there's a global map with known open space, the navigation stack should be putting out a path that goes through that empty space, following the hallways rather than cutting across. It also seems to me that the stack shouldn't be trying to plan through a known blocked space, the wall on both sides. Am I correct in these two, or am I misunderstanding how the stack works?

One suggestion from another question suggested turning off allow_unknown in the path planner, but there are areas of my map, mainly doorways, where I need to use unknown space to get to some destinations.

I've put my configurations below, but I'm a bit at a loss of what to do to resolve this... Any suggestions?

base_local_planner_params.yaml:

TrajectoryPlannerROS:

max_vel_x: 0.45

min_vel_x: 0.1

max_vel_theta: 1.5

min_in_place_vel_theta: 0.2

acc_lim_theta: 3.2

acc_lim_x: 2.5

acc_lim_y: 2.5

holonomic_robot: false

yaw_goal_tolerance: 0.3

xy_goal_tolerance: 0.2

latch_xy_goal_tolerance: true

costmap_common_params.yaml:

obstacle_range: 2.5

raytrace_range: 15.0

#footprint: [[x0, y0], [x1, y1], ... [xn, yn]]

robot_radius: 0.22

inflation_radius: 0.22

observation_sources: laser_scan_sensor

laser_scan_sensor: {sensor_frame: laser, data_type: LaserScan, topic: scan, marking: true, clearing: true}

recovery_behaviors.yaml:

reovery_behavior_enabled: true

recovery_behaviors:

- name: 'conservative_reset'

type: 'clear_costmap_recovery/ClearCostmapRecovery'

- name: 'aggressive_reset'

type: 'clear_costmap_recovery/ClearCostmapRecovery'

conservative_reset:

reset_distance: 3.0

aggressive_reset:

reset_distance: 0.0

global_costmap_params.yaml:

global_costmap:

global_frame: /map

robot_base_frame: base_link

update_frequency: 5.0

static_map: false

width: 200

local_costmap_params.yaml:

local_costmap:

global_frame: odom

robot_base_frame: base_link

update_frequency: 5.0

publish_frequency: 2.0

static_map: false

rolling_window: true

width: 6.0

height: 6.0

resolution: 0.05

obstacle_layer:

observation_sources: "base_scan"

base_scan: {data_type: LaserScan, sensor_frame: laser, clearing: true, marking: true, topic: /scan}

height: 200

The robot is running Ubuntu 16.04 with Kinetic onboard an Odroid XU4.

Even though without

allow_unknownparameter set, it shouldn't go through an obstacle, through a wall. There are couple of things to check. What is yourrobot_radiusorfootprintparameter under costmap params? The default should be enough to avoid planning through walls. Also the picture resolution is low in your question, so I can't tell if there are empty/unoccupied pixels in your walls. About doorways, while mapping, you should keep the doors you want to use open so the robot could plan through.Robot radius is 0.22m. Which is correct to the size of the robot. I checked the segment of the wall where it was trying to path plan through and there was a line of black pixels at least 1 pixel wide across the entire region, so it's not like there was a gap there. Would the black region need to be thicker? I would think that the path planner would register any black pixels as an obstacle, but who knows...

If the

robot_radiusalso set under global costmap, then it's alright. I suggest re-mapping all the area by covering all the space you need to travel, and disablingallow_unknown. I also suggest looking at my answer in this question: #q217360I understand that, so make the unknown space untraversable, but there's two situations where I think I need to use unknown space as an optional barrier. There's a section of sliding wall that can completely open or cover one room, and there are two elevator shafts that are normally closed, but may open. For these, I thought it was best to use the unknown space, but maybe I'm wrong. Will the planner avoid the unknown space more if the cost is above 0 but less than lethal (254)?

No it will plan through unknown space according to the value. I previously recorded elevator doors as empty space and it was working fine. But if your localization performance is poor, it might affect it slightly. But also if you think about it, there's no difference between a person's leg being detected by laser sensor and the elevator door being detected by laser as an obstacle on the robot's way.

I guess that's try, I'll have to try that out and see how it works...