Hi,

If you want to play PCAP data on ROS, see this page:

https://dominoc925.blogspot.com/2019/...

For an example with RTAB-Map (>=0.20.7 built with libpointmatcher support), based on this ouster example, you can try the following:

1) Play a PCAP (I tried the 1.4 GB one):

roslaunch velodyne_pointcloud 32e_points.launch pcap:=$HOME/Downloads/velodyne/2016-05-28hdl32.pcap read_once:=true rpm:=1200

2) Add a base_link to make x-axis of the car forward (lidar is looking right)

rosrun tf static_transform_publisher 0 0 0 -1.570796327 0 0 base_link velodyne 100

3) Start mapping:

roslaunch rtabmap_ros rtabmap.launch \

use_sim_time:=false \

depth:=false \

subscribe_scan_cloud:=true \

frame_id:=base_link \

scan_cloud_topic:=/velodyne_points \

scan_topic:=/disabled \

scan_cloud_max_points:=34750 \

scan_cloud_assembling:=true \

scan_cloud_assembling_time:=0.5 \

scan_cloud_assembling_voxel_size:=0.5 \

icp_odometry:=true \

approx_sync:=false \

args:="-d \

--RGBD/CreateOccupancyGrid false \

--RGBD/ProximityMaxGraphDepth 0 \

--RGBD/ProximityPathMaxNeighbors 5 \

--RGBD/LocalRadius 30 \

--RGBD/ProximityMaxPaths 1 \

--Rtabmap/DetectionRate 0 \

--Icp/PM true \

--Icp/VoxelSize 0.5 \

--Icp/MaxTranslation 10 \

--Icp/MaxCorrespondenceDistance 1.5 \

--Icp/PMOutlierRatio 0.7 \

--Icp/Iterations 30 \

--Icp/PointToPlane true \

--Icp/PMMatcherKnn 3 \

--Icp/PMMatcherEpsilon 1 \

--Icp/PMMatcherIntensity true \

--Icp/Epsilon 0.0001 \

--Icp/PointToPlaneK 10 \

--Icp/PointToPlaneRadius 0 \

--Icp/CorrespondenceRatio 0.2 \

--Icp/PointToPlaneGroundNormalsUp 0.8 \

--Icp/PointToPlaneMinComplexity 0.0" \

odom_args:="\

--Odom/ScanKeyFrameThr 0.7 \

--OdomF2M/ScanMaxSize 10000 \

--OdomF2M/ScanSubtractRadius 0.5 \

--Icp/Iterations 10 \

--Icp/CorrespondenceRatio 0.01 \

--Icp/MaxTranslation 2 \

--Icp/PMOutlierRatio 0.4 \

--Icp/PointToPlane false"

Here are some results:

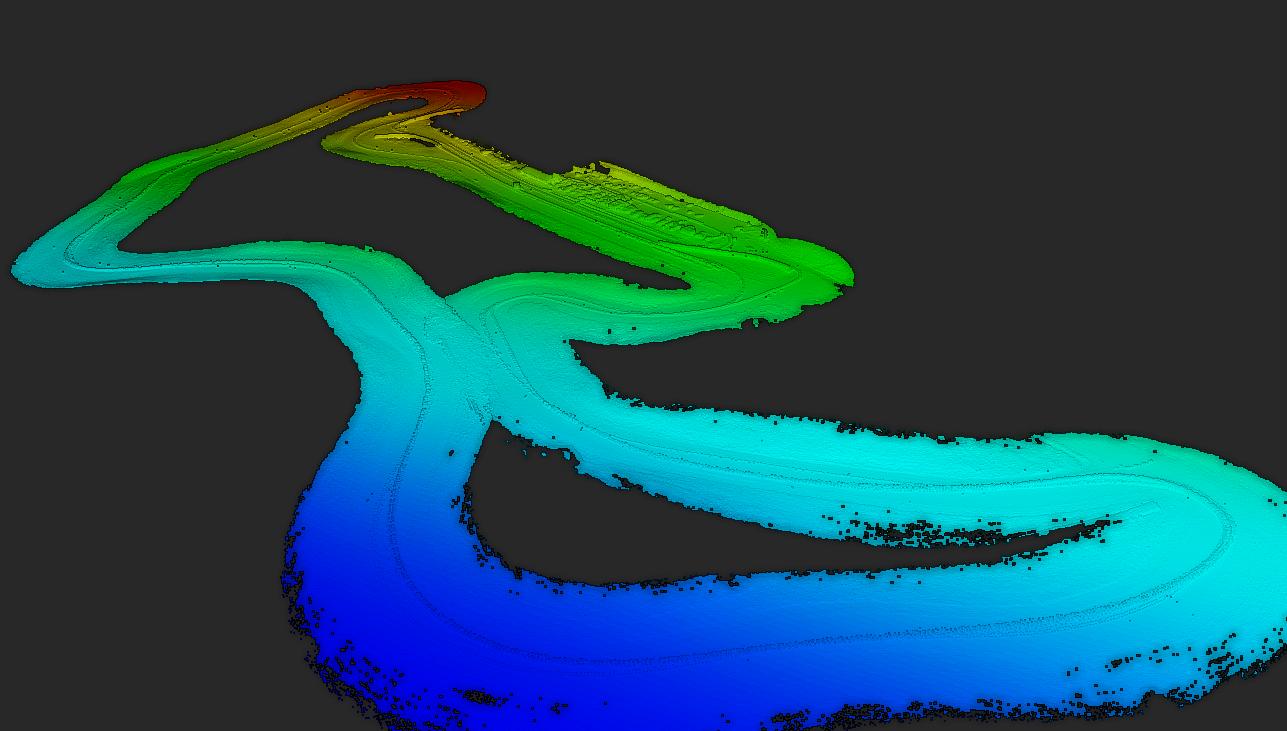

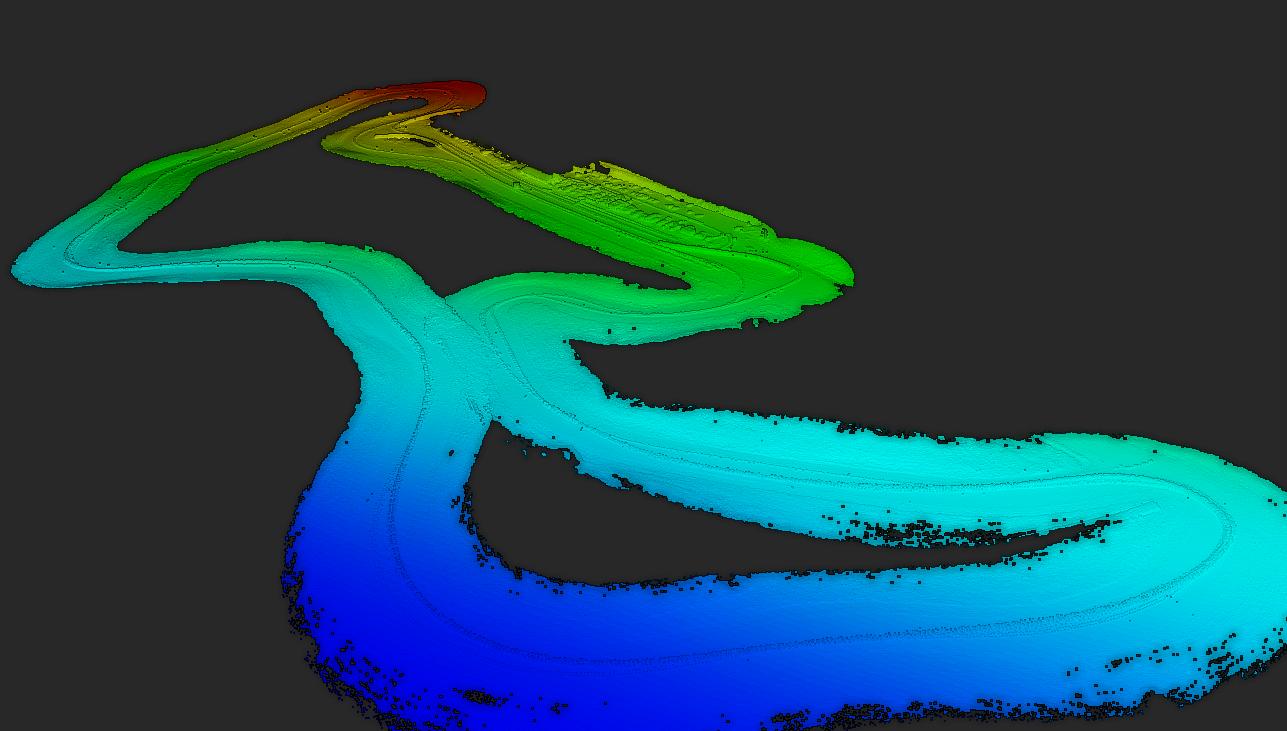

Colored with intensity from LiDAR:

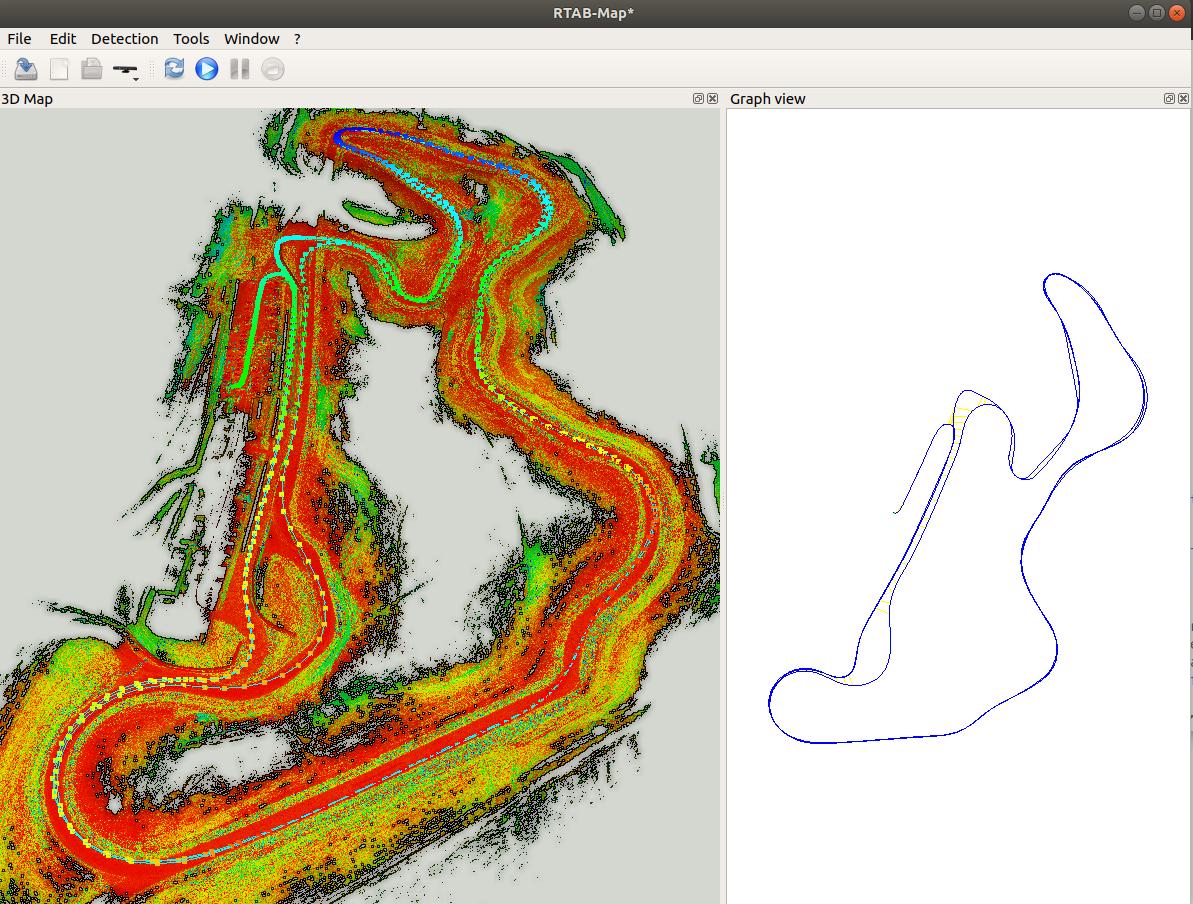

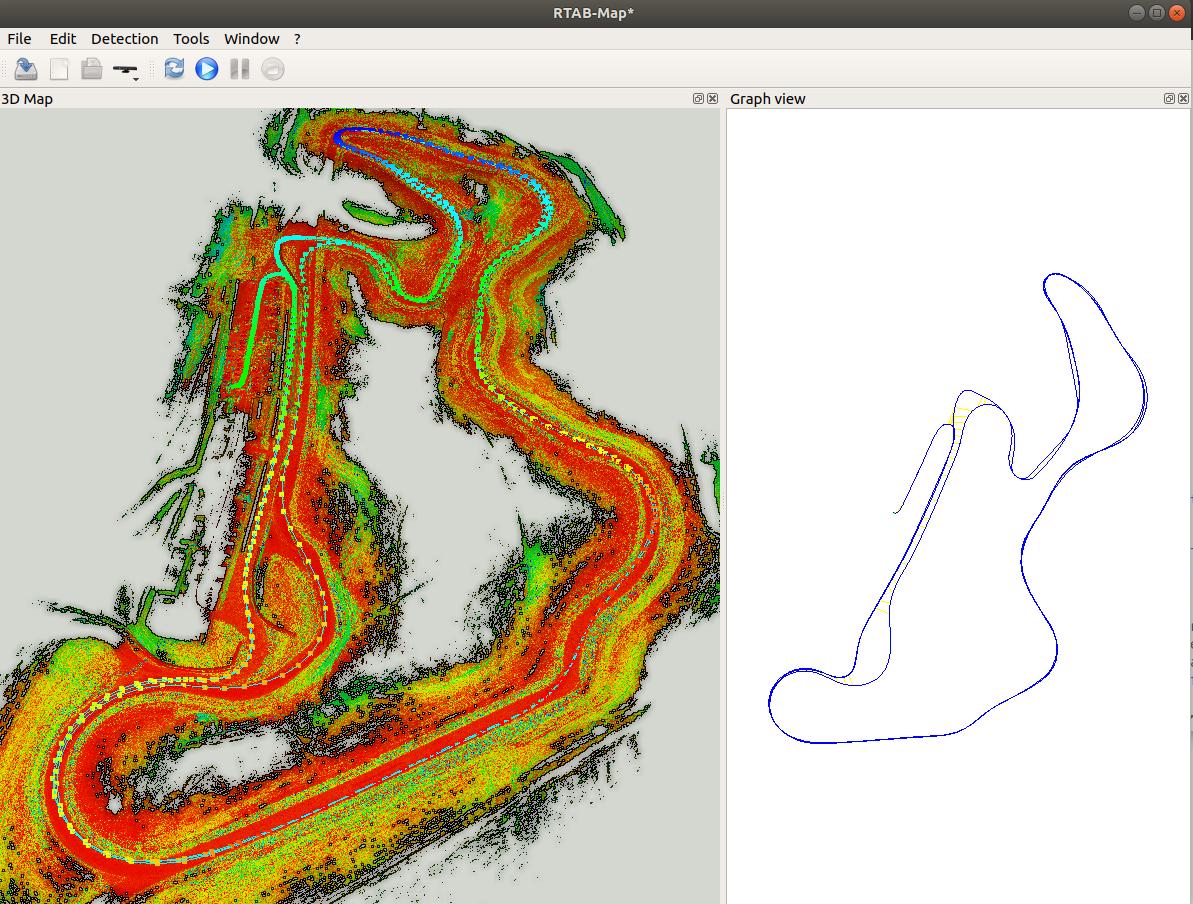

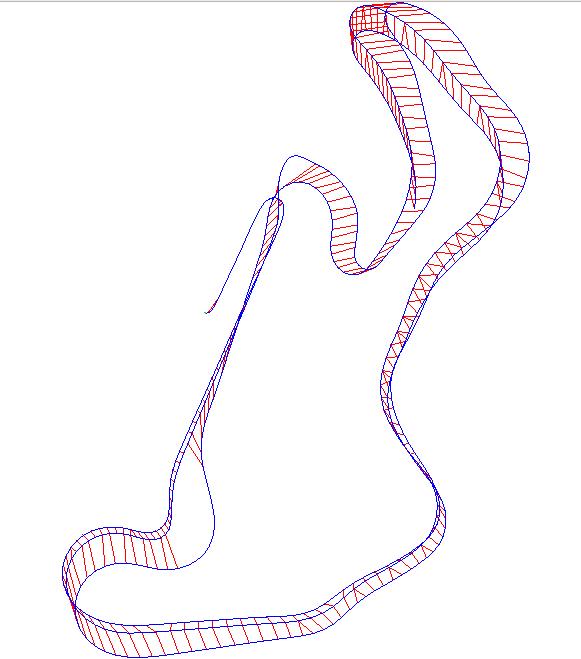

LiDAR Odometry-only (red lines are loop closures):

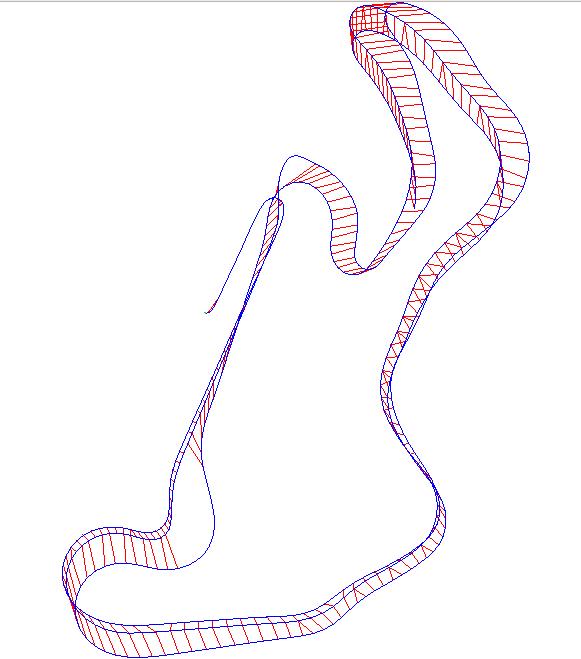

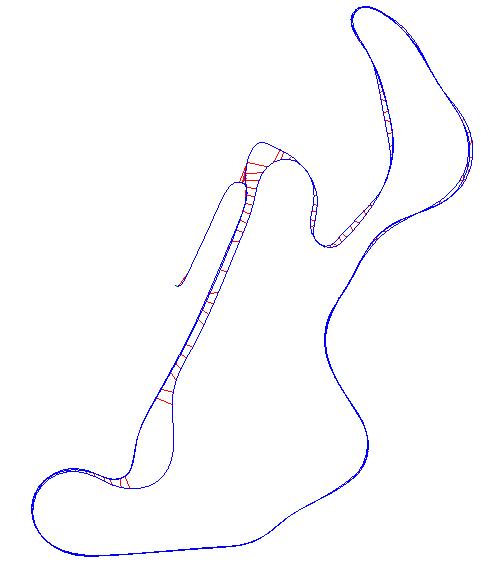

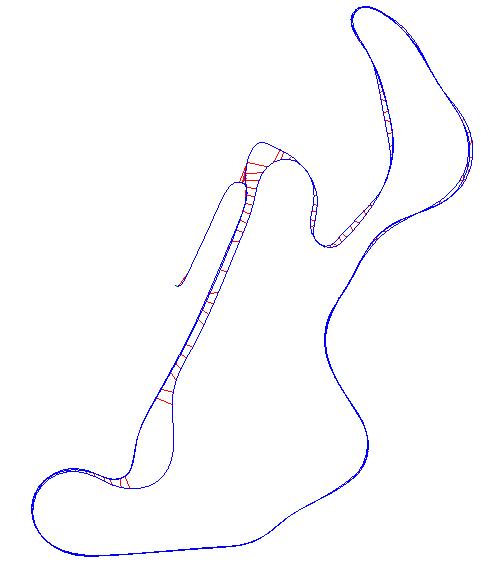

With loop closure optimization:

To distinguish the track and the grass, the velodyne's intensity channel can give a good idea. We can see it more clearly in this video: https://youtu.be/hmtdcxV-0NU

The parameters above are quite very for this dataset to close the loops without a camera. Combining with a stereo camera could help to detect loop closures more robustly.

EDIT

Integrating a stereo camera is possible (assuming it is already calibrated, synchronized between left and right cameras and provide common stereo topics). You could add to rtabmap.launch command above:

stereo:=true \

rgbd_sync:=true \

approx_sync:=true \

approx_rgbd_sync:=false \

left_image_topic:=/stereo_camera/left/image_rect \

right_image_topic:=/stereo_camera/right/image_rect \

left_camera_info_topic:=/stereo_camera/left/camera_info \

right_camera_info_topic:=/stereo_camera/right/camera_info

This will synchronize stereo data with Lidar data. You may also do stereo visual odometry instead of LiDAR odometry, I think it would be a little more robust because of the lack of geometry in this kind of environment. To use stereo odometry, remove icp_odometry:=true above and set back --Rtabmap/DetectionRate 2. As I did for Lidar, there could be some tunings for visual parameters depending on the resolution of the cameras and this kind of environment. If you want to use external odometry from another visual odometry package, add:

visual_odometry:=false \

odom_topic:=/my_visual_odometry_topic