I think the intent of your question is more general than the specific questions you asked, so I'll try to answer:

Specific questions:

but how can I make it move accordingly with the goal coordinates?

Is there any ROS node which I can use as starting point to develop my ROS node? Do you have suggestions on the algorithm to use or on other sensors/methods?

There are several packages available within ROS for motion planning, with the Navigation Stack being sort of the "hello world" application for robots equipped with a lidar and wheel encoders.

Does the laser/scan topic work fine with laser distance sensors?

You could make your 4 laser distance sensors output data that could be built into a laser/scan message (sensor_msgs/LaserScan). But a message with only 4 active data points would be of limited use in a typical robot environment.
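To make the 4-point scan idea concrete, here is a minimal sketch in plain Python (no ROS imports). The function name `four_beam_scan` and the beam layout (front, left, back, right, spaced 90 degrees apart, counter-clockwise) are assumptions for illustration; only the field names mirror sensor_msgs/LaserScan.

```python
import math

def four_beam_scan(front, left, back, right, range_max=80.0):
    """Return LaserScan-style fields for four fixed beams 90 degrees apart.

    Assumed layout (hypothetical): beam 0 points forward, and the others
    follow counter-clockwise: left, back, right.
    """
    ranges = []
    for r in (front, left, back, right):
        # Readings outside (0, range_max] are reported as "no return".
        ranges.append(r if 0.0 < r <= range_max else math.inf)
    return {
        "angle_min": 0.0,
        "angle_max": 3 * math.pi / 2,
        "angle_increment": math.pi / 2,
        "range_min": 0.0,
        "range_max": range_max,
        "ranges": ranges,
    }

scan = four_beam_scan(1.0, 2.0, 3.0, 100.0)
print(scan["ranges"])
```

In a real node these values would be copied into a LaserScan message and published. With a 90 degree increment, anything sitting between the beams is invisible, which is why such a scan is of limited use.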

My input on what I take to be the real intent of the post, namely "give me advice on how to do this":

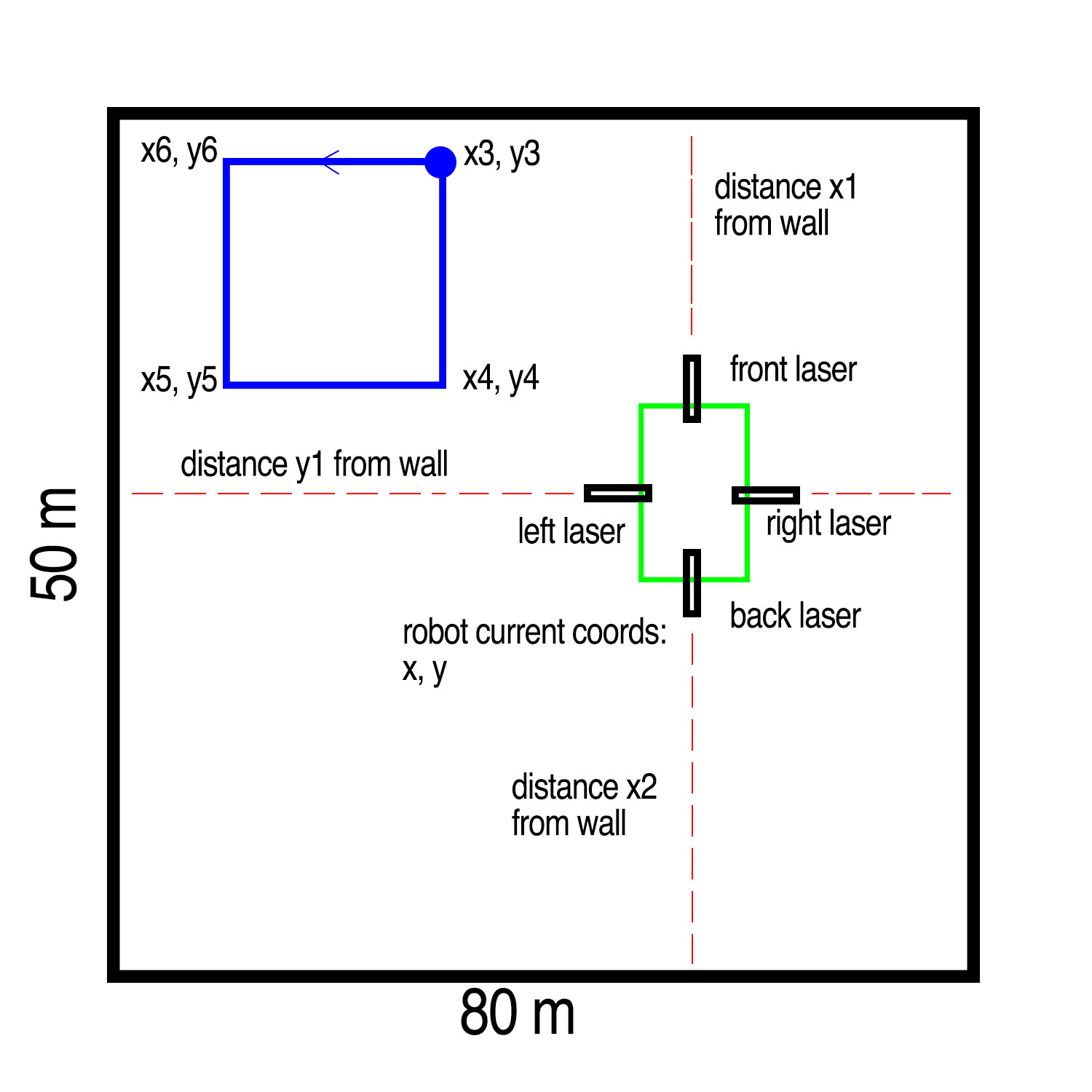

The issue of range for laser scanners is not new. Your proposed idea of 4 distance sensors pointing at the walls will work fine provided that:

- the robot is, and stays, oriented parallel to the walls

- you have some way to detect obstacles, or can be assured there will not be any obstacles.

If both conditions are met, you would need to generate an odom message based on the readings of the lasers, and move_base could then be used to do path planning. Of course there are some caveats regarding noise and accuracy of your lasers, but I'll skip those for now.
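As a rough sketch of the wall-aligned case, the position can be read almost directly from the four distances. Everything here is an assumption for illustration: the function name `pose_from_walls`, a rectangular room with known width and height, a room frame with its origin in the south-west corner, and all four beams starting from the same point on the robot.

```python
def pose_from_walls(d_west, d_east, d_south, d_north, room_w, room_h, tol=0.10):
    """Estimate (x, y) in a room frame with origin at the south-west corner.

    Assumes the robot stays parallel to the walls. Returns None when
    opposite readings do not add up to the room size, which suggests a
    blocked beam (obstacle) or a rotated robot.
    """
    if abs(d_west + d_east - room_w) > tol:
        return None
    if abs(d_south + d_north - room_h) > tol:
        return None
    # Each axis is measured twice; average the two estimates.
    x = 0.5 * (d_west + (room_w - d_east))
    y = 0.5 * (d_south + (room_h - d_north))
    return x, y

print(pose_from_walls(2.0, 8.0, 3.0, 2.0, room_w=10.0, room_h=5.0))
```

The returned x and y are what you would put in the pose part of the odom message. The consistency check doubles as a very crude obstacle detector, since a beam that hits something makes the two readings on that axis sum to less than the room size.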

In the case of 4 stationary lasers on a platform that cannot be assured to remain oriented, I can imagine an algo that determines accurate long-term orientation and location, provided the following are met:

- you know the starting location and orientation of the robot

- you have accurate measurements of the room

- the lasers are suitably quiet, stable, and repeatable

- you know which way the wheels are rotating (assuming you have wheels)

It's possible AMCL can do this out of the box with 4 points, but I'm not sure. The algo I'm imagining is more deterministic than AMCL (meaning more likely to fail in noisy conditions).
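One possible geometric starting point for such an algo (a sketch under stated assumptions, not a tested method): in a rectangular room, while each beam still hits "its own" wall, the front and back readings add up to room_w / cos(theta), and the left and right readings to room_h / cos(theta). That gives the size of the rotation theta but not its sign, which is where knowing the starting orientation and the wheel direction comes in. The function name and the `prev_theta` argument below are hypothetical.

```python
import math

def heading_and_position(d_front, d_back, d_left, d_right,
                         room_w, room_h, prev_theta=0.0):
    """Estimate (theta, x, y) for small rotations in a rectangular room.

    Assumes the front/back beams hit the east/west walls and the
    left/right beams hit the north/south walls, with the room frame
    origin in the south-west corner.
    """
    # Opposite beams span the room along a line tilted by theta.
    ratio_x = room_w / (d_front + d_back)
    ratio_y = room_h / (d_left + d_right)
    # Average both axes; clamp so noise cannot push the cosine above 1.
    cos_theta = min(1.0, 0.5 * (ratio_x + ratio_y))
    magnitude = math.acos(cos_theta)
    # The distances cannot tell left from right rotation, so reuse the
    # sign of the previous estimate (e.g. from wheel direction).
    theta = math.copysign(magnitude, prev_theta)
    x = d_back * cos_theta
    y = d_right * cos_theta
    return theta, x, y
```

Because acos is very sensitive near zero rotation, small errors in the readings turn into large heading errors there, which fits the point that a deterministic approach like this is likely to struggle in noisy conditions.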

A more general setup that can use well-tested ROS apps would be to mount one or more of your 80m sensors on a rotating platform and generate a multi-angle laser/scan message. With rotating lasers you could see the walls, detect the orientation of the robot relative to the walls, and track obstacles.
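A minimal sketch of the bookkeeping for a rotating sensor, assuming you can read the platform angle along with each distance sample. The function name `samples_to_ranges` and the choice of equal-width bins over a full turn are assumptions for illustration.

```python
import math

def samples_to_ranges(samples, num_bins=360):
    """Bin (angle_rad, range_m) samples from one revolution into a
    fixed-size ranges list, as a LaserScan message expects.

    Bin i covers angles from i * increment up to (i + 1) * increment.
    The closest reading wins when several samples share a bin; bins
    without any sample stay at infinity.
    """
    ranges = [math.inf] * num_bins
    increment = 2 * math.pi / num_bins
    for angle, dist in samples:
        # Wrap the angle into [0, 2*pi) and pick the bin it falls in.
        idx = int((angle % (2 * math.pi)) / increment) % num_bins
        ranges[idx] = min(ranges[idx], dist)
    return ranges

sweep = [(0.1, 5.0), (1.7, 3.0), (1.8, 2.5), (-1.0, 7.0)]
print(samples_to_ranges(sweep, num_bins=4))
```

The resulting list would go into the ranges field, with angle_min set to 0 and angle_increment set to 2*pi / num_bins. A slow platform means the robot may move during one sweep, so the scan time fields matter more than with a commercial scanner.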

Many people have put laser range finders on rotating platforms and posted YouTube videos of the process. I have built a laser scanner based on a cheap range finder. It has better range than the laser scanners I bought, but it's noisier.

Hello guys,

We have a precise (±2cm) indoor navigation system (Indoor “GPS”) that is designed to provide real-time location coordinates for autonomous robots, vehicles, and AR and VR systems.

Demo of how it works:

https://www.youtube.com/channel/UC4O_kJBQrKC-NCgidS_4N7g/videos

Basics: You have stationary beacons (not Bluetooth or WiFi) every 10-50 meters, and you track a mobile beacon on your robot with ±2cm precision. You get the coordinates either directly from the beacon on your robot via USB, UART, SPI, or I2C, or from the central controller, depending on the needs of your system. A detailed description of the protocol is available.

More information is on marvelmind.com

I will be happy to provide you with more information, if needed.

P.S. You can use the following coupon as "Welcome Discount" 3% OFF srg_a55k_3

The most important thing is that the system is readily available and you ...

Honestly speaking, they look like all the other UWB products that you can find on the market, and those provide only +/-50 cm accuracy in the best scenarios. I could be interested, but I doubt that they can reach such high precision.

You can contact me by email (check my profile) so we can discuss your system in more detail.

Hi Marcus, thank you for your interest in our system. I do not see your email in your profile. Could you send an email to sergey(at)marvelmind.com? I will answer all your questions. Thank you in advance.

@Marcus Barnet: it would be interesting (and beneficial to the community) if you could post a comment here with whatever you learn about @Sergey_marvelmind's product.

@gvdhoorn yes, I will share everything for sure! @Sergey_marvelmind: I sent you an email.

By the way, I've found another company that provides very high accuracy (+/-2cm) with radio beacons, but their system is very expensive (>10,000 USD).

@gvdhoorn if you want, I can share all the info you need, plus an additional discount coupon for the community.

We have a lot of demos of our products, as well as videos and docs.

Just for example:

- https://www.youtube.com/watch?v=YAU-WXz26YY

- https://www.youtube.com/watch?v=OXetXiDyAZI

- https://www.youtube.com/watch?v=MccIB2pUFaM

I'm not necessarily interested in your product(s). I just wanted to make sure your comments are not just marketing for your company, but would result in a contribution to the question @Marcus Barnet posted.

If you would have a proper ROS driver (adhering to the relevant REPs, etc), you could perhaps post in the Projects category on ROS Discourse. Plain advertisements are not wanted there though.