Using RTAB-Map to scan a plain wall in front of the robot

Hello,

I'm working on a robotic arm that can be equipped with either a RealSense or a ZED RGB-D camera.

The camera is attached to the robot's end-effector, so I can perform a slow "zig-zag" movement (left to right) to scan a 2 m x 2 m area in front of the robot.
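For reference, a zig-zag (boustrophedon) sweep like the one described can be sketched as a list of row start/end waypoints. This is a minimal sketch, assuming a hypothetical wall-plane frame (u to the right, v upward, origin at the bottom-left corner of the area) and a hypothetical `row_spacing` parameter; it is not taken from any ROS or MoveIt API.

```python
import math
from typing import List, Tuple


def zigzag_waypoints(width: float, height: float,
                     row_spacing: float) -> List[Tuple[float, float]]:
    """Boustrophedon (zig-zag) waypoints over a width x height area.

    Coordinates are (u, v) in metres in a hypothetical wall-plane frame:
    u runs left to right, v bottom to top, origin at the bottom-left corner.
    Each row contributes its start and end point; the sweep direction
    alternates so the arm never jumps back across the wall.
    """
    if width <= 0 or height <= 0 or row_spacing <= 0:
        raise ValueError("width, height and row_spacing must be positive")
    # Enough rows that neighbouring rows are at most row_spacing apart,
    # with the first and last rows on the bottom and top edges.
    n_rows = max(2, math.ceil(height / row_spacing) + 1)
    step = height / (n_rows - 1)
    waypoints: List[Tuple[float, float]] = []
    for i in range(n_rows):
        v = i * step
        if i % 2 == 0:
            waypoints += [(0.0, v), (width, v)]   # left -> right
        else:
            waypoints += [(width, v), (0.0, v)]   # right -> left
    return waypoints
```

For the 2 m x 2 m area with 0.5 m between rows, `zigzag_waypoints(2.0, 2.0, 0.5)` yields five rows (ten waypoints), which would still have to be converted into end-effector poses at the chosen distance from the wall.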

The area in front of the robot is always a plain (white) wall with some obstacles (boxes).

My first question: what is the most effective way to get a good PointCloud2 of the environment? I need both RGB and XYZ data.
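Regarding keeping both RGB and XYZ: in an XYZRGB PointCloud2 following the common PCL convention, the colour is stored in a single float32 `rgb` field whose 32 bits are `0x00RRGGBB`. A minimal sketch of that packing with the standard `struct` module, assuming little-endian data; the helper names are hypothetical.

```python
import struct
from typing import Tuple


def pack_rgb(r: int, g: int, b: int) -> float:
    """Pack 8-bit RGB into a float32 'rgb' value (PCL-style 0x00RRGGBB)."""
    for c in (r, g, b):
        if not 0 <= c <= 255:
            raise ValueError("colour components must be in [0, 255]")
    rgb_uint = (r << 16) | (g << 8) | b
    # Reinterpret the 32 bits as a float32; no numeric conversion happens.
    return struct.unpack("<f", struct.pack("<I", rgb_uint))[0]


def unpack_rgb(rgb: float) -> Tuple[int, int, int]:
    """Recover (r, g, b) from a packed float32 'rgb' value."""
    rgb_uint = struct.unpack("<I", struct.pack("<f", rgb))[0]
    return (rgb_uint >> 16) & 0xFF, (rgb_uint >> 8) & 0xFF, rgb_uint & 0xFF
```

The round trip is exact because the top byte is zero, so the bit pattern never becomes a NaN or infinity.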

I tried the popular RTAB-Map package to create the output cloud, but I could not get good results.

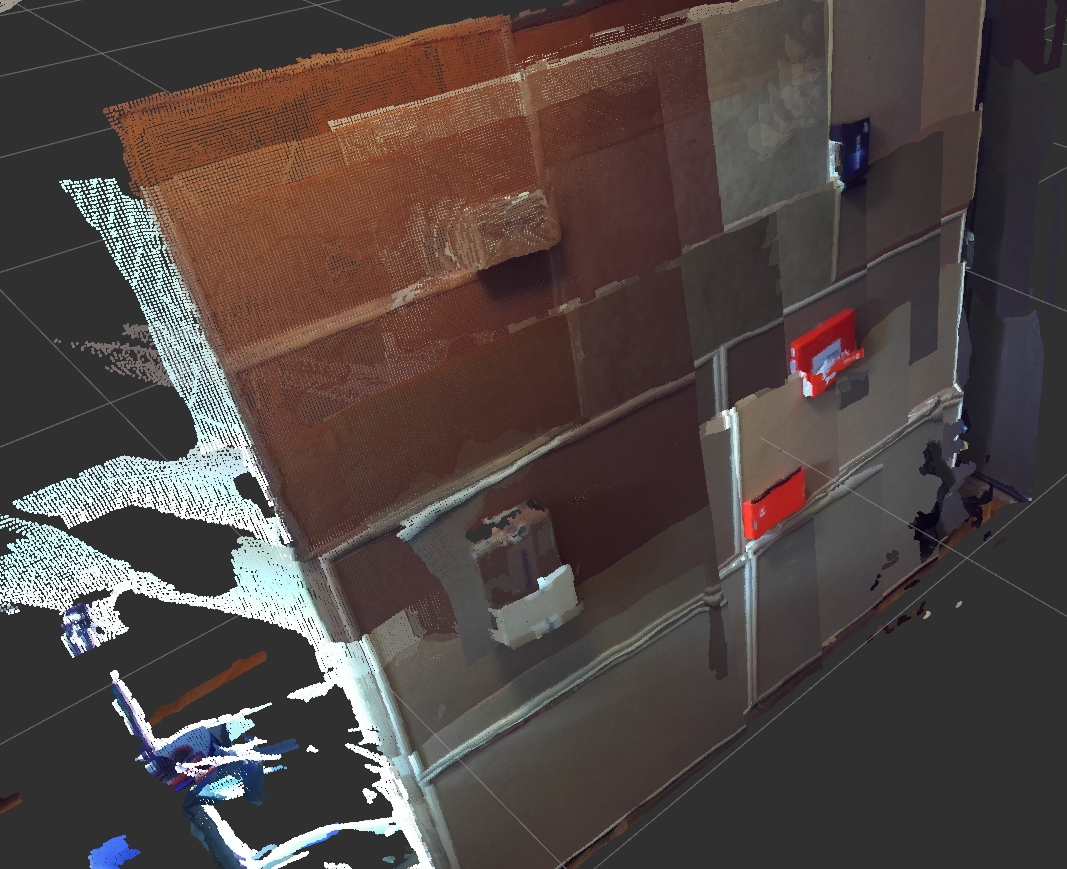

I assume that because of the plain wall (i.e., there are no reference points for merging the data), the algorithm produced an unacceptable output. I tried decreasing `cloud_voxel_size` and the decimation, and increasing `DetectionRate`, but I could not get better results.
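As far as I understand, `cloud_voxel_size` applies a voxel-grid filter to the generated cloud, so it changes how dense the output is rather than how the individual frames are registered, which may be why lowering it did not help. A minimal NumPy sketch of voxel-grid downsampling (not RTAB-Map's own code), assuming an (N, 3) XYZ array in metres:

```python
import numpy as np


def voxel_downsample(points: np.ndarray, voxel_size: float) -> np.ndarray:
    """Replace all points that fall in the same cubic voxel by their centroid.

    points: (N, 3) array of XYZ in metres. Returns an (M, 3) array, M <= N.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    pts = np.asarray(points, dtype=np.float64)
    # Integer voxel coordinates of every point.
    keys = np.floor(pts / voxel_size).astype(np.int64)
    # Group identical voxel keys; `inverse` maps each point to its voxel row.
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True,
                                   return_counts=True)
    inverse = inverse.reshape(-1)  # the shape differs between NumPy versions
    sums = np.zeros((counts.size, 3))
    np.add.at(sums, inverse, pts)  # accumulate coordinates per voxel
    return sums / counts[:, None]
```

With `voxel_size=0.005` (the value used in the update below), points closer than about 5 mm collapse into one, so the voxel size bounds the output resolution but cannot fix misaligned frames.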

If RTAB-Map is the most suitable package for this problem, could you please help me set it up? Which parameters should I change?

I'm not interested in localization or in merging maps. I just want to scan the 2 m x 2 m area and get a PointCloud2 of it.
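Since only the 2 m x 2 m area matters, the assembled cloud can optionally be cropped to that region afterwards. A minimal sketch of an axis-aligned crop box, assuming the cloud is an (N, K) NumPy array whose first three columns are XYZ (extra columns such as `rgb` are kept) and that it is expressed in a frame aligned with the wall; the bounds are hypothetical values.

```python
import numpy as np


def crop_box(points: np.ndarray, min_xyz, max_xyz) -> np.ndarray:
    """Keep rows whose XYZ (first three columns) lie inside [min_xyz, max_xyz].

    Extra columns (e.g. a packed rgb value) are carried along unchanged.
    """
    pts = np.asarray(points, dtype=np.float64)
    lo = np.asarray(min_xyz, dtype=np.float64)
    hi = np.asarray(max_xyz, dtype=np.float64)
    # A point survives only if all three coordinates are within bounds.
    mask = np.all((pts[:, :3] >= lo) & (pts[:, :3] <= hi), axis=1)
    return pts[mask]


# Hypothetical bounds: 2 m wide, 2 m high, up to 1.5 m from the wall.
AREA_MIN = (0.0, 0.0, 0.0)
AREA_MAX = (2.0, 2.0, 1.5)
```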

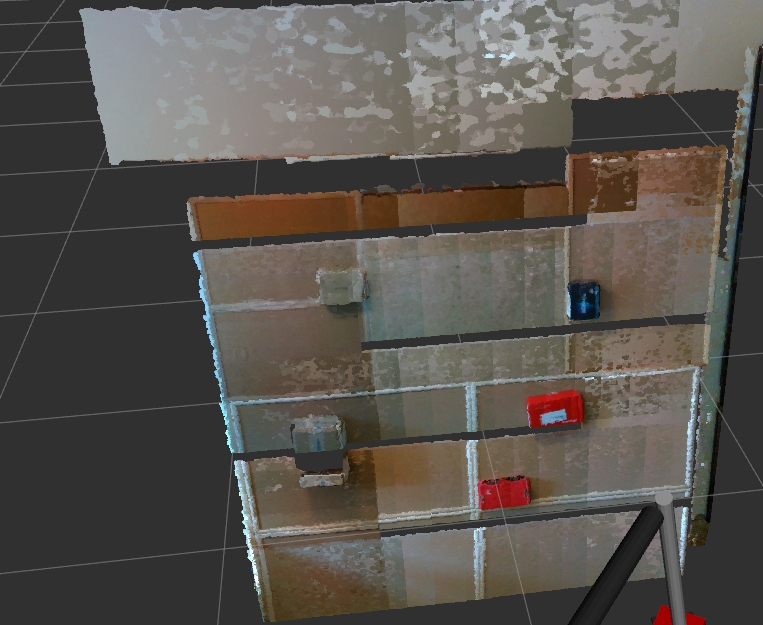

UPDATE (after the answer): current outcome with these parameters:

`cloud_voxel_size=0.005`, `DetectionRate=2`, `MaxFeatures=-1`, `ProximityBySpace=false`, `AngularUpdate=0`, `LinearUpdate=0`
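In a roslaunch file, RTAB-Map library parameters are usually given with their full namespaced names and `type="string"`. The sketch below renders the settings above that way; the namespaced keys (`Rtabmap/`, `Kp/`, `RGBD/`) are my assumption of the full names and should be checked against the parameter list (`Parameters.h`) of the installed RTAB-Map version. `cloud_voxel_size` is assumed to be a plain double ROS parameter of the node rather than a library parameter.

```python
from typing import Dict

# Assumed full names for the shorthand used in the update; verify them
# against Parameters.h of your RTAB-Map version before relying on them.
RTABMAP_PARAMS: Dict[str, str] = {
    "Rtabmap/DetectionRate": "2",
    "Kp/MaxFeatures": "-1",
    "RGBD/ProximityBySpace": "false",
    "RGBD/AngularUpdate": "0",
    "RGBD/LinearUpdate": "0",
}


def to_launch_params(params: Dict[str, str]) -> str:
    """Render library parameters as roslaunch <param> lines typed as strings."""
    lines = [f'<param name="{name}" type="string" value="{value}"/>'
             for name, value in params.items()]
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_launch_params(RTABMAP_PARAMS))
    # Assumption: cloud_voxel_size is a regular double ROS parameter.
    print('<param name="cloud_voxel_size" type="double" value="0.005"/>')
```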

ZED: unacceptable results.

RealSense: quite good. Its smaller FOV left some empty parts/gaps, but these can easily be fixed by modifying the zig-zag scanning path.
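Because the gaps come from the narrower field of view, one way to adjust the path is to derive the vertical distance between zig-zag rows from the FOV. A minimal sketch, assuming the camera faces a flat wall head-on at a fixed standoff distance with horizontal sweeps, so the vertical FOV sets the footprint height $h = 2d\tan(\theta/2)$; the overlap fraction is a hypothetical tuning value, and the FOV should come from the camera's datasheet.

```python
import math


def row_spacing(standoff: float, vfov_deg: float, overlap: float = 0.3) -> float:
    """Vertical distance between zig-zag rows so consecutive sweeps overlap.

    standoff: camera-to-wall distance in metres (camera facing the wall).
    vfov_deg: vertical field of view of the depth stream, in degrees.
    overlap:  fraction of the footprint shared by neighbouring rows.
    """
    if standoff <= 0 or not 0 < vfov_deg < 180 or not 0 <= overlap < 1:
        raise ValueError("invalid standoff, field of view or overlap")
    # Height of the area seen on the wall in a single frame.
    footprint = 2.0 * standoff * math.tan(math.radians(vfov_deg) / 2.0)
    return footprint * (1.0 - overlap)
```

A smaller FOV gives a smaller footprint and therefore requires more closely spaced rows at the same standoff distance.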

Thank you in advance for your help.