RealSense D435 + rtabmap + Pixhawk (IMU) + robot_localization

Hello everyone,

I am trying to fuse visual odometry with a Pixhawk IMU. For those who don't know, Pixhawk is an autopilot, in this case used on a drone. ROS integration is provided by the mavros package, so I can get a standard IMU message from the Pixhawk. I am using only the IMU data from the Pixhawk. I am using ROS Kinetic.

Intel already has a camera with a built-in IMU, the D435i. They provide an example launch file with exactly the same setup as I want to use (realsense, rtabmap, robot_localization). The only difference is the IMU used. The launch file is here: opensource_tracking.launch

Since I am using a different IMU, I changed the launch file a little bit: look here. I launch the node that gathers data from the Pixhawk elsewhere, so it cannot be found in the launch file above.

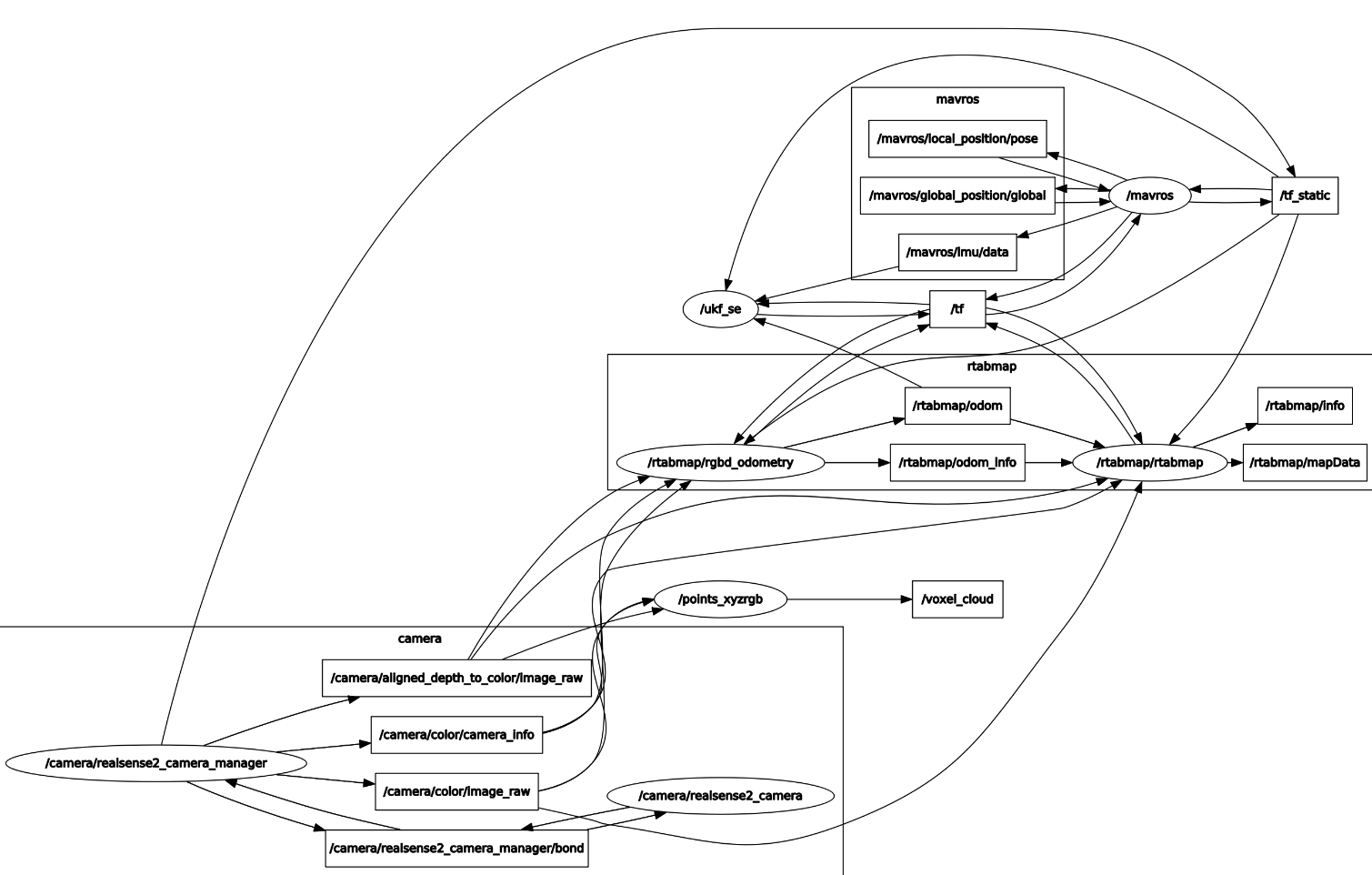

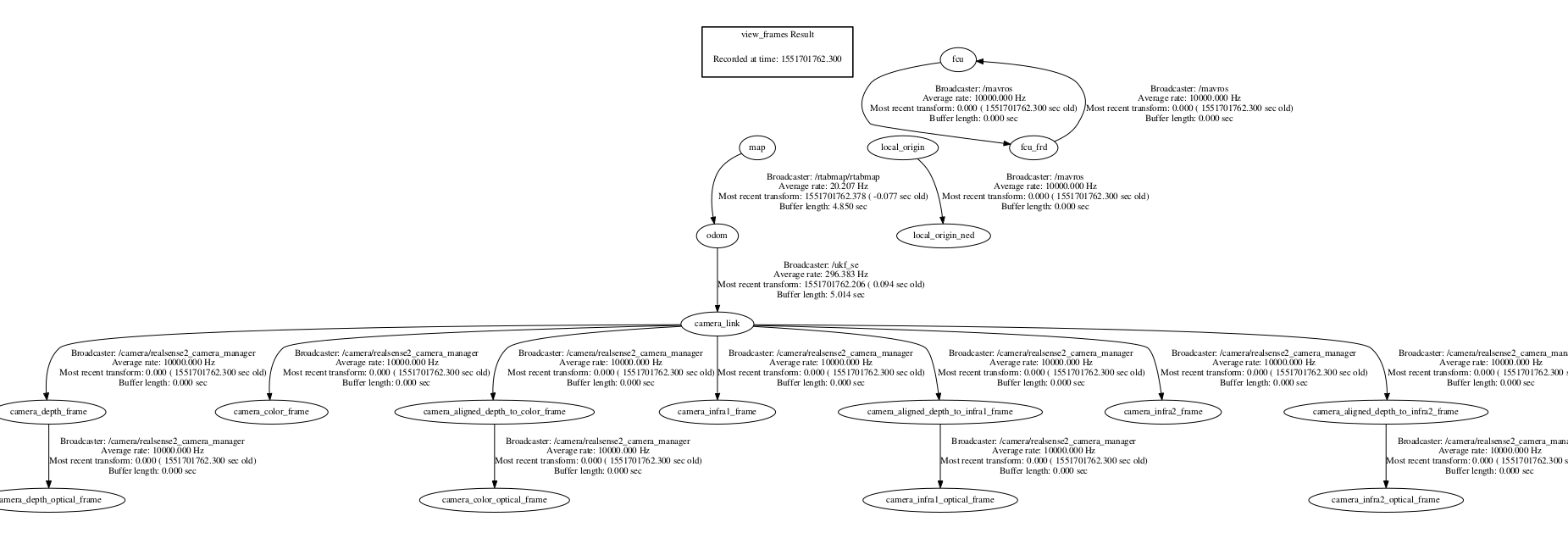

Now to the problem. I am able to run rtabmap, create a 3D map of the environment, and localize myself using the visual odometry alone. I am also able to get the IMU data from the Pixhawk when I echo its IMU topic. Everything runs without errors, but I am not sure whether the config is correct.

I attached the Pixhawk and the RealSense camera to a plastic plate and thought it was operating correctly. However, when I detach the Pixhawk and move it around on its own, the odometry does not change (I visualize the odometry in RViz). The odom topic I visualize is /odometry/filtered, which is the output of the robot_localization package, so I would expect it to be the fused result of the IMU and the visual odometry. To sum up: I am not sure whether the IMU affects the odometry at all. I attach the rqt_graph and tf view_frames output for a better understanding of what is happening.
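To make the "does the IMU affect the odometry" test less dependent on eyeballing RViz, here is a minimal sketch of a numeric check, not a definitive method. It assumes I record one orientation quaternion before and one after moving the detached Pixhawk, both from the IMU message and from /odometry/filtered. The function names `rotation_between` and `imu_influence` are hypothetical helpers made up for this example; they are not part of mavros or robot_localization.

```python
import math


def rotation_between(q_start, q_end):
    """Smallest rotation angle in radians between two quaternions (x, y, z, w)."""
    # Normalize both inputs so slightly non-unit quaternions do not break acos.
    n1 = math.sqrt(sum(c * c for c in q_start))
    n2 = math.sqrt(sum(c * c for c in q_end))
    dot = sum(a * b for a, b in zip(q_start, q_end)) / (n1 * n2)
    # q and -q describe the same rotation, so use the absolute value.
    dot = min(1.0, abs(dot))
    return 2.0 * math.acos(dot)


def imu_influence(imu_start, imu_end, odom_start, odom_end):
    """Return (imu_rotation, odom_rotation), both in radians.

    Hypothetical helper: a large imu_rotation together with an odom_rotation
    close to zero suggests the filter output is not following the IMU.
    """
    return (rotation_between(imu_start, imu_end),
            rotation_between(odom_start, odom_end))


if __name__ == "__main__":
    identity = (0.0, 0.0, 0.0, 1.0)
    # 90 degree rotation about the z axis.
    yaw_90 = (0.0, 0.0, math.sin(math.pi / 4), math.cos(math.pi / 4))
    imu_rot, odom_rot = imu_influence(identity, yaw_90, identity, identity)
    print("IMU rotated by %.3f rad, filtered odom by %.3f rad" % (imu_rot, odom_rot))
```

In ROS the quaternions would come from the `orientation` field of the sensor_msgs/Imu message and from `pose.pose.orientation` of the nav_msgs/Odometry message on /odometry/filtered. Whether the filtered orientation is even expected to follow the IMU depends on which IMU variables are enabled in the robot_localization config, so a near-zero result is only a hint, not proof.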

P.S. I may have left out some details about this issue, so please ask for additional info if it is needed. I really appreciate any help! Thanks

@eMrazSVK: please attach your images directly to your question. I've given you sufficient karma to do that.

Thanks, done.