Advice on improving pose estimation with robot_localization

Dear Tom Moore,

Let me start by thanking you for your excellent work on the robot_localization ROS package. I have been using it recently to estimate the pose of a differential-drive outdoor robot equipped with several sensors, and I would like to ask your opinion about it.

Perhaps you could send me some tips on how to improve the pose estimation of the robot, especially the robot's orientation. Here is a video of the pose estimation that I get.

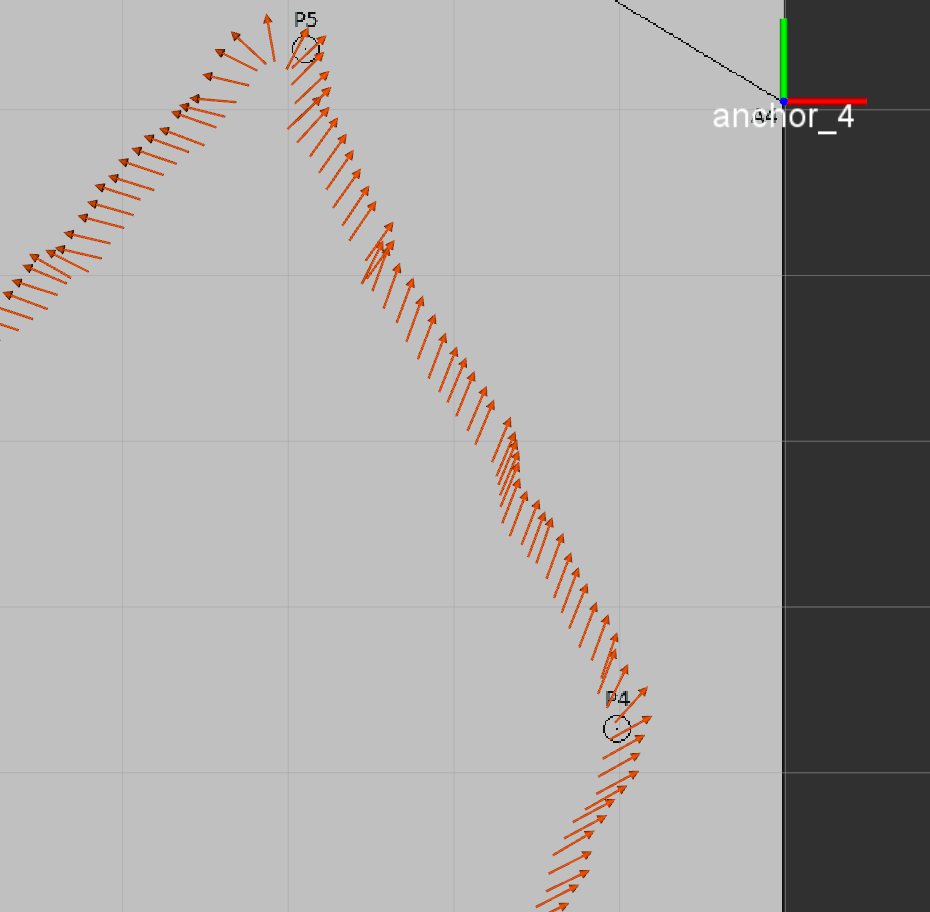

The odometry estimate given by the EKF node is the dark orange/red one. Below is a list of the main topics available in my dataset:

- /laser/scan_filtered --> Laserscan data

- /mavros/global_position/local --> Odometry msg fusing GPS+IMU (from mavros: Brown in the video)

- /mavros/global_position/raw/fix --> GPS data

- /mavros/imu/data --> Mavros IMU data

- /robot/odom --> Encoder data (odometry: Green in the video)

- /robot/new_odom --> Encoder data (odometry with covariance -- added offline)

- /tf --> Transforms

- /uwb/multilateration_odom --> Multilateration (triangulation method providing global x,y,z)

- /yei_imu/data --> Our own IMU data

- /zed/odom_with_twist --> Visual odometry from the ZED Stereolabs outdoor camera (Blue in the video)
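
For context on the "added offline" covariance in /robot/new_odom, here is a minimal sketch of how such a covariance can be built. It assumes a purely diagonal 6x6 matrix stored row-major as 36 values in the axis order (x, y, z, roll, pitch, yaw), which is the layout used by nav_msgs/Odometry. The helper name and the standard deviations are hypothetical and are not the values used in my dataset.

```python
# Axis order of the 6x6 covariance in nav_msgs/Odometry (row-major, 36 values).
AXES = ("x", "y", "z", "roll", "pitch", "yaw")


def diagonal_covariance(std_devs):
    """Return a 36-element row-major covariance with variances on the diagonal.

    std_devs holds one standard deviation per axis, in the order given by AXES.
    All off-diagonal terms are left at zero (an assumption of this sketch).
    """
    if len(std_devs) != len(AXES):
        raise ValueError("expected 6 standard deviations, got %d" % len(std_devs))
    cov = [0.0] * 36
    for i, sigma in enumerate(std_devs):
        cov[i * 6 + i] = float(sigma) ** 2  # diagonal entry (i, i)
    return cov


# Hypothetical standard deviations, for illustration only.
EXAMPLE_STD = [0.05, 0.05, 0.05, 0.1, 0.1, 0.02]
example_cov = diagonal_covariance(EXAMPLE_STD)
```

While rewriting a bag, the resulting list could be assigned to the `pose.covariance` or `twist.covariance` field of each odometry message.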

Although I have plenty of data, as a first stage I am trying to fuse only three sources: the position estimate from the onboard ultra-wideband (UWB) multilateration software (positional data only, no orientation), the robot encoders, which are decent, and our own IMU (on /yei_imu/data).

However, as you can see, the estimated orientation of the robot is sometimes odd. I would expect the blue axis of the base_link frame (in the video) to always point up, and the red axis to always point forward. Instead, the red axis in particular sometimes points outwards rather than in the direction of movement. This is clear here:

Do you have any suggestion to improve the orientation estimation of my robot?
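
To make the expectation about the red axis concrete, here is a minimal sketch of the check I have in mind: extract the yaw from the estimated orientation quaternion and compare it with the direction of travel between two consecutive position estimates. The function names are hypothetical, and the comparison only makes sense while the robot is driving forward with a non-negligible displacement.

```python
import math


def yaw_from_quaternion(x, y, z, w):
    """Yaw (rotation about the Z axis) of a unit quaternion, in radians."""
    return math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))


def wrap_angle(angle):
    """Wrap an angle to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def heading_error(yaw, dx, dy):
    """Difference between the estimated yaw and the direction of travel.

    dx, dy is the displacement between two consecutive position estimates,
    expressed in the same world frame as the yaw.
    """
    return wrap_angle(yaw - math.atan2(dy, dx))
```

A heading error that stays near zero while driving forward would mean the red axis follows the motion; large values would correspond to the behaviour seen in the video.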

Also, I notice that for positional tracking, it doesn't seem to make much of a difference whether I use just the UWB estimate or fuse UWB + robot encoders. I was expecting the fusion to smooth out the trajectory a bit, as the UWB data is subject to some jumps in position.
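
To illustrate what I mean by jumps, here is a minimal sketch that flags UWB fixes whose implied speed relative to the previous fix exceeds what the robot could plausibly reach. The function name and the `max_speed` threshold are hypothetical; this is only a way to quantify the jumps in the raw data, not something robot_localization does internally.

```python
import math


def find_jumps(fixes, max_speed):
    """Return indices of fixes that imply an implausible speed.

    fixes is a list of (t, x, y) tuples sorted by time, in seconds and metres.
    A fix is flagged when the straight-line speed from the previous fix
    exceeds max_speed (m/s).
    """
    jumps = []
    for i in range(1, len(fixes)):
        t0, x0, y0 = fixes[i - 1]
        t1, x1, y1 = fixes[i]
        dt = t1 - t0
        if dt <= 0.0:
            continue  # skip duplicated or out-of-order timestamps
        speed = math.hypot(x1 - x0, y1 - y0) / dt
        if speed > max_speed:
            jumps.append(i)
    return jumps
```

Note that both the fix that jumps away and the following fix that returns to the true track are flagged, since each implies a large speed relative to its predecessor.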

These are the params that I am currently using in the robot_localization software, in case you want to advise me to change anything.

Btw, I'm on ROS Kinetic, Ubuntu 16.04. Just some general guidelines and things that I could try from your perspective would be greatly appreciated. If you are interested in trying out my dataset, I can send a rosbag later.

Thank you in advance!

EDIT: posting config in-line:

    frequency: 10
    sensor_timeout: 0.25  # NOTE [D]: UWB works at 4Hz.
    two_d_mode: false
    transform_time_offset: 0.0
    transform_timeout: 0.25
    print_diagnostics: true
    publish_tf: true
    publish_acceleration: false

    map_frame: map
    odom_frame: odom
    base_link_frame: base_link
    world_frame: odom

    # UWB (x,y,z):
    odom0: uwb/multilateration_odom
    odom0_config: [true,  true,  true,   # x,y,z
                   false, false, false,
                   false, false, false,
                   false, false, false,
                   false, false, false]
    odom0_differential: false
    odom0_relative: false
    odom0_queue_size: 2
    odom0_pose_rejection_threshold: 3 ...

If you could post a bag file, that would be useful.