RTABMAP with two stereo cameras

Hello, and first of all, thanks to Mathieu Labbé for all his hard work on rtabmap and for bringing it to ROS!

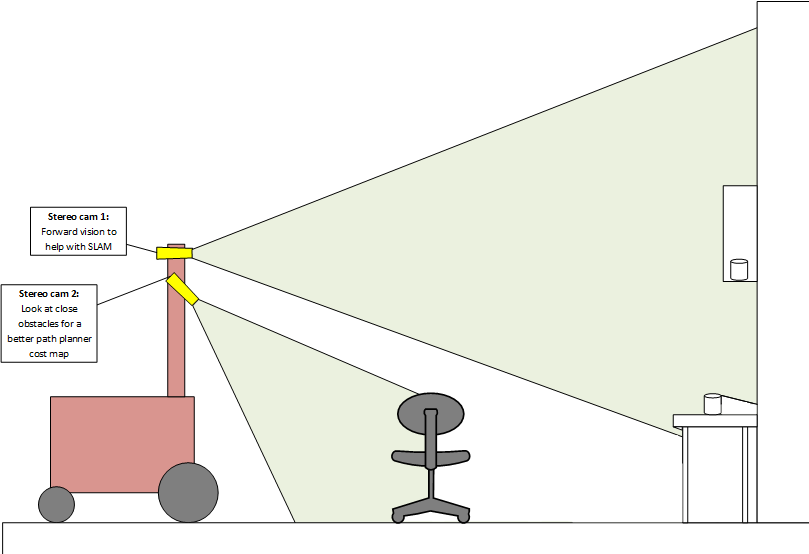

I would like to use two stereo cameras to help with both SLAM and path planning. I have been experimenting with a single camera and found that if I point it forward, SLAM works well but the path planner does not, because it has to guess about obstacles near the robot's feet, which the camera cannot see. If I point the camera down, path planning works well but mapping suffers.
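To put a number on the "blind spot at the robot's feet", here is a minimal flat-floor geometry sketch. The camera height, pitch angles and 60° vertical field of view below are hypothetical values, not measurements from my robot. The steepest ray in the image (pitch plus half the vertical FOV) determines the closest floor point the camera can see.

```python
import math


def ground_visible_range(height_m, pitch_down_deg, vfov_deg):
    """Return (near, far) floor distances in metres visible to a camera.

    Assumptions (hypothetical, for illustration): flat floor, camera at
    height_m above it, optical axis tilted pitch_down_deg below horizontal
    (0..90), vertical field of view vfov_deg.
    far is math.inf when the top edge of the view is at or above the horizon.
    """
    half = vfov_deg / 2.0
    lower_edge = math.radians(pitch_down_deg + half)  # steepest (lowest) ray
    upper_edge = math.radians(pitch_down_deg - half)  # shallowest ray
    if lower_edge <= 0:
        # Even the lowest ray points at or above the horizon: floor never seen.
        return math.inf, math.inf
    if lower_edge >= math.pi / 2:
        # Lowest ray points straight down or behind: floor visible right below.
        near = 0.0
    else:
        near = height_m / math.tan(lower_edge)
    far = height_m / math.tan(upper_edge) if upper_edge > 0 else math.inf
    return near, far


if __name__ == "__main__":
    for pitch in (0, 30, 45):
        print(pitch, ground_visible_range(0.5, pitch, 60))
```

With these hypothetical numbers (camera 0.5 m up, 60° vertical FOV), a forward-facing camera cannot see the floor closer than about 0.87 m, while tilting it 45° down brings that to about 0.13 m but limits the far edge to about 1.87 m. That is the trade-off between mapping range and near-field obstacle coverage described above.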

I'd like to use two stereo cameras to improve the path planner's obstacle detection while keeping good map-building ability.

The question is: what is the best way to do this? rtabmap seems to be written to subscribe to only one stereo camera. Should I combine the point clouds that stereo_image_proc generates for each camera?
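For reference, "combining the point clouds" would mean transforming each camera's cloud into a common robot frame and concatenating them. Below is a minimal NumPy sketch of that step only. It assumes the points are already in a body-aligned camera frame (x forward, y left, z up), not the optical frame (z forward, x right, y down) that stereo_image_proc clouds use. It also assumes each camera is only pitched about its y axis, and the mounting poses are hypothetical. In ROS these transforms would normally come from tf, and the two clouds should be taken at the same time instant.

```python
import numpy as np


def pose_matrix(pitch_rad, xyz):
    """4x4 homogeneous transform from a (hypothetical) camera frame to the base frame.

    Assumes a body-aligned camera frame (x forward, y left, z up) and a camera
    rotated only about its y axis; positive pitch tilts the x axis downward.
    """
    c, s = np.cos(pitch_rad), np.sin(pitch_rad)
    T = np.eye(4)
    T[:3, :3] = np.array([[c, 0.0, s],
                          [0.0, 1.0, 0.0],
                          [-s, 0.0, c]])
    T[:3, 3] = xyz
    return T


def to_base(points, T):
    """Transform an (N, 3) array of points with a 4x4 homogeneous transform."""
    homo = np.hstack([points, np.ones((points.shape[0], 1))])
    return (homo @ T.T)[:, :3]


def merge_clouds(clouds_and_poses):
    """Express every cloud in the base frame and stack them into one (M, 3) array."""
    return np.vstack([to_base(points, T) for points, T in clouds_and_poses])


if __name__ == "__main__":
    # Hypothetical mounting: both cameras 0.2 m forward and 0.5 m up,
    # one looking straight ahead, one pitched 45 degrees down.
    T_front = pose_matrix(0.0, [0.2, 0.0, 0.5])
    T_down = pose_matrix(np.radians(45.0), [0.2, 0.0, 0.5])
    front = np.array([[1.0, 0.0, 0.0]])           # 1 m ahead of the front camera
    down = np.array([[np.sqrt(2) / 2, 0.0, 0.0]])  # along the down camera's axis
    print(merge_clouds([(front, T_front), (down, T_down)]))
```

Here the point seen by the downward camera lands on the floor (z = 0) at 0.7 m in front of the base, in the same frame as the forward camera's point, so a planner could consume the merged array directly.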

I'm also unsure how to use my wheel odometry in this setup. It looks like rtabmap can only use one odometry source. Should I just use visual odometry, if it works well enough, and pipe that into rtabmap? Right now I'm experimenting with wheel odometry and one stereo camera in rtabmap, and I'm not sure how these systems should be connected.
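One option I'm considering, which is my own assumption and not something rtabmap prescribes, is to merge wheel and visual odometry into a single odometry stream before it reaches rtabmap, typically with an EKF. The toy sketch below shows only the core idea. It fuses two independent velocity estimates by inverse-variance weighting (the static Kalman update), then dead-reckons a planar pose. The variances are hypothetical, and a real filter would also track covariance over time and handle correlated errors.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float = 0.0
    y: float = 0.0
    theta: float = 0.0


def fuse(a, var_a, b, var_b):
    """Inverse-variance weighted average of two independent estimates.

    Returns (fused_value, fused_variance); the less noisy input gets more weight.
    """
    w_a = 1.0 / var_a
    w_b = 1.0 / var_b
    value = (w_a * a + w_b * b) / (w_a + w_b)
    return value, 1.0 / (w_a + w_b)


def integrate(pose, v, omega, dt):
    """Dead-reckon a planar pose using the heading at the middle of the step."""
    mid = pose.theta + 0.5 * omega * dt
    return Pose2D(pose.x + v * dt * math.cos(mid),
                  pose.y + v * dt * math.sin(mid),
                  pose.theta + omega * dt)


if __name__ == "__main__":
    # Hypothetical readings: wheels trusted more for speed, VO more for rotation.
    v, v_var = fuse(1.0, 0.01, 1.2, 0.04)    # wheel vs visual linear velocity
    w, w_var = fuse(0.1, 0.04, 0.0, 0.01)    # wheel vs visual angular velocity
    print(v, v_var, w, w_var)
    print(integrate(Pose2D(), v, w, 1.0))
```

The weighting means wheel odometry dominates on straight runs where it is reliable, while visual odometry can correct heading when the wheels slip in turns.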

Thanks!