robot_localization IMU and visual odometry from rtabmap

I realize this topic has come up a lot, but I've read every related question on here and nothing is quite working yet.

I have a depth camera and an IMU. The IMU is rigidly attached to the camera. I'm using rtabmap to produce visual odometry and localize against a map I previously created, and I've written my own IMU node, called Tachyon, for my InvenSense MPU-9250 board.

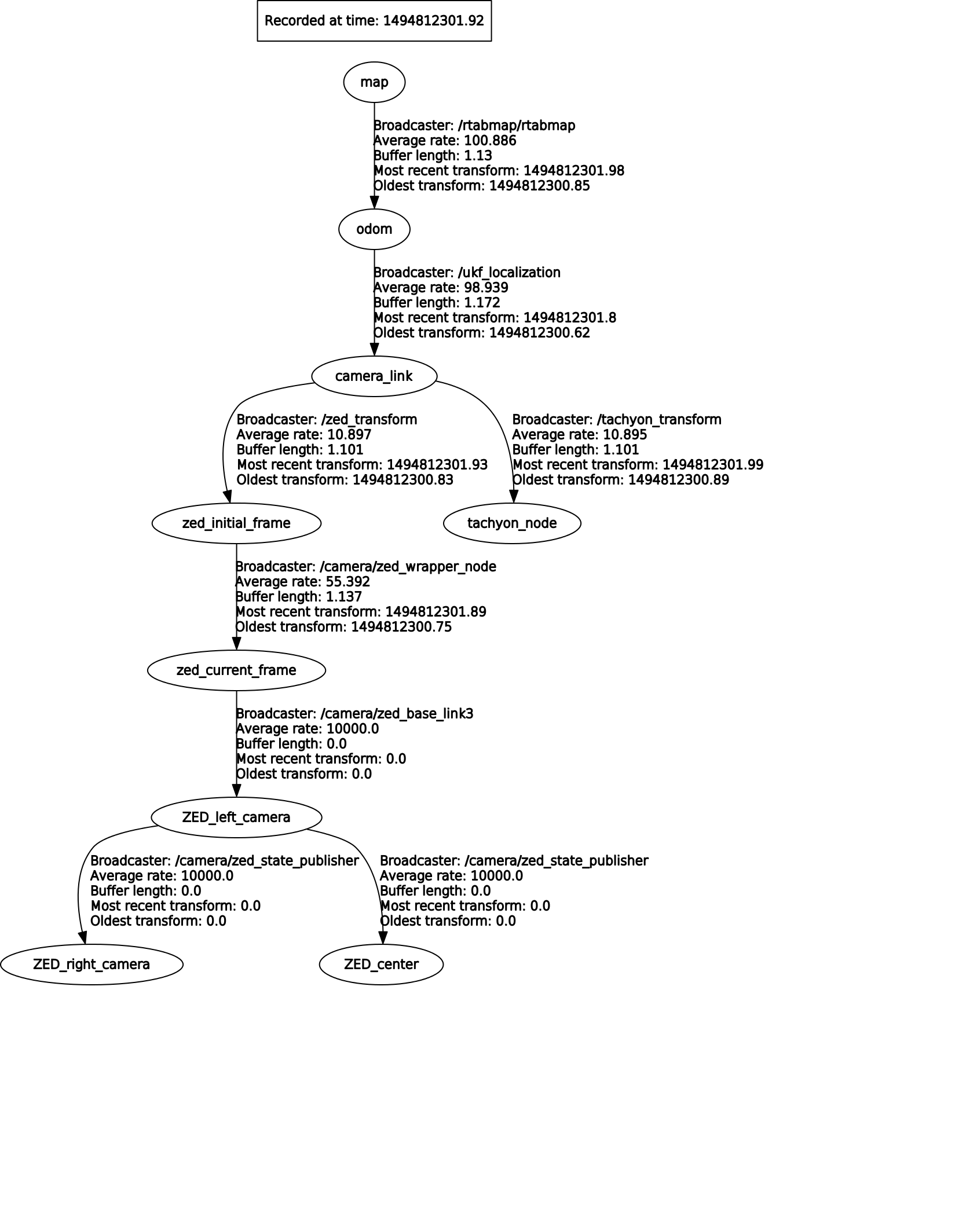

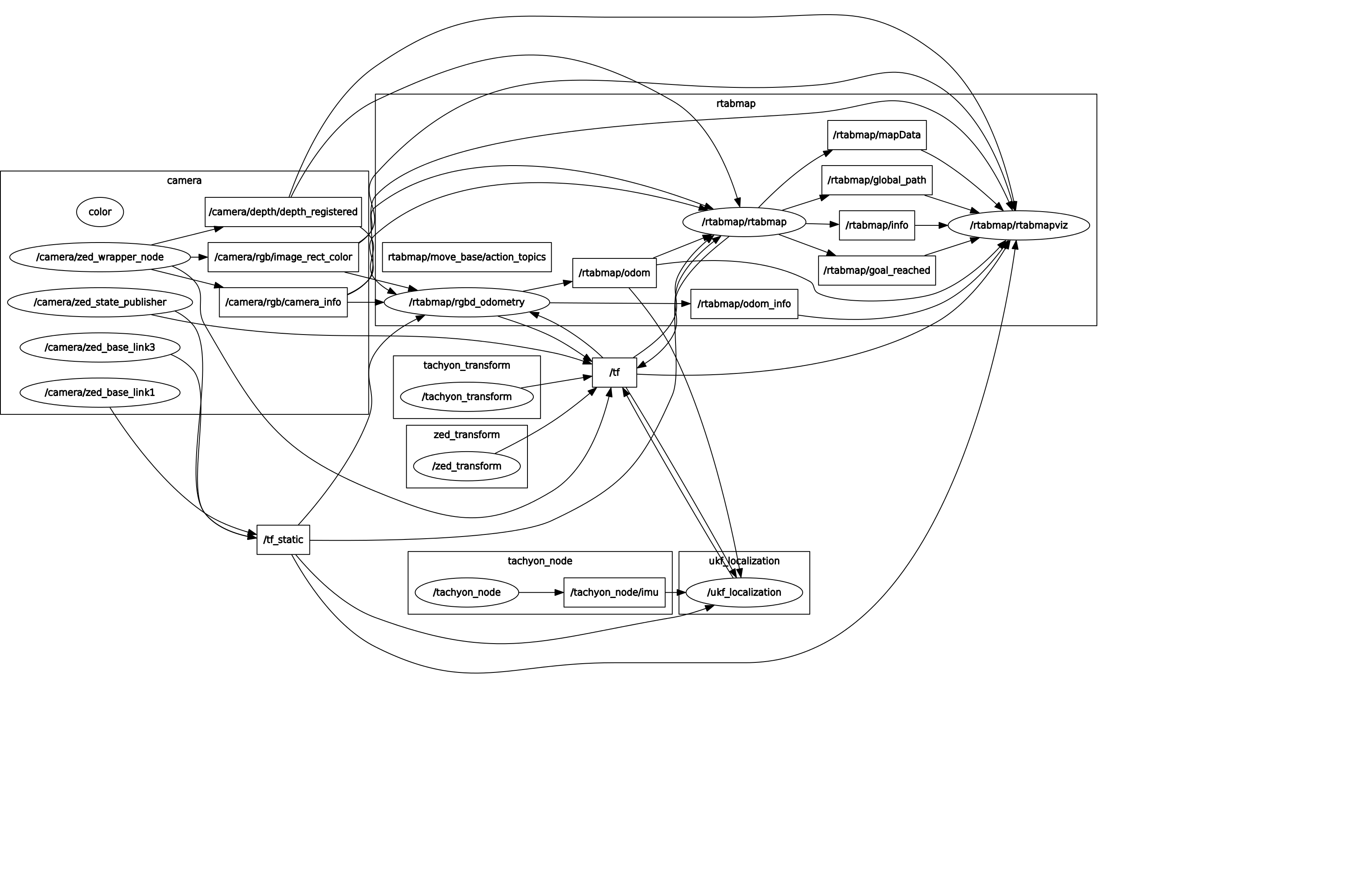

Here are my frames and rosgraph...

The acceleration and angular velocity seem to be working fine. However, the orientation is reported relative to camera_link, not relative to map. As a result, a 90-degree rotation makes robot_localization think the IMU is facing 180 degrees from its starting orientation, since it is rotated 90 degrees from camera_link. How can I have the acceleration and angular velocity from the IMU topic treated as relative to camera_link, but the orientation data treated as relative to map, so that r_l can fuse the orientation from the IMU with the orientation from rtabmap?
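To make the symptom concrete, here is a minimal sketch of what I believe is happening. It is plain Python with no ROS dependencies, and it only reflects my assumption about how the rotations stack, not what robot_localization does internally. The helper names (`yaw_to_quat`, `quat_multiply`, `quat_to_yaw`) are made up for this illustration.

```python
import math

def yaw_to_quat(yaw_deg):
    """Quaternion (x, y, z, w) for a rotation of yaw_deg about the z axis."""
    half = math.radians(yaw_deg) / 2.0
    return (0.0, 0.0, math.sin(half), math.cos(half))

def quat_multiply(a, b):
    """Hamilton product a * b for (x, y, z, w) quaternions."""
    ax, ay, az, aw = a
    bx, by, bz, bw = b
    return (
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
        aw * bw - ax * bx - ay * by - az * bz,
    )

def quat_to_yaw(q):
    """Yaw in degrees, in the range [-180, 180], extracted from (x, y, z, w)."""
    x, y, z, w = q
    return math.degrees(
        math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    )

# Assumed scenario: the camera (and the IMU bolted to it) turns 90 degrees.
camera_in_map = yaw_to_quat(90.0)  # true heading of camera_link in map
imu_reading = yaw_to_quat(90.0)    # heading the IMU reports for the same turn

# What I want: the IMU reading taken directly as the heading in map.
intended_yaw = quat_to_yaw(imu_reading)

# What I seem to get: the reading applied on top of the already-rotated
# camera_link, so the same 90-degree turn is counted twice.
double_counted_yaw = quat_to_yaw(quat_multiply(camera_in_map, imu_reading))

print("intended yaw:", round(intended_yaw, 3))
print("double-counted yaw magnitude:", round(abs(double_counted_yaw), 3))
```

The second value has a magnitude of 180 degrees (the sign depends on floating-point rounding at the wrap-around), which matches the 180-degree heading I see after a 90-degree turn.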

Any help would be appreciated! Here is my launch file:

<launch>
  <node pkg="sextant_tachyon_node"
        type="sextant_tachyon_node"
        name="tachyon_node" />

  <node name="tachyon_transform" pkg="tf" type="static_transform_publisher"
        args="0 0 0.005 0 0 0 camera_link tachyon_node 100" />

  <node pkg="robot_localization"
        type="ukf_localization_node"
        name="ukf_localization"
        clear_params="true"
        output="screen">
    <param name="frequency" value="100" />
    <param name="sensor_timeout" value="10" />
    <param name="map_frame" value="map" />
    <param name="odom_frame" value="odom" />
    <param name="base_link_frame" value="camera_link" />
    <param name="transform_time_offset" value="0.0" />
    <param name="imu0" value="/tachyon_node/imu" />
    <param name="odom0" value="/rtabmap/odom" />
    <rosparam param="imu0_config">
      [false, false, false,
       true, true, true,
       false, false, false,
       true, true, true,
       true, true, true]
    </rosparam>
    <rosparam param="odom0_config">
      [true, true, true,
       true, true, true,
       false, false, false,
       false, false, false,
       false, false, false]
    </rosparam>
    <param name="odom0_differential" value="false" />
    <param name="odom0_relative" value="false" />
    <param name="print_diagnostics" value="true" />
    <param name="odom0_queue_size" value="500" />
  </node>
</launch>