Where does the offset/rotation come from in an Industrial Calibration extrinsic Xtion-to-arm calibration?

I calibrated our Xtion to the arm of our robot with the following parameters:

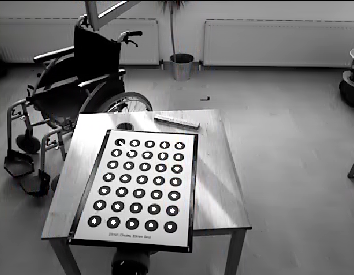

- target: CircleGrid5x7

- cost_type: LinkCameraCircleTargetReprjErrorPK

- transform_interface: ros_camera_housing_cti

The calibration used only a single static scene.

Outcome:

cost_per_observation: 0.0259

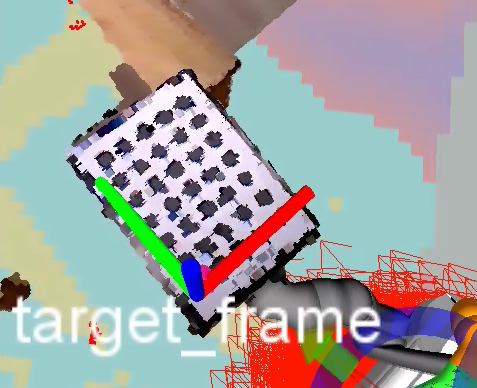

Recognized target:

Nothing was moved after calibration!

The target_frame is not perfectly oriented! What could be the source of this problem? Shouldn't everything be aligned perfectly with a cost_per_observation this low? As I understand it, the result should be perfect even if the intrinsic calibration of the camera is not correct. Is that right?
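To make the question concrete, here is a minimal sketch of what a per-observation reprojection cost measures. It assumes a plain pinhole model without distortion and a mean pixel residual; it is not the actual LinkCameraCircleTargetReprjErrorPK implementation. The grid spacing, focal lengths, principal point and target pose are all made-up illustration values. It checks one special case: a single view of a planar target facing the camera, where a wrong focal length is combined with a correspondingly scaled distance.

```python
import numpy as np

def grid_points(rows=5, cols=7, spacing=0.03):
    """Circle centres of a planar rows x cols grid in the target frame (z = 0).
    The 0.03 m spacing is a hypothetical value."""
    ys, xs = np.mgrid[0:rows, 0:cols]
    return np.column_stack(
        [xs.ravel() * spacing, ys.ravel() * spacing, np.zeros(rows * cols)]
    )

def project(points, R, t, f, cx, cy):
    """Pinhole projection of target-frame points into pixels (no distortion)."""
    p_cam = points @ R.T + t
    u = f * p_cam[:, 0] / p_cam[:, 2] + cx
    v = f * p_cam[:, 1] / p_cam[:, 2] + cy
    return np.column_stack([u, v])

def mean_reprojection_error(observed, predicted):
    """Mean Euclidean pixel distance between observed and predicted centres."""
    return float(np.mean(np.linalg.norm(observed - predicted, axis=1)))

# Hypothetical ground truth: target facing the camera, 1 m away.
pts = grid_points()
R = np.eye(3)
t_true = np.array([-0.09, -0.06, 1.0])
f_true, f_assumed = 570.0, 525.0  # made-up focal lengths in pixels
observed = project(pts, R, t_true, f_true, cx=320.0, cy=240.0)

# Candidate pose under the wrong focal length: same x/y, distance scaled by f_assumed / f_true.
t_est = np.array([t_true[0], t_true[1], t_true[2] * f_assumed / f_true])
predicted = project(pts, R, t_est, f_assumed, cx=320.0, cy=240.0)

error_px = mean_reprojection_error(observed, predicted)
distance_offset_m = abs(t_est[2] - t_true[2])
print(error_px, distance_offset_m)
```

In this special case the residual is zero up to floating-point rounding even though the estimated distance differs from the true one by several centimetres, so a low image-plane cost from a single scene does not by itself say whether the resulting frame is placed correctly. Whether this is what happens in my setup is exactly what I am unsure about.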