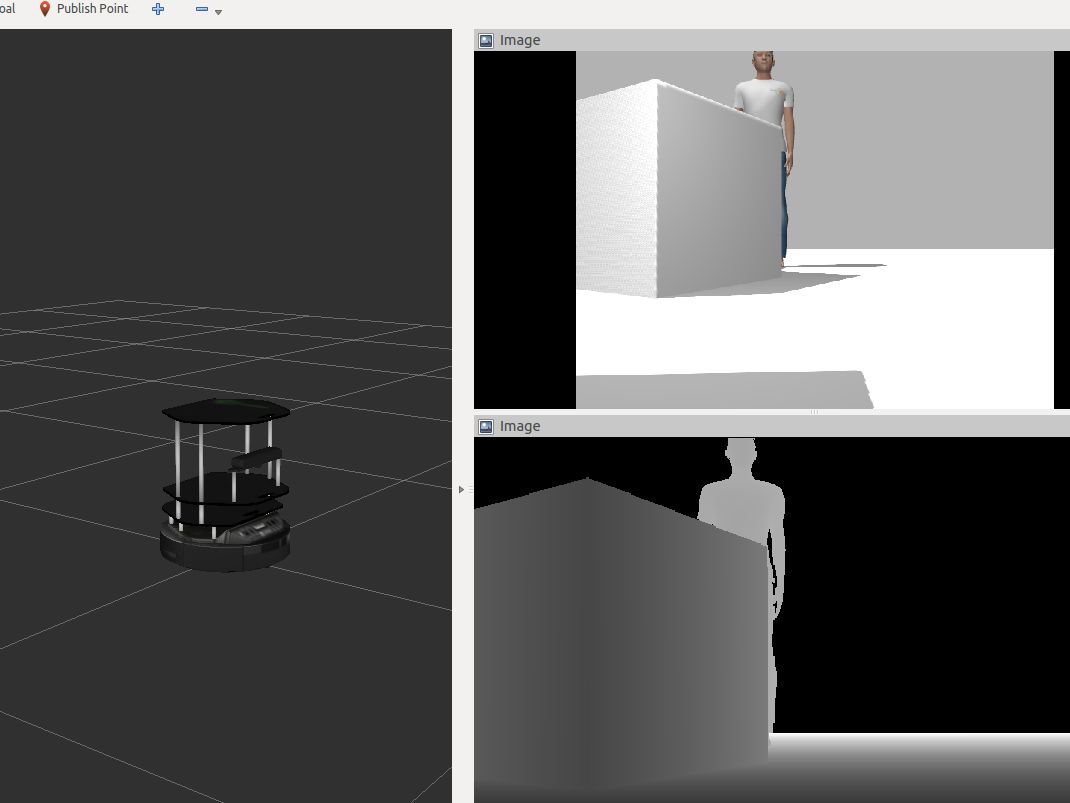

face_detector not detecting with RGBD camera

I recently installed the face_detector node and compilled it successfully. However, when I run the .launch given in the package I cannot detect any faces. I'm using Ubuntu 12.04, ROS Hydro, rosbuild and a Kinect both for its RGB camera and its depth sensor. I already checked dependency libraries and I think they're okay. Whenever I do:

rostopic echo face_detector/status

It shows me that the node it's working, but it's not publishing anything in the face detection topics. Also, even if I set the parameter do_display to true, it doesn't show a OpenCV window.

Here's the .launch I'm using to run the node. I run openni_launch before using this:

<launch>

<arg name="camera" default="camera" />

<arg name="image_topic" default="image_rect_color" />

<arg name="depth_topic" default="image_rect_raw" />

<arg name="fixed_frame" default="camera_rgb_optical_frame" />

<arg name="depth_ns" default="depth_registered" />

<!--include file="$(find openni_launch)/launch/openni.launch"/-->

<node name="$(anon dynparam)" pkg="dynamic_reconfigure" type="dynparam" args="set_from_parameters $(arg camera)/driver">

<param name="depth_registration" type="bool" value="true" />

</node>

<node pkg="face_detector" type="face_detector" name="face_detector" output="screen">

<remap from="camera" to="$(arg camera)" />

<remap from="image_topic" to="$(arg image_topic)" />

<remap from="depth_topic" to="$(arg depth_topic)" />

<remap from="depth_ns" to="$(arg depth_ns)" />

<param name="fixed_frame" type="string" value="$(arg fixed_frame)" />

<param name="classifier_name" type="string" value="frontalface" />

<param name="classifier_filename" type="string" value="$(env ROS_ROOT)/../OpenCV/haarcascades/haarcascade_frontalface_alt.xml" />

<param name="classifier_reliability" type="double" value="0.9"/>

<param name="do_continuous" type="bool" value="true" />

<param name="do_publish_faces_of_unknown_size" type="bool" value="true" />

<param name="do_display" type="bool" value="true" />

<param name="face_size_min_m" type="double" value="0.05" />

<param name="face_size_max_m" type="double" value="0.8" />

<param name="max_face_z_m" type="double" value="8.0" />

<param name="use_rgbd" type="bool" value="true" />

<param name="approximate_sync" type="bool" value="true" />

</node>

</launch>

EDIT: Thanks to Dan Lazewatsky's suggestion I created a sample code that subscribes to topics /face_detector/faces_cloud and /face_detector/people_tracker_measurements_array. However, it's still not detecting faces. Here's my code:

#include <ros/ros.h>

#include <cv_bridge/cv_bridge.h>

#include <opencv/cv.h>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/objdetect/objdetect.hpp>

#include <people_msgs/PositionMeasurementArray.h>

#include <sensor_msgs/PointCloud.h>

#include <sensor_msgs/image_encodings.h>

void face_cloud_Callback(sensor_msgs::PointCloud a)

{

ROS_INFO("I received some point cloud info!");

}

void pos_meas_Callback(people_msgs::PositionMeasurementArray a)

{

ROS_INFO("I received some position info!");

}

int main(int argc, char **argv)

{

ros::init(argc, argv, "faces");

ros::NodeHandle nh;

ros::Subscriber sub = nh.subscribe("/face_detector/faces_cloud", 1000, face_cloud_Callback);

ros::Subscriber sub2 = nh.subscribe("/face_detector/people_tracker_measurements_array", 1000, pos_meas_Callback);

ros::spin();

}