How to deal with an offset in precise GPS data?

I've got a robot with a very precise differential GPS (centimeter-level accuracy). For technical reasons, I can't mount the GPS directly above the robot's rotational center. Instead, it has to be offset in both the x- and the y-direction.

There are two things I'd like to do:

1. Infer the orientation of the robot from the GPS measurements.
2. Infer the position of the robot on the UTM grid from the position of the GPS.

Obviously, the first task is the harder one. Once it is solved, the second one is trivial.
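For the second task, here is a minimal sketch that assumes the heading alpha is already known. The question doesn't specify a convention, so this one is hypothetical: alpha is measured counterclockwise from UTM east, delta_x lies along the robot's forward axis, and delta_y points to its left. The function name `robot_center_from_gps` is also made up for illustration.

```python
import math

def robot_center_from_gps(gps_e, gps_n, alpha, delta_x, delta_y):
    """Return the UTM position (easting, northing) of the robot's rotational center.

    Hypothetical conventions (adjust to your setup):
      * alpha: heading in radians, counterclockwise from UTM east
      * delta_x: antenna offset along the robot's forward axis, in metres
      * delta_y: antenna offset along the robot's left axis, in metres
    """
    # Rotate the body-frame offset (lever arm) into the UTM frame ...
    off_e = math.cos(alpha) * delta_x - math.sin(alpha) * delta_y
    off_n = math.sin(alpha) * delta_x + math.cos(alpha) * delta_y
    # ... and subtract it from the measured antenna position.
    return gps_e - off_e, gps_n - off_n
```

As an example, take a robot heading north (alpha = pi/2) with the antenna 0.3 m behind and 0.2 m to the left of its center. In that case `robot_center_from_gps(e, n, math.pi / 2, -0.3, 0.2)` gives approximately `(e + 0.2, n + 0.3)`.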

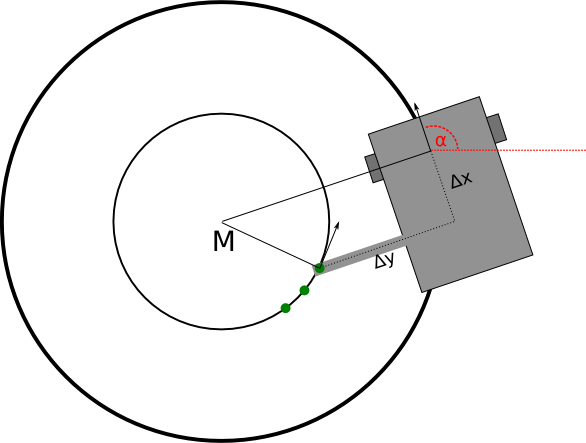

I hope the picture illustrates the situation. My robot has a differential drive, so its rotational center lies between the two wheels. The GPS is mounted on a rod with offsets delta_x and delta_y (in the picture, delta_x is negative). The green dots are the last three recorded GPS measurements. What I'd like to infer is the angle alpha.
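In formulas, this is the lever-arm relation between the GPS position G and the rotational center P, both in UTM coordinates. The symbol names and the convention are assumptions not stated in the question: a robot frame with x pointing forward and y to the left, and alpha measured counterclockwise from UTM east.

```latex
\mathbf{G} = \mathbf{P} +
\begin{pmatrix}\cos\alpha & -\sin\alpha \\ \sin\alpha & \cos\alpha\end{pmatrix}
\begin{pmatrix}\delta_x \\ \delta_y\end{pmatrix}
```

A single fix gives two equations in three unknowns (the two coordinates of P, plus alpha). That is why the orientation needs an extra constraint from additional measurements, while the position follows directly once alpha is known.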

From what I've tried so far, I gather that there is no unique solution if I only take the last two measurements into account. Therefore, I'm thinking about using the last three measurements. Unless they are collinear, they lie on exactly one circle with center M. This means that my robot is currently moving on a circle around the same center.
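Fitting that circle amounts to computing the circumcenter of the triangle formed by the three fixes. Below is a minimal sketch that also reports the turning direction. The name `circle_through` and the collinearity threshold `eps` are illustrative choices, not part of the question.

```python
import math

def circle_through(p1, p2, p3, eps=1e-6):
    """Circle through three GPS fixes given as (easting, northing) tuples.

    Returns ((cx, cy), radius, turn), where turn is +1 if p1 -> p2 -> p3 runs
    counterclockwise around the center and -1 if clockwise. Returns None if the
    points are (nearly) collinear, i.e. the robot drove straight.
    eps is a hypothetical threshold in square metres; tune it to your noise level.
    """
    # Work relative to p1: squaring raw UTM coordinates (~1e6 m) would
    # lose much of the centimetre-level precision.
    ax, ay = p2[0] - p1[0], p2[1] - p1[1]
    bx, by = p3[0] - p1[0], p3[1] - p1[1]
    # Twice the 2-D cross product; its sign is the turning direction.
    d = 2.0 * (ax * by - ay * bx)
    if abs(d) < eps:
        return None
    a2, b2 = ax * ax + ay * ay, bx * bx + by * by
    # Circumcenter relative to p1.
    ux = (by * a2 - ay * b2) / d
    uy = (ax * b2 - bx * a2) / d
    return (p1[0] + ux, p1[1] + uy), math.hypot(ux, uy), (1 if d > 0 else -1)
```

For example, `circle_through((1.0, 0.0), (0.0, 1.0), (-1.0, 0.0))` returns `((0.0, 0.0), 1.0, 1)`.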

Apparently my geometry skills simply aren't good enough: I haven't been able to compute a solution. The solution is fairly simple if either delta_x or delta_y is zero, but with both values nonzero I don't get anywhere.
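One possible approach is sketched below. It relies on assumptions that go beyond the question:

- the turn rate is constant over the three fixes;
- there is no sideways wheel slip;
- the robot is driving forwards;
- the body frame has x forward and y left;
- alpha is measured counterclockwise from UTM east.

For a differential drive, the center of rotation M then lies on the wheel axle. In robot coordinates it sits at (0, rho), where rho is the signed turning radius (positive for a left turn). The antenna's distance to M is therefore fixed by the offsets: r_gps^2 = delta_x^2 + (delta_y - rho)^2, which gives rho = delta_y ± sqrt(r_gps^2 - delta_x^2). To get alpha, compare the direction of the measured vector from M to the antenna with the direction of the same vector in robot coordinates, (delta_x, delta_y - rho). The function `heading_candidates` is hypothetical and takes the circle already fitted from the three fixes.

```python
import math

def heading_candidates(gps, center, r_gps, turn, delta_x, delta_y):
    """Possible robot headings at the latest GPS fix.

    gps     -- latest antenna position (easting, northing)
    center  -- center M of the circle through the last three fixes
    r_gps   -- radius of that circle (distance antenna -> M)
    turn    -- +1 if the fixes run counterclockwise around M, -1 if clockwise
    Hypothetical conventions: body frame x forward / y left, alpha measured
    counterclockwise from UTM east. Returns a list of (alpha, rho) pairs, where
    rho is the signed turning radius of the rotational center (positive = left).
    """
    # For a differential drive without side slip, M sits on the wheel axle at
    # body coordinates (0, rho), so r_gps^2 = delta_x^2 + (delta_y - rho)^2.
    # Noise can push r_gps slightly below |delta_x|; clamp instead of failing.
    root = math.sqrt(max(0.0, r_gps ** 2 - delta_x ** 2))
    # Direction of the vector M -> antenna in the UTM frame (measured).
    world_dir = math.atan2(gps[1] - center[1], gps[0] - center[0])
    candidates = []
    for rho in sorted({delta_y + root, delta_y - root}):
        # Forward speed of the rotational center is omega * rho, so driving
        # forwards requires rho to have the same sign as the turn direction.
        if rho * turn <= 0:
            continue
        # The same vector M -> antenna expressed in the robot frame.
        body_dir = math.atan2(delta_y - rho, delta_x)
        # Heading is the rotation between the two directions, wrapped to [-pi, pi).
        alpha = (world_dir - body_dir + math.pi) % (2.0 * math.pi) - math.pi
        candidates.append((alpha, rho))
    return candidates
```

Both roots of the quadratic are kept rather than silently picking one. When the roots have opposite signs, the forward-driving check rules one out. When |delta_y| > sqrt(r_gps^2 - delta_x^2), however, both survive and give two different headings, so a second cue such as wheel odometry is needed. An empty list means the assumptions (e.g. forward driving) don't hold.

If the three fixes are collinear, there is no circle: the robot drove straight, and alpha is simply the direction of travel from the first to the last fix. With fixes only a few centimetres apart, the fitted circle is likely to be very sensitive to measurement noise, so filtering over more samples is probably needed in practice.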

Can anybody give me a hint as to whether this problem is solvable and how you would go about solving it? At first glance it seems like a fairly common use of GPS.