Hello!

First, take a look at the link @LeoE posted in the comments; it has everything you need to know about tf. Take a look at this answer if you want some images.

If you want to fuse the sensor data, you don't want both sensors to use the same frame, since they are not at the same location.

When you publish a transform, you actually tell tf about two frames: the parent frame (`header.frame_id` in the message) and the child frame (`child_frame_id`).

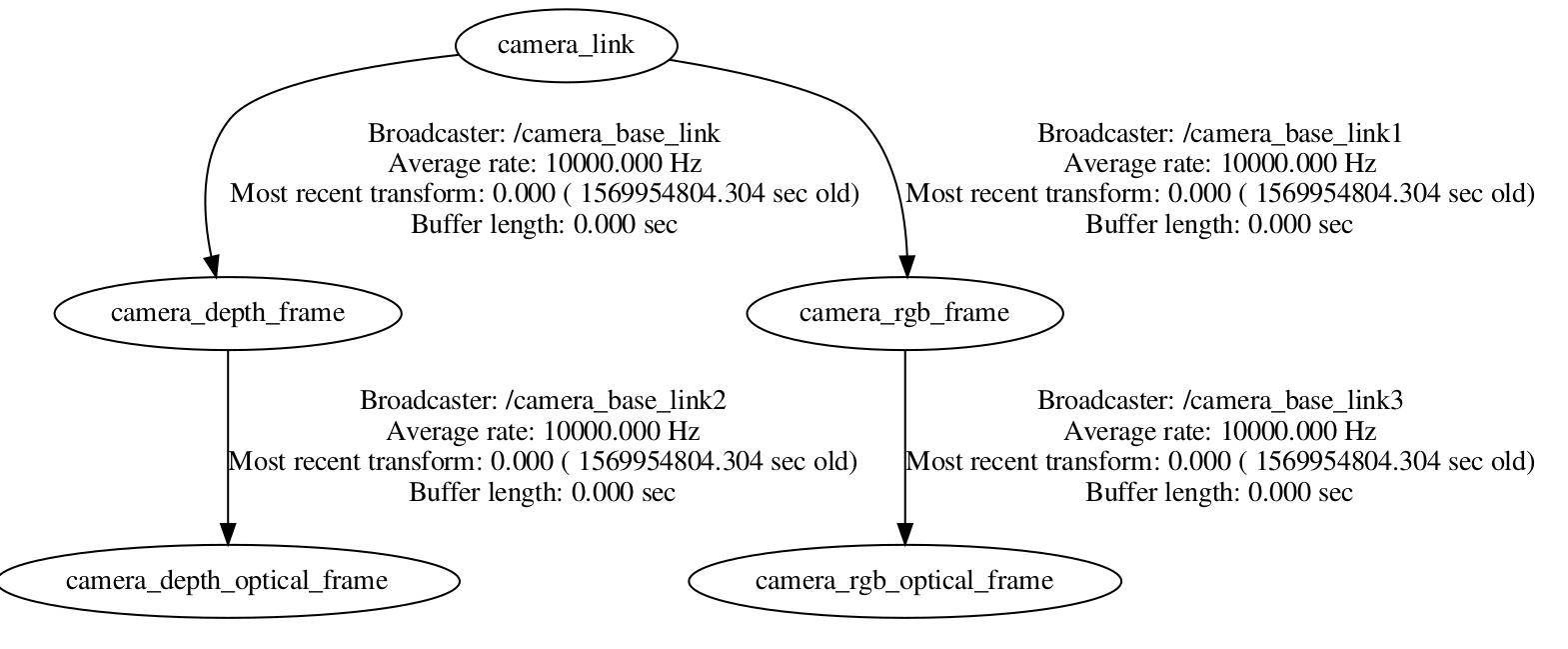

For example, here is the frame from the "rgbd_launch" package (you can see it with rosrun tf view_frames:

Each broadcaster publishes one parent/child pair. `camera_link`, the root of all the other frames, is never broadcast as a child; instead, ROS expects another node (your application node) to say where this frame is.
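To make the parent/child idea concrete, here is a toy model in plain Python (not the tf API): each broadcast is stored as a `(parent, child)` pair, and a root is any frame that appears as a parent but never as a child. The `rgbd_launch`-style frame names are assumptions for illustration, and `known_frames`/`root_frames` are hypothetical helpers.

```python
from __future__ import annotations

from typing import Iterable


def known_frames(edges: Iterable[tuple[str, str]]) -> set[str]:
    """Each broadcast introduces two frames: its parent and its child."""
    frames: set[str] = set()
    for parent, child in edges:
        frames.update((parent, child))
    return frames


def root_frames(edges: Iterable[tuple[str, str]]) -> set[str]:
    """Roots appear as a parent but never as a child (nobody broadcasts where they are)."""
    edges = list(edges)
    children = {child for _, child in edges}
    return known_frames(edges) - children


# Assumed frame names, in the style of the rgbd_launch camera tree.
camera_edges = [
    ("camera_link", "camera_rgb_frame"),
    ("camera_rgb_frame", "camera_rgb_optical_frame"),
    ("camera_link", "camera_depth_frame"),
    ("camera_depth_frame", "camera_depth_optical_frame"),
]

print(root_frames(camera_edges))  # {'camera_link'}
```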

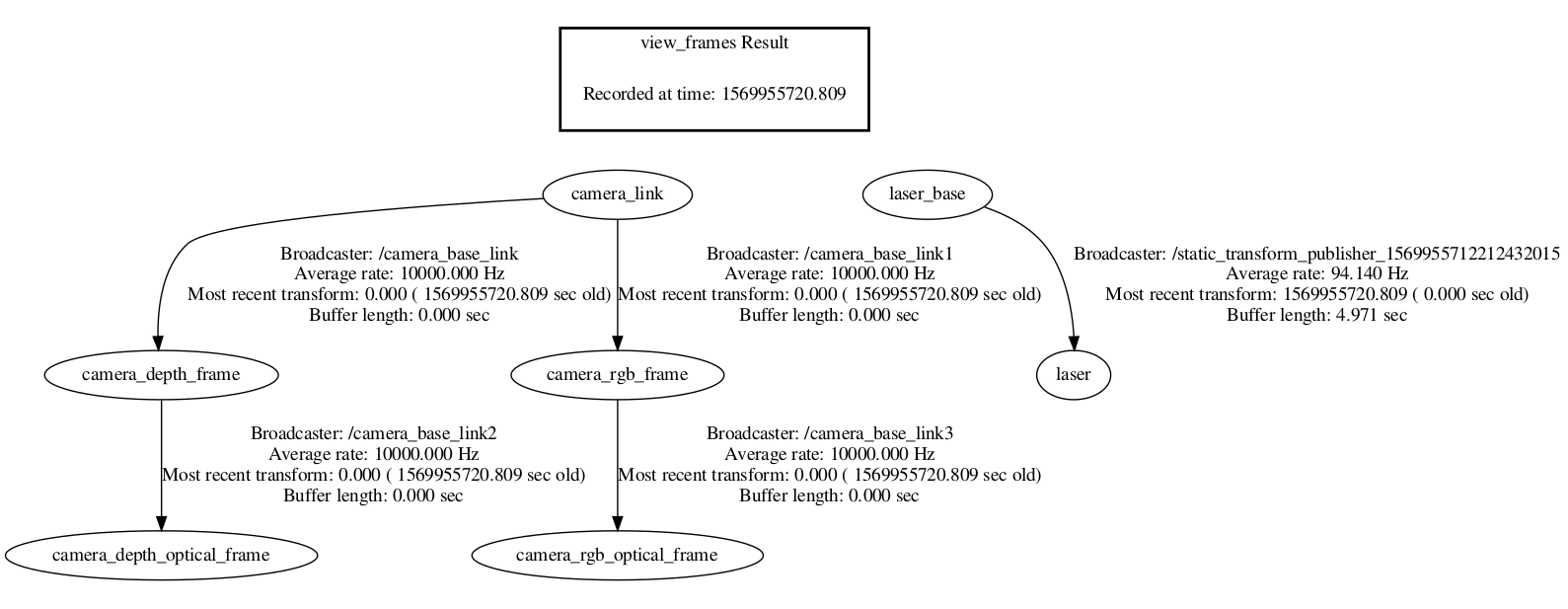

If you launch your lidar node, you should have something like this (I don't have a lidar so I just assumed a single tf was published, I used static_transform_publisher :

In this case, `lookupTransform` between a camera frame and the lidar frame will fail, because the two tf trees are not connected.
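Why the lookup fails can be sketched with another plain-Python toy (again, not tf itself): two frames can only be related if walking up their parent links ends at the same root. The lidar frame names `laser_base` and `laser` are hypothetical, as are the helpers; real tf also stores the transform values and timestamps, which this sketch ignores.

```python
from __future__ import annotations


def parent_map(edges: list[tuple[str, str]]) -> dict[str, str]:
    """child -> parent; in a tf tree every frame has at most one parent."""
    return {child: parent for parent, child in edges}


def root_of(frame: str, parents: dict[str, str]) -> str:
    """Follow parent links upward until reaching a frame nobody broadcasts as a child."""
    while frame in parents:
        frame = parents[frame]
    return frame


def can_lookup(target: str, source: str, edges: list[tuple[str, str]]) -> bool:
    """Two frames can only be related if they share a root (same tree).

    This toy ignores unknown frames, transform values and timestamps.
    """
    parents = parent_map(edges)
    return root_of(target, parents) == root_of(source, parents)


camera_edges = [
    ("camera_link", "camera_rgb_frame"),
    ("camera_link", "camera_depth_frame"),
]
lidar_edges = [("laser_base", "laser")]  # hypothetical lidar frames

edges = camera_edges + lidar_edges
print(can_lookup("camera_rgb_frame", "laser", edges))  # False: two separate trees

# One extra transform between the trees (camera_link -> laser_base) joins them.
print(can_lookup("camera_rgb_frame", "laser", edges + [("camera_link", "laser_base")]))  # True
```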

In your application, you should tell tf where the camera is located relative to the lidar (or the inverse), or, even better, where both the camera and the lidar are relative to a common reference point (a robot chassis?).

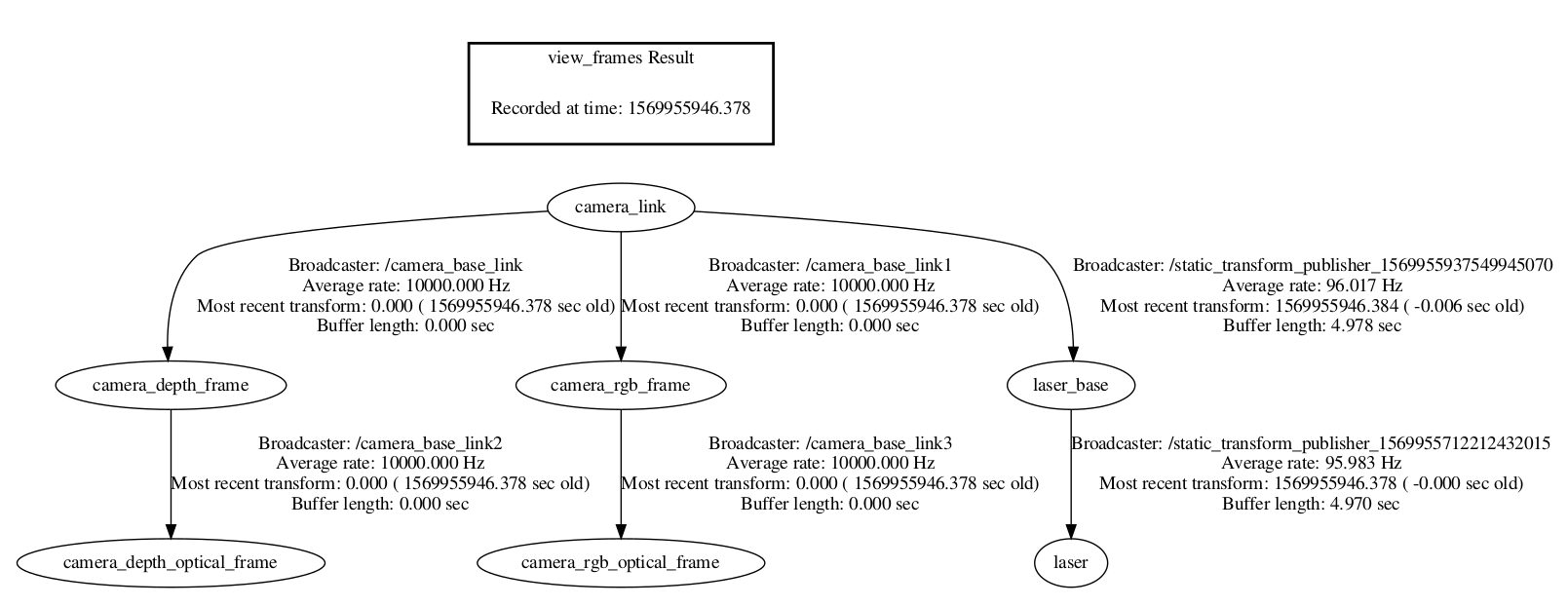

For example, I can tell TF that the lidar is 20cm above the camera (Z-axis) with rosrun tf static_transform_publisher 0 0 0.2 0 0 0 camera_link laser_base 10:
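The six numbers in that command are x y z (meters) followed by yaw pitch roll (radians); the trailing `10` is the publishing period in milliseconds. The plain-Python sketch below (helper names are hypothetical) shows what such a transform means for a point measured by the lidar: it is rotated by Rz(yaw)·Ry(pitch)·Rx(roll), the convention tf's `setRPY` uses as far as I know, then shifted by (x, y, z) to be expressed in `camera_link`.

```python
from __future__ import annotations

import math


def rotation_ypr(yaw: float, pitch: float, roll: float) -> list[list[float]]:
    """R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in radians."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def child_to_parent(point, x, y, z, yaw, pitch, roll):
    """Express a child-frame point in the parent frame: p_parent = R @ p_child + t."""
    r = rotation_ypr(yaw, pitch, roll)
    t = (x, y, z)
    return tuple(sum(r[i][j] * point[j] for j in range(3)) + t[i] for i in range(3))


# Same numbers as: static_transform_publisher 0 0 0.2 0 0 0 camera_link laser_base 10
hit_in_laser = (1.0, 0.0, 0.0)  # hypothetical lidar return 1 m in front of the sensor
print(child_to_parent(hit_in_laser, 0, 0, 0.2, 0, 0, 0))  # (1.0, 0.0, 0.2)
```

With no rotation the transform is a pure offset, so the same point simply appears 0.2 m higher in `camera_link`.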

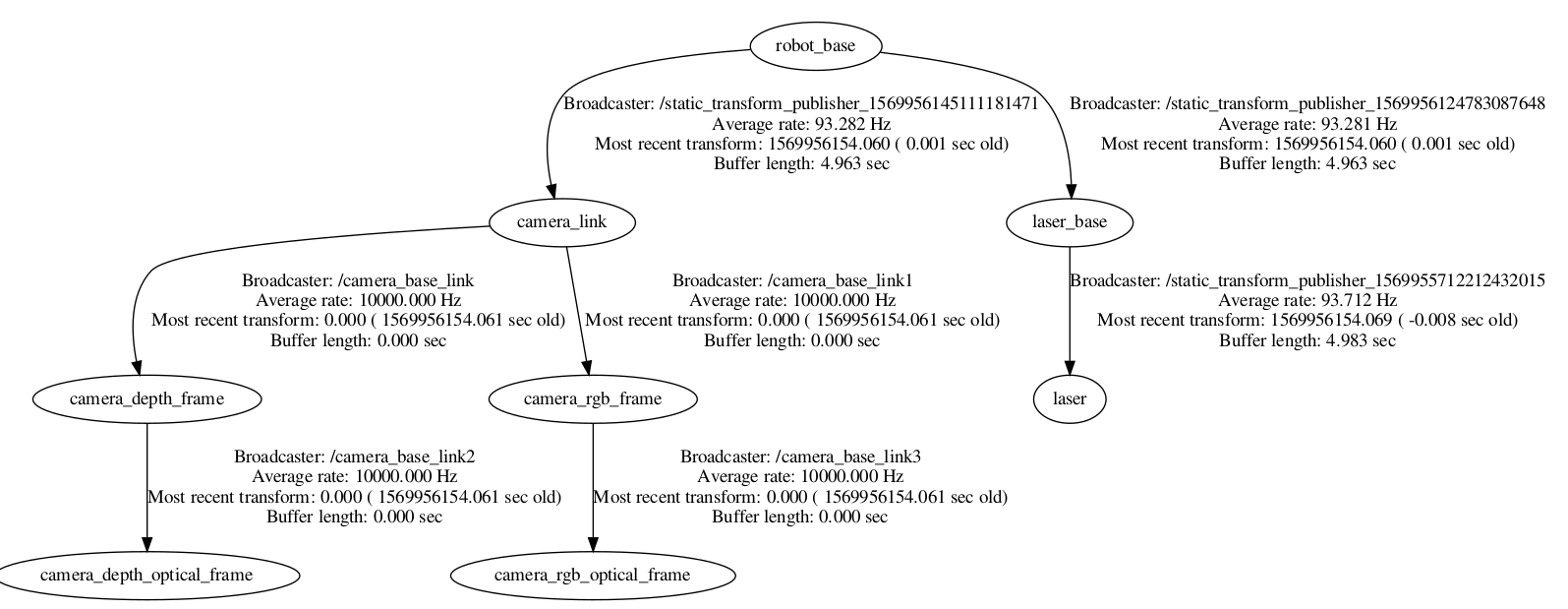

Or if both the camera and the lidar are connected to the same robot, I can tell tf too :
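With a common chassis frame, tf chains the transforms for you. The plain-Python sketch below shows the arithmetic under a strong simplifying assumption: no mount is rotated, so chaining transforms reduces to summing offsets up to the root and subtracting. The frame name `base_link`, the offsets and the helper names are all hypothetical.

```python
from __future__ import annotations

# Hypothetical mounting offsets in meters: child -> (parent, (x, y, z)).
# Assumption: no mount is rotated, so chaining transforms is just adding offsets.
MOUNTS = {
    "camera_link": ("base_link", (0.10, 0.0, 0.30)),
    "laser_base": ("base_link", (0.0, 0.0, 0.50)),
}


def origin_in_root(frame: str) -> tuple[str, tuple[float, float, float]]:
    """Walk up to the root frame, summing offsets along the way."""
    x = y = z = 0.0
    while frame in MOUNTS:
        frame, (dx, dy, dz) = MOUNTS[frame]
        x, y, z = x + dx, y + dy, z + dz
    return frame, (x, y, z)


def origin_in(target: str, source: str) -> tuple[float, ...]:
    """Position of the `source` frame's origin expressed in `target` (translation only)."""
    root_t, p_target = origin_in_root(target)
    root_s, p_source = origin_in_root(source)
    if root_t != root_s:
        raise LookupError(f"{target} and {source} are not in the same tree")
    return tuple(s - t for s, t in zip(p_source, p_target))


print(origin_in("camera_link", "laser_base"))  # approximately (-0.1, 0.0, 0.2)
```

With rotated mounts you would compose full rotation-plus-translation transforms instead, which is the work tf does for you when you call `lookupTransform`.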

There are different ways to tell tf the transforms between your sensors; the best one (I think) is a URDF with `robot_state_publisher`:

http://wiki.ros.org/tf/Tutorials

http://wiki.ros.org/robot_state_publisher/Tutorials

http://wiki.ros.org/urdf/Tutorials
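As a rough idea of what such a URDF could contain, the plain-Python sketch below embeds a minimal, hypothetical robot description with two fixed joints and lists the parent/child pairs it defines. Once loaded into the `robot_description` parameter, `robot_state_publisher` would publish a transform for each joint. The link names and offsets are assumptions, and this small parser reads only `xyz` and ignores `rpy`.

```python
import xml.etree.ElementTree as ET

# Hypothetical URDF: camera and lidar both fixed to the chassis (base_link).
URDF = """<robot name="my_robot">
  <link name="base_link"/>
  <link name="camera_link"/>
  <link name="laser_base"/>
  <joint name="camera_joint" type="fixed">
    <parent link="base_link"/>
    <child link="camera_link"/>
    <origin xyz="0.1 0 0.3" rpy="0 0 0"/>
  </joint>
  <joint name="laser_joint" type="fixed">
    <parent link="base_link"/>
    <child link="laser_base"/>
    <origin xyz="0 0 0.5" rpy="0 0 0"/>
  </joint>
</robot>"""


def joint_edges(urdf_xml):
    """Return (parent, child, xyz) for every joint; rpy is ignored in this sketch."""
    root = ET.fromstring(urdf_xml)
    edges = []
    for joint in root.iter("joint"):
        parent = joint.find("parent").get("link")
        child = joint.find("child").get("link")
        origin = joint.find("origin")
        xyz = origin.get("xyz", "0 0 0") if origin is not None else "0 0 0"
        edges.append((parent, child, tuple(float(v) for v in xyz.split())))
    return edges


for parent, child, xyz in joint_edges(URDF):
    print(f"{parent} -> {child} at {xyz}")
# base_link -> camera_link at (0.1, 0.0, 0.3)
# base_link -> laser_base at (0.0, 0.0, 0.5)
```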

ROS Answers is licensed under Creative Commons Attribution 3.0 Content on this site is licensed under a Creative Commons Attribution Share Alike 3.0 license.
