The site is read-only. Please transition to use Robotics Stack Exchange

| ROS Resources: Documentation | Support | Discussion Forum | Index | Service Status | ros @ Robotics Stack Exchange |

| 1 | initial version |

I looked into the problem together with scgroot. I've added a dockerfile and a docker-compose to the github page, this way people familiar with docker can quickly recreate the problem stated above. I've also added one extra piece of example code which uses 1 node instead of 10.

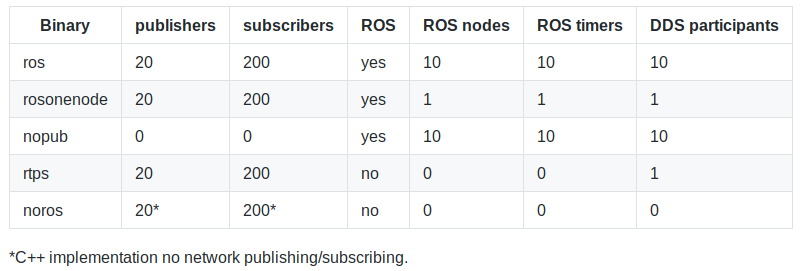

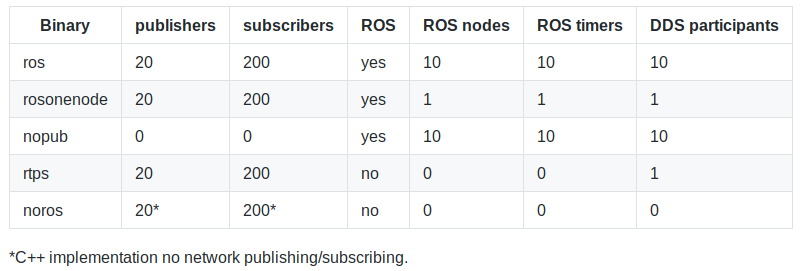

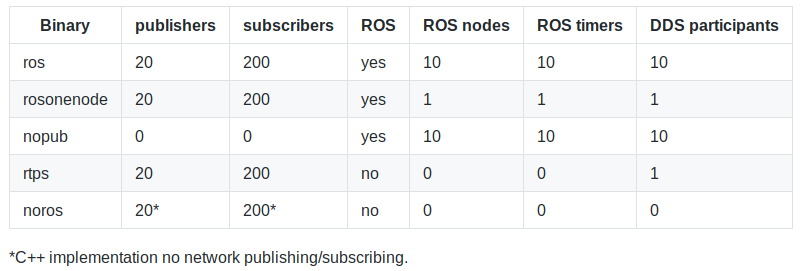

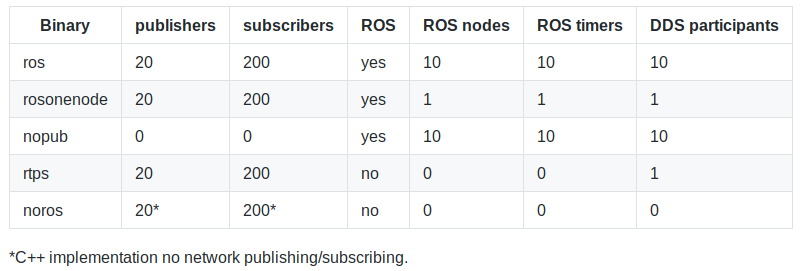

A description of what each binary contains is given here:

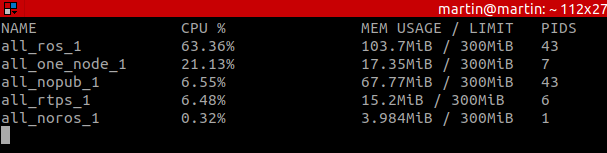

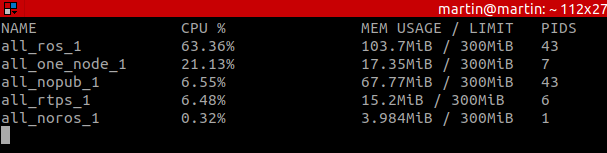

The resulting CPU usage when the binaries each get their own isolated docker container is given here:

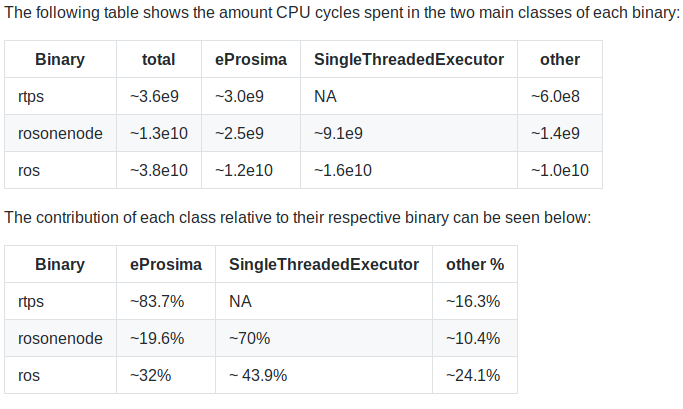

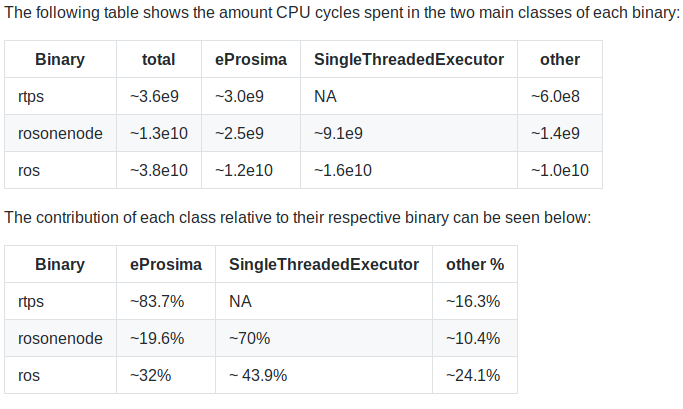

Inspection with valgrind (callgrind) and perf gives:

Conclusions:

Solution / Answer:

A more in-depth explanation of our research and measurements can be found in the README.md of the github page mentioned in the question. Since there does not appear to be a quick and easy solution to this problem (besides using 1 node for your entire application to reduce CPU usage) we opened a discussion on ROS discourse. People who are interested can follow the link that will be posted on the github page.

| 2 | No.2 Revision |

I looked into the problem together with scgroot. I've added a dockerfile and a docker-compose to the github page, this way people familiar with docker can quickly recreate the problem stated above. I've also added one extra piece of example code which uses 1 node instead of 10.

A description of what each binary contains is given here:here:

The resulting CPU usage when the binaries each get their own isolated docker container is given here:

Name CPU% MEM USAGE PIDS

ros 63.36 103.70MiB 43

one node 21.13 17.35MiB 7

nopub 6.55 67.77MiB 43

rtps 6.48 15.20MiB 6

no ros 0.32 3.98MiB 1

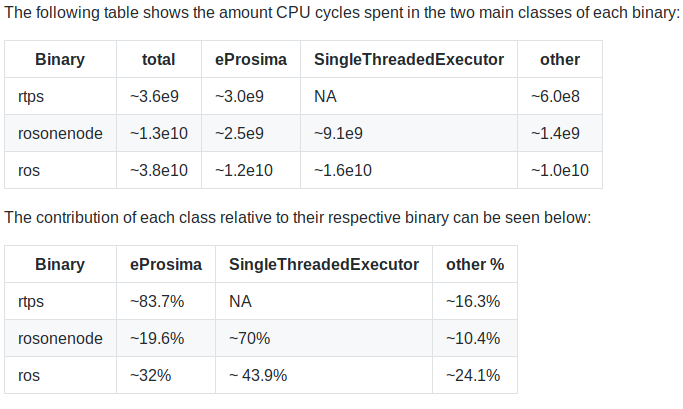

Inspection with valgrind (callgrind) and perf gives:

Conclusions:

Solution / Answer:

A more in-depth explanation of our research and measurements can be found in the README.md of the github page mentioned in the question. Since there does not appear to be a quick and easy solution to this problem (besides using 1 node for your entire application to reduce CPU usage) we opened a discussion on ROS discourse. People who are interested can follow the link that will be posted on the github page.

| 3 | No.3 Revision |

I looked into the problem together with scgroot. I've added a dockerfile and a docker-compose to the github page, this way people familiar with docker can quickly recreate the problem stated above. I've also added one extra piece of example code which uses 1 node instead of 10.

A description of what each binary contains is given here:

The resulting CPU usage when the binaries each get their own isolated docker container is given here:

Name CPU% MEM USAGE PIDS

ros 63.36 103.70MiB 43

one node rosonenode 21.13 17.35MiB 7

nopub 6.55 67.77MiB 43

rtps 6.48 15.20MiB 6

no ros noros 0.32 3.98MiB 1

Inspection with valgrind (callgrind) and perf gives:

Conclusions:

Solution / Answer:

A more in-depth explanation of our research and measurements can be found in the README.md of the github page mentioned in the question. Since there does not appear to be a quick and easy solution to this problem (besides using 1 node for your entire application to reduce CPU usage) we opened a discussion on ROS discourse. People who are interested can follow the link that will be posted on the github page.

| 4 | No.4 Revision |

I looked into the problem together with scgroot. I've added a dockerfile and a docker-compose to the github page, this way people familiar with docker can quickly recreate the problem stated above. I've also added one extra piece of example code which uses 1 node instead of 10.

A description of what each binary contains is given here:

Binary | Publishers| Subscribers| ROS| ROS nodes| ROS timers| DDS participants|

ros | 20| 200| yes| 10| 10| 10|

rosonenode| 20| 200| yes| 1| 1| 1|

nopub | 0| 0| yes| 10| 10| 10|

rtps | 20| 200| no| 0| 0| 1|

noros | 20*| 200*| no| 0| 0| 0|

*C++ implementation no network publishing/subscribing

The resulting CPU usage when the binaries each get their own isolated docker container is given here:

Name CPU% MEM USAGE PIDS

ros 63.36 103.70MiB 43

rosonenode 21.13 17.35MiB 7

nopub 6.55 67.77MiB 43

rtps 6.48 15.20MiB 6

noros 0.32 3.98MiB 1

Inspection with valgrind (callgrind) and perf gives:

Binary Total DDS Executor Other

rtps 3.6e9 3.0e9 NA 6.0e8

rosonenode 1.3e10 2.5e9 9.1e9 1.4e9

ros 3.8e10 1.2e10 1.6e10 1.0e10

Values given in average #CPU cycles

Binary Total DDS Executor Other

rtps 100 83.7 NA 16.3

rosonenode 100 19.6 70.0 10.4

ros 100 32.0 43.9 24.1

Values given in % of total CPU usage contribution

Conclusions:

Solution / Answer:

A more in-depth explanation of our research and measurements can be found in the README.md of the github page mentioned in the question. Since there does not appear to be a quick and easy solution to this problem these problems (besides using 1 node for your entire application to reduce CPU usage) we opened a discussion two discussions on ROS discourse. People who are interested in discussing either problem can follow the link links that will be posted on the github page.

ROS Answers is licensed under Creative Commons Attribution 3.0 Content on this site is licensed under a Creative Commons Attribution Share Alike 3.0 license.

ROS Answers is licensed under Creative Commons Attribution 3.0 Content on this site is licensed under a Creative Commons Attribution Share Alike 3.0 license.