How to tune mono visual odometry parameters of viso2_ros?

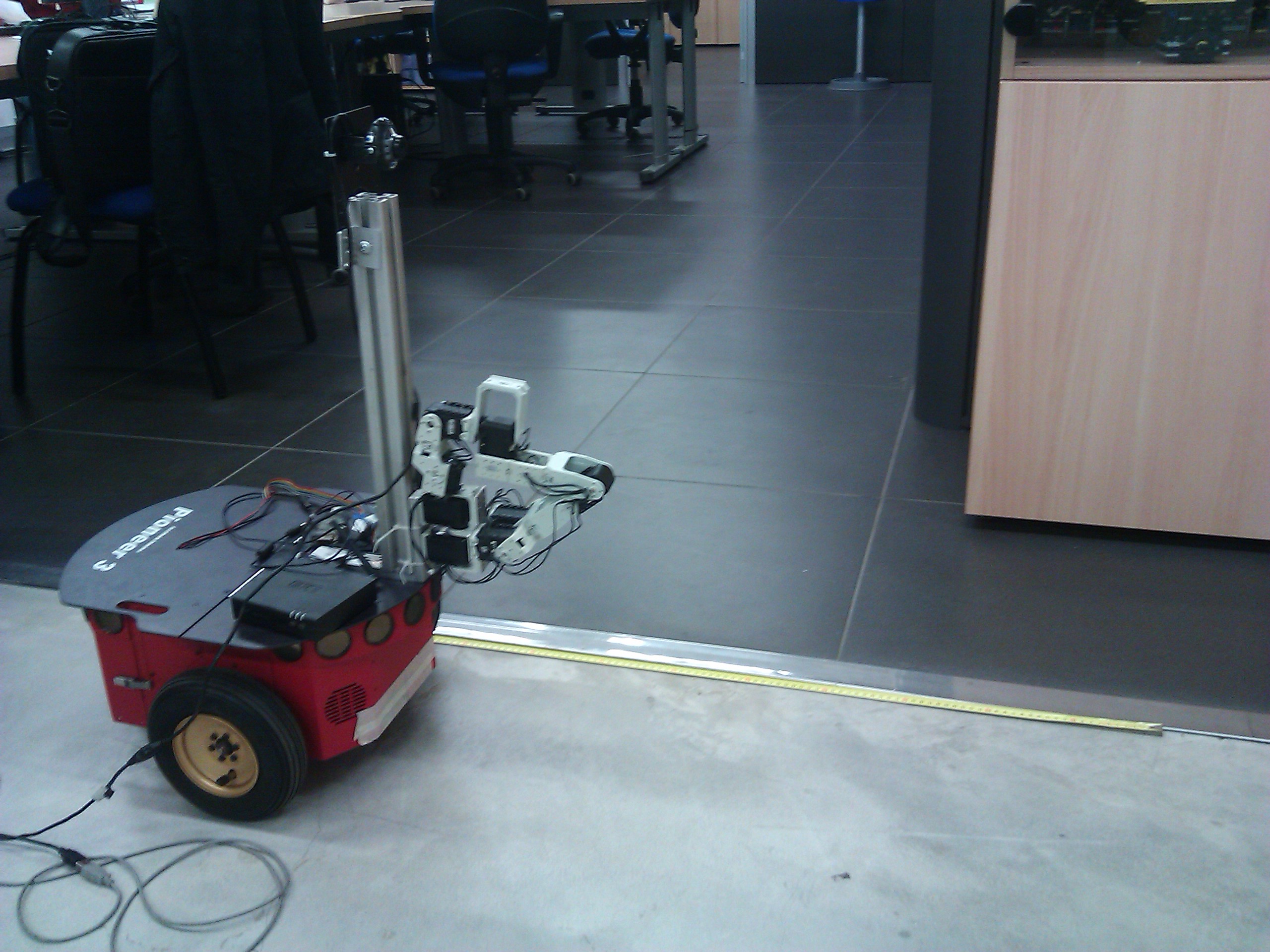

Hi everybody, I'm testing odometry accuracy of viso2_ros monocular vision. This is my system based on a differential drive:

I drove the robot straight ahead for one meter and then turned it 90° to the right, in place: http://www.youtube.com/watch?v=UMFbkt4-6s4

As you can see, the viso2_ros monocular odometer estimates 1.6626688201 meters along the X direction instead of 1 meter (see the X position in the console). In addition, when I rotate the robot, it estimates a totally wrong position and orientation. My question is: how should I tune all these parameters? I used the following:

Matcher parameters:

nms_n = 3

nms_tau = 50

match_binsize = 50

match_radius = 200

match_disp_tolerance = 2

outlier_disp_tolerance = 5

outlier_flow_tolerance = 5

multi_stage = 1

half_resolution = 1

refinement = 1

Bucketing parameters:

max_features = 2

bucket_width = 50

bucket_height = 50

Mono odometry parameters:

camera_height = 0.714

camera_pitch = 0

ransac_iters = 2000

inlier_threshold = 1e-05

motion_threshold = 100
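To compare runs while changing these values, it may help to express the straight-line error from the test above as a ratio and a percentage. The snippet below is only a minimal sketch: the helper name `scale_error` is made up for illustration, and the true distance of 1 m is my hand-measured value, so it carries its own uncertainty.

```python
def scale_error(estimated_m, true_m):
    """Return (ratio, percent error) of an estimated distance vs. the true one."""
    ratio = estimated_m / true_m
    percent = (estimated_m - true_m) / true_m * 100.0
    return ratio, percent


# Values from the straight-line test: X reported by the odometer vs. measured distance.
ratio, percent = scale_error(1.6626688201, 1.0)
print(f"scale ratio: {ratio:.3f}, error: {percent:+.1f}%")
```

Logging this number for every parameter set should make it easier to tell whether a change actually improves the estimate.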

I tried different values for inlier_threshold and motion_threshold, without any success. Thank you, everybody.
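In case it is useful for reproducing my trials, the manual trial and error could be turned into a small sweep. The sketch below only builds command lines and does not run anything. It assumes the node is started as `rosrun viso2_ros mono_odometer` and that private parameters are passed with the `_name:=value` syntax. The candidate values in `SWEEP` are hypothetical placeholders, not recommendations, and the image topic remappings are left out.

```python
import itertools

# Baseline mono odometry values copied from the list above.
BASE_PARAMS = {
    "camera_height": 0.714,
    "camera_pitch": 0,
    "ransac_iters": 2000,
    "inlier_threshold": 1e-05,
    "motion_threshold": 100,
}

# Hypothetical candidate values for the two parameters being varied.
SWEEP = {
    "inlier_threshold": [1e-05, 1e-04, 1e-03],
    "motion_threshold": [50, 100, 200],
}


def rosrun_command(params, package="viso2_ros", node="mono_odometer"):
    """Build a rosrun command line with private parameters (_name:=value)."""
    args = [f"_{name}:={value}" for name, value in params.items()]
    return " ".join(["rosrun", package, node] + args)


def sweep_commands(base, sweep):
    """Return one command per combination of the swept parameter values."""
    names = list(sweep)
    commands = []
    for values in itertools.product(*(sweep[n] for n in names)):
        params = dict(base)          # copy so the baseline stays untouched
        params.update(zip(names, values))
        commands.append(rosrun_command(params))
    return commands


if __name__ == "__main__":
    for cmd in sweep_commands(BASE_PARAMS, SWEEP):
        print(cmd)
```

Each printed command could then be run against the same recorded drive, so that the only thing that changes between runs is the parameter set.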