Hector SLAM with odometry switch

I've spent a good amount of time trying to find a solution to the Hector SLAM with long corridors localisation problem(if you found this through a Google search you know exactly what I'm talking about). Instead of doing sensor fusion with the odometry of my robot I’ve decided to do a switch as soon as parameters in the scan matching covariance in Hector SLAM start showing erratic behaviour. The variable is called lastScanMatchCov. In my testing I found that the middle value of the covariance matrix spikes in long corridor scenarios, the spike occurs early enough for the odometry “switch” to trigger.

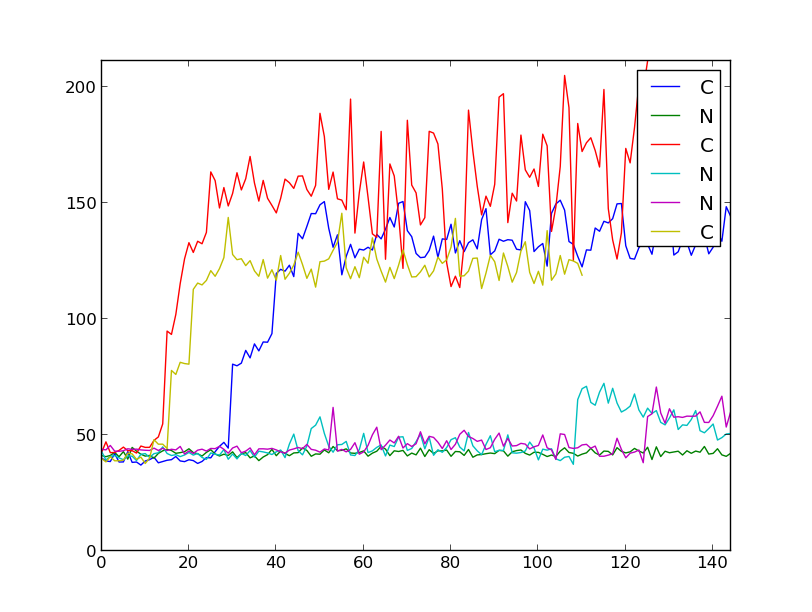

The plot shows the middle element in different scenarios, N are the non-long-corridor environments while C are the long-corridor environments. As seen in the plot all the long-corridor environments yield a great spike, this is used as the trigger to use odometry.

When the switch triggers I want to use the odometry pose estimate instead of the current poseHintWorld that the laser scan matching algorithm uses. I know that the odometry is being published as /odom topic, and rostopic echoing this topic gives me updates on the position. My problem is how do I get the odometry position estimate? I’m fairly new to ROS and I’ve finished the subscriber/publisher tutorial, but still can’t seem to get a hole through. Here are the code parts I want to edit in HectorSlamProcessor.h

bool update(const DataContainer& dataContainer, const Eigen::Vector3f& poseHintWorld, bool map_without_matching = false, bool force_update = false)

{

bool ret = false;

Eigen::Vector3f newPoseEstimateWorld;

if (!map_without_matching){

//middle value of 3x3 covariance matrix

if (lastScanMatchCov(1,1) > 100.0)

{

std::cout <<"\nunsure!\n";

//USE DIFFERENT POSE ESTIMATE

//poseHintWorld = "position estimate from /odom topic"

//newPoseEstimateWorld = poseHintWorld

}

newPoseEstimateWorld = (mapRep->matchData(poseHintWorld, dataContainer, lastScanMatchCov));

}else{

newPoseEstimateWorld = poseHintWorld;

}

lastScanMatchPose = newPoseEstimateWorld;

//std::cout << "\nt1:\n" << newPoseEstimateWorld << "\n";

//std::cout << "\n1";

//std::cout << "\n" << lastScanMatchPose << "\n";

if(util::poseDifferenceLargerThan(newPoseEstimateWorld, lastMapUpdatePose, paramMinDistanceDiffForMapUpdate, paramMinAngleDiffForMapUpdate) || map_without_matching || force_update){

ret = true;

}

Hope you guys can help me out.

Edit: I should mention that the map is generated in a very harsh environment for Hector SLAM.

- The corridors are way longer than the range of the scanner, eliminating the possibility of the scanners seeing the wall at the end of the corridor.

- The map is drawn such that the walls along the corridor have absolutely 0 features. This is done because the robots have a really good odometry estimate by combining IMU and odometry.

- The solution must be a variant of Hector SLAM, this is not my decision, these are just my instructions :)

Update I'm now able to extract the position data of the robot with the help of a subscriber

void HectorMappingRos::odomCallback(const nav_msgs::Odometry::ConstPtr& msg)

{

Eigen::Vector3f odometry_pose;

odometry_pose(0) = msg->pose.pose.position.x;

odometry_pose(1) = msg->pose.pose.position.y;

odometry_pose(2) = msg->pose.pose.orientation.z;

std::cout << "\nodometry_pose_vector\n" << odometry_pose << "\n";

}

But how do I extract this information to use it in the SLAM update ...

I've upvoted you, so you can now probably upload the image.

Thank you!